This topic provides answers to some frequently asked questions about batch synchronization.

Overview

You can search this topic by keyword to find similar common questions and view their solutions.

Network connectivity

Why is the network connectivity test of a data source successful, but the batch synchronization task that uses the data source fails to be run?

What do I do if the error message "Communications link failure" is returned when I run a batch synchronization task to read data from or write data to a MySQL data source?

What do I do if the same batch synchronization task fails to be run occasionally?

Inappropriate resource configuration

What do I do if the error message "[TASK_MAX_SLOT_EXCEED]:Unable to find a gateway that meets resource requirements. 20 slots are requested, but the maximum is 16 slots" is returned when I run a batch synchronization task to synchronize data?

What do I do if the error message "OutOfMemoryError: Java heap space" is returned when I run a batch synchronization task to synchronize data?

Conflict during task running

What do I do if the error message "Duplicate entry 'xxx' for key 'uk_uk_op'" is returned when I run a batch synchronization task to synchronize data?

Timeout error

What do I do if the error message "MongoDBReader$Task - operation exceeded time limit com.mongodb.MongoExecutionTimeoutException: operation exceeded time limit" is returned when I run a batch synchronization task to synchronize data?

What do I do if a batch synchronization task runs for an extended period of time?

Resource group change

How do I change the resource group that is used to run a batch synchronization task of Data Integration?

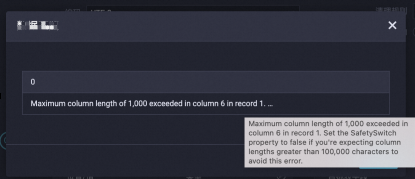

Dirty data

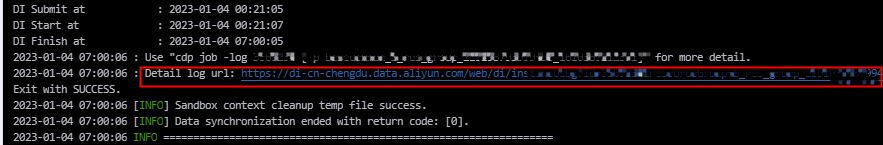

How do I locate and handle dirty data?

How do I view dirty data?

If the number of dirty data records generated during batch synchronization exceeds the specified upper limit, is none of the data synchronized?

How do I handle a dirty data error that is caused by encoding format configuration issues or garbled characters?

Retention of source table information in a destination table

When the system automatically creates a mapped destination table, does it retain the information of the source table, such as the not-null properties and default values of its fields?

Shard key

Can I use a composite primary key as a shard key for a batch synchronization task?

SSRF attack

What do I do if an SSRF attack is detected in a batch synchronization task and the error message "Task have SSRF attacks" is returned?

Writing DATE-type data

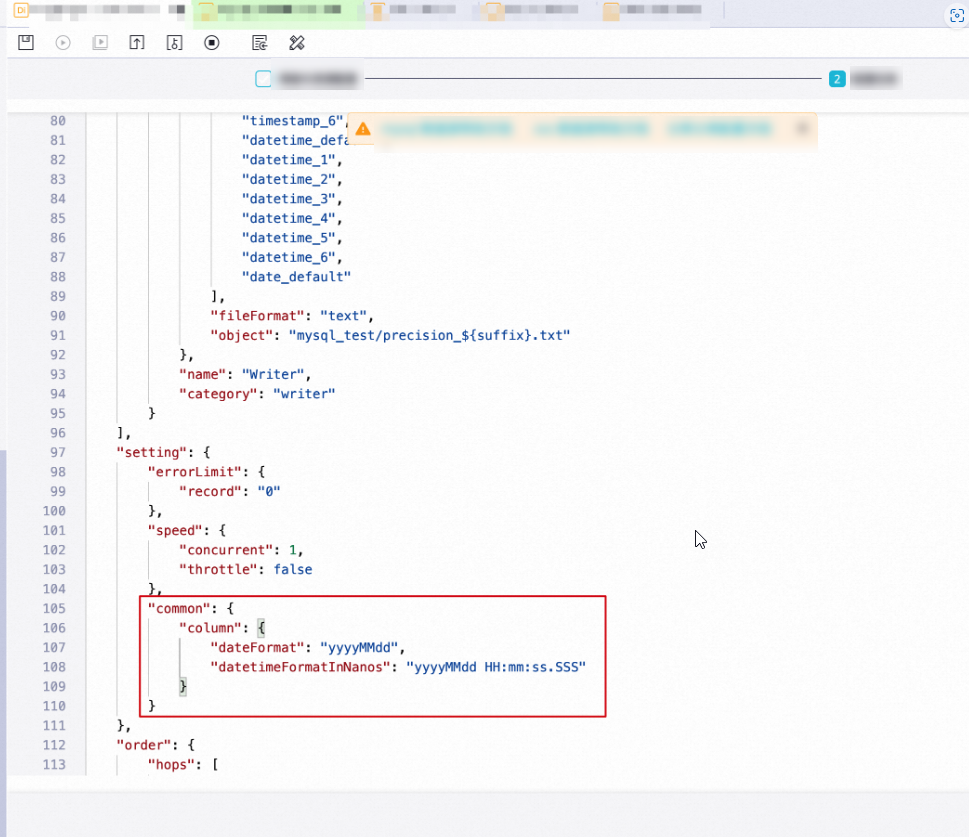

How do I retain the millisecond part or a specified custom date or time format when I use a batch synchronization task to synchronize data of the DATE or TIME type to text files?
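
The sketch below illustrates why the millisecond part can disappear: a value formatted to whole seconds simply drops it, while an explicit pattern keeps it. It is a minimal Python illustration of the formatting itself, not of the writer's configuration; the sample value and the custom pattern are hypothetical, and the setting that controls date formatting in the destination writer is not shown.

```python
from datetime import datetime

# Hypothetical sample DATE/TIME value that carries a millisecond part (678 ms).
value = datetime(2024, 1, 2, 3, 4, 5, 678000)

# Formatting to whole seconds silently drops the millisecond part.
seconds_only = value.strftime("%Y-%m-%d %H:%M:%S")

# %f yields microseconds (6 digits); keeping the first 3 gives milliseconds.
with_millis = value.strftime("%Y-%m-%d %H:%M:%S.%f")[:-3]

# A hypothetical custom pattern: compact date, compact time, then milliseconds.
custom = value.strftime("%Y%m%d %H%M%S.") + f"{value.microsecond // 1000:03d}"

print(seconds_only)  # 2024-01-02 03:04:05
print(with_millis)   # 2024-01-02 03:04:05.678
print(custom)        # 20240102 030405.678
```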

MaxCompute

What are the items that I must take note of when I add a source field in a batch synchronization task that synchronizes data from a MaxCompute table?

How do I configure a batch synchronization task to read data from partition key columns in a MaxCompute table?

How do I configure a batch synchronization task to read data from multiple partitions in a MaxCompute table?

How do I perform operations such as filtering fields, reordering fields, and setting empty fields to null when I run a batch synchronization task to synchronize data to MaxCompute?

What do I do if the number of fields that I want to write to a destination MaxCompute table is greater than the number of fields in the table?

What precautions must I take when I configure partition information for a destination MaxCompute table?

How do I ensure the idempotence of data that is written to MaxCompute in task rerun and failover scenarios?
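
Idempotence here means that rerunning a task with the same input leaves the destination in the same state. The following minimal Python sketch contrasts an append-style write with one that clears the target partition before writing; the in-memory `table`, the helper functions, and the partition value `pt=20240101` are hypothetical stand-ins, not MaxCompute APIs.

```python
# Hypothetical in-memory stand-in for a partitioned table: partition spec -> rows.
table = {}

def write_append(partition, rows):
    # Appends to whatever the partition already holds.
    table.setdefault(partition, []).extend(rows)

def write_overwrite(partition, rows):
    # Clears the target partition first, so a rerun replaces the earlier output.
    table[partition] = list(rows)

batch = [(1, "a"), (2, "b")]

# Simulate a task that succeeds once and is then rerun with the same input.
for _ in range(2):
    write_append("pt=20240101", batch)
print(len(table["pt=20240101"]))  # 4: the rerun duplicated every row

table.clear()
for _ in range(2):
    write_overwrite("pt=20240101", batch)
print(len(table["pt=20240101"]))  # 2: same result no matter how often it runs
```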

What do I do if the error message "The download session is expired" is returned when I run a batch synchronization task to read data from a MaxCompute table?

What do I do if the error message "Error writing request body to server" is returned when I run a batch synchronization task to write data to a MaxCompute table?

MySQL

How do I configure a batch synchronization task to synchronize data from tables in sharded MySQL databases to the same MaxCompute table?

What do I do if the Chinese characters that are synchronized to a MySQL table contain garbled characters because the encoding format of the related MySQL data source is utf8mb4?

What do I do if the error message

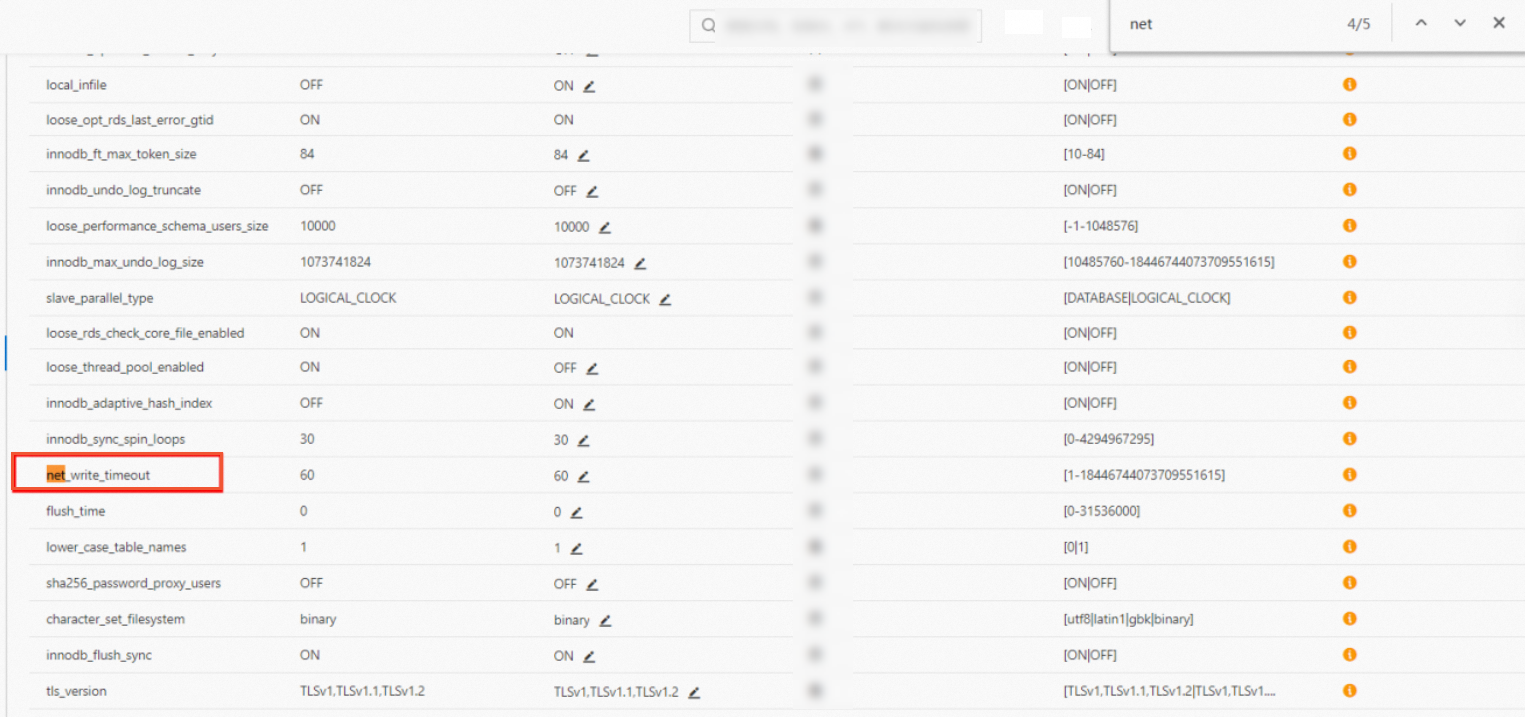

Application was streaming results when the connection failed. Consider raising value of 'net_write_timeout/net_read_timeout' on the server

is returned when I run a batch synchronization task to read data from or write data to ApsaraDB RDS for MySQL?

What do I do if the error message "[DBUtilErrorCode-05]ErrorMessage: Code:[DBUtilErrorCode-05]Description:[Failed to write data to the specified table.]. - com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: No operations allowed after connection closed" is returned when I run a batch synchronization task to synchronize data to a MySQL data source?

What do I do if the error message "The last packet successfully received from the server was 902,138 milliseconds ago" is returned when I run a batch synchronization task to read data from a MySQL data source?

PostgreSQL

What do I do if the error message "org.postgresql.util.PSQLException: FATAL: terminating connection due to conflict with recovery" is returned when I run a batch synchronization task to synchronize data from PostgreSQL?

RDS

What do I do if the error message "Host is blocked" is returned when I run a batch synchronization task to synchronize data from an Amazon RDS data source?

MongoDB

What do I do if an error occurs when I use the root user to add a MongoDB data source?

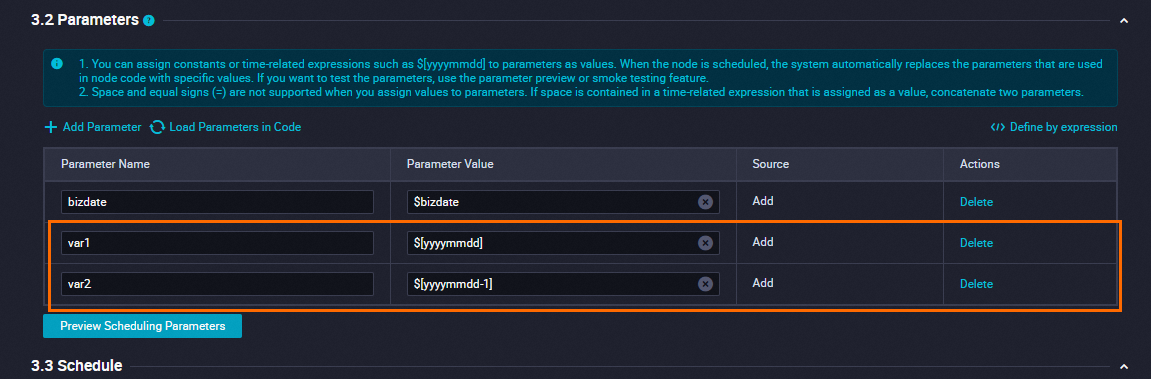

How do I convert the values of the variables in the query parameter into values of the TIMESTAMP data type when I synchronize incremental data from a table of a MongoDB database?

After data is synchronized from a MongoDB data source to a destination, the time values of the data are 8 hours ahead of the original values. What do I do?
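
One common cause of an exact 8-hour shift, offered here as an assumption rather than a diagnosis, is that MongoDB stores dates as UTC instants while the destination reads or renders them as UTC+8 wall-clock time. The minimal Python sketch below shows that the two representations denote the same instant but differ by 8 hours on the clock; the sample date and the UTC+8 offset are illustrative.

```python
from datetime import datetime, timedelta, timezone

# MongoDB stores BSON Date values as UTC instants; this sample value is illustrative.
stored = datetime(2024, 1, 1, 0, 0, 0, tzinfo=timezone.utc)

# Assumption: the destination renders times in UTC+8 (for example, China Standard Time).
utc_plus_8 = timezone(timedelta(hours=8))
shown = stored.astimezone(utc_plus_8)

# Both objects denote the same instant...
print(shown == stored)  # True
# ...but the wall-clock text differs by 8 hours.
print(stored.strftime("%Y-%m-%d %H:%M:%S"))  # 2024-01-01 00:00:00
print(shown.strftime("%Y-%m-%d %H:%M:%S"))   # 2024-01-01 08:00:00

# If the destination keeps only the wall-clock text without a zone, the gap persists.
gap = shown.replace(tzinfo=None) - stored.replace(tzinfo=None)
print(gap)  # 8:00:00
```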

What do I do if a batch synchronization task fails to synchronize data changes in a MongoDB data source to a destination?

Is MongoDB Reader case-sensitive to the names of fields from which I want to synchronize data?

How do I configure a timeout period for MongoDB Reader?

What do I do if the error message "no master" is returned when I run a batch synchronization task to synchronize data from a MongoDB data source?

What do I do if the error message "MongoExecutionTimeoutException: operation exceeded time limit" is returned when I run a batch synchronization task to synchronize data from a MongoDB data source?

What do I do if the error message "DataXException: operation exceeded time limit" is returned when I run a batch synchronization task to synchronize data from a MongoDB data source?

What do I do if the error message "no such cmd splitVector" is returned when I run a batch synchronization task to synchronize data from MongoDB?

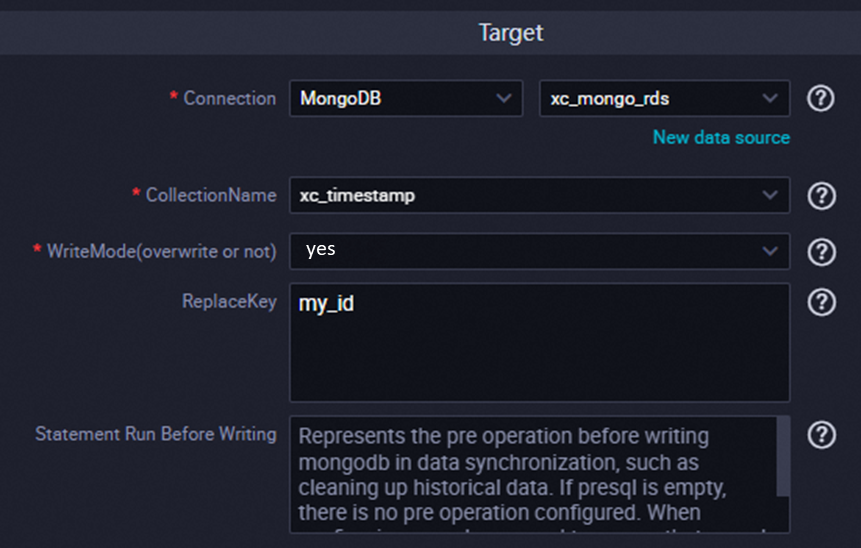

What do I do if the following error message is returned when I run a batch synchronization task to synchronize data to MongoDB: After applying the update, the (immutable) field '_id' was found to have been altered to _id: "2"?

Redis

What do I do if the error message "Code:[RedisWriter-04], Description:[Dirty data]. - source column number is in valid!" is returned when I write data to Redis in hash mode?

OSS

Is the number of OSS objects from which OSS Reader can read data limited?

How do I remove the random strings that appear in the data I write to OSS?

What do I do if the error message "AccessDenied The bucket you access does not belong to you" is returned when I run a batch synchronization task to synchronize data from OSS?

Hive

What do I do if the error message "Could not get block locations" is returned when I run a batch synchronization task to synchronize data to an on-premises Hive data source?

DataHub

What do I do if data fails to be written to DataHub because the amount of data that I want to write at a time exceeds the upper limit?

LogHub

Why is no data obtained when I run a batch synchronization task to synchronize data from a LogHub data source whose fields contain values?

Why is some data missed when I run a batch synchronization task to read data from a LogHub data source?

What do I do if the LogHub fields that are read based on the field mappings configured for a batch synchronization task are not the expected fields?

Lindorm

Is historical data replaced each time data is written to Lindorm in bulk mode provided by Lindorm?

Elasticsearch

How do I query all fields in an index of an Elasticsearch cluster?

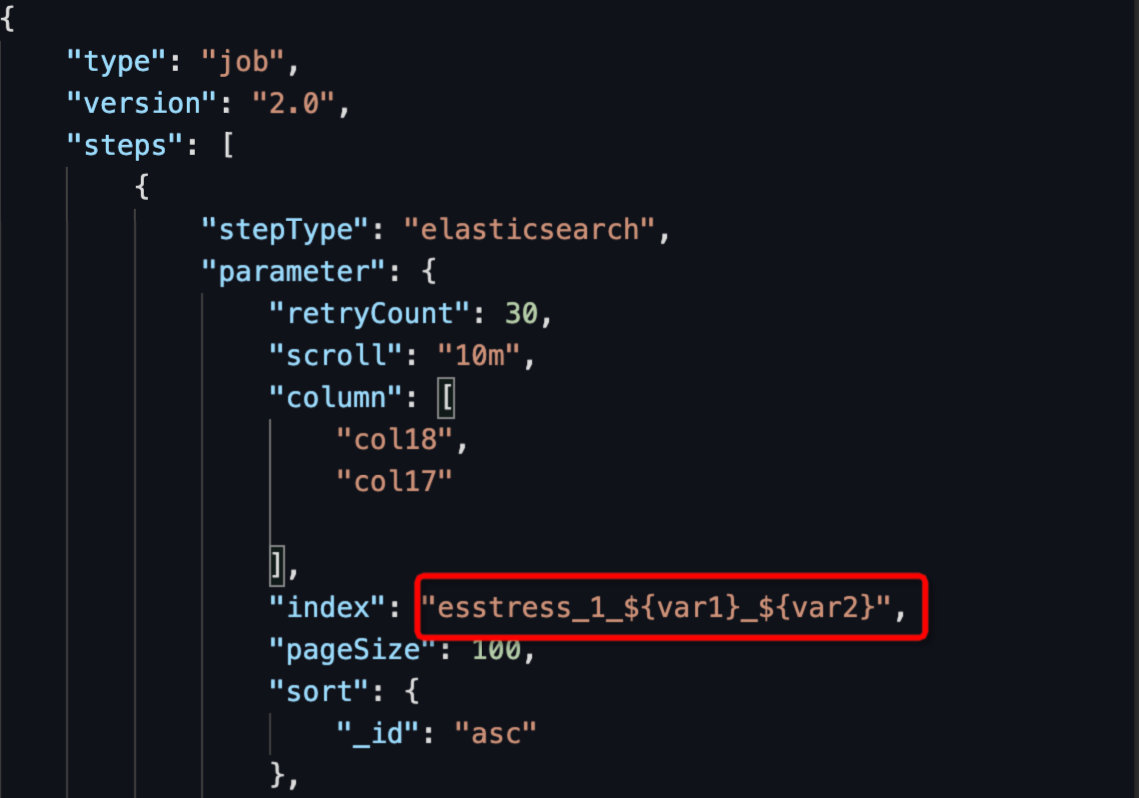

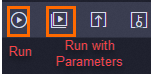

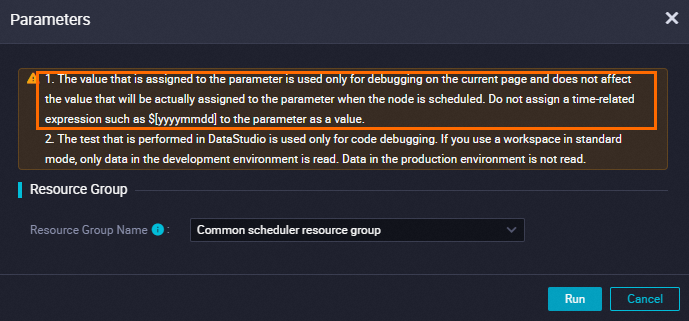

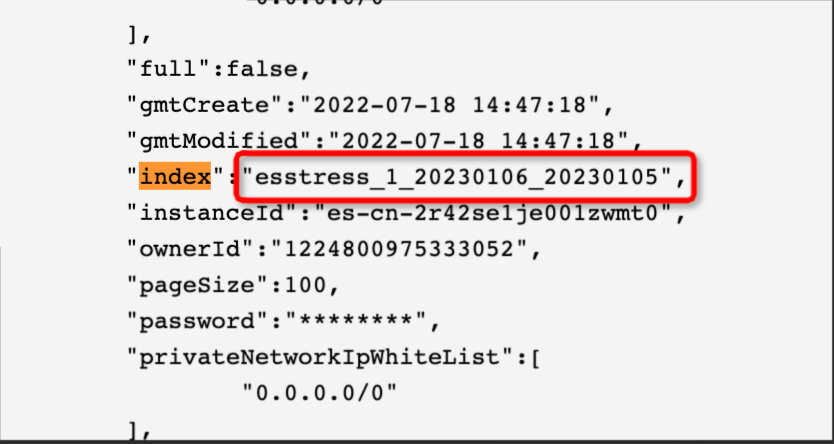

How do I configure a batch synchronization task to synchronize data from indexes with dynamic names in an Elasticsearch data source to another data source?

How do I configure Elasticsearch Reader to synchronize the properties of object fields or nested fields, such as object.field1?

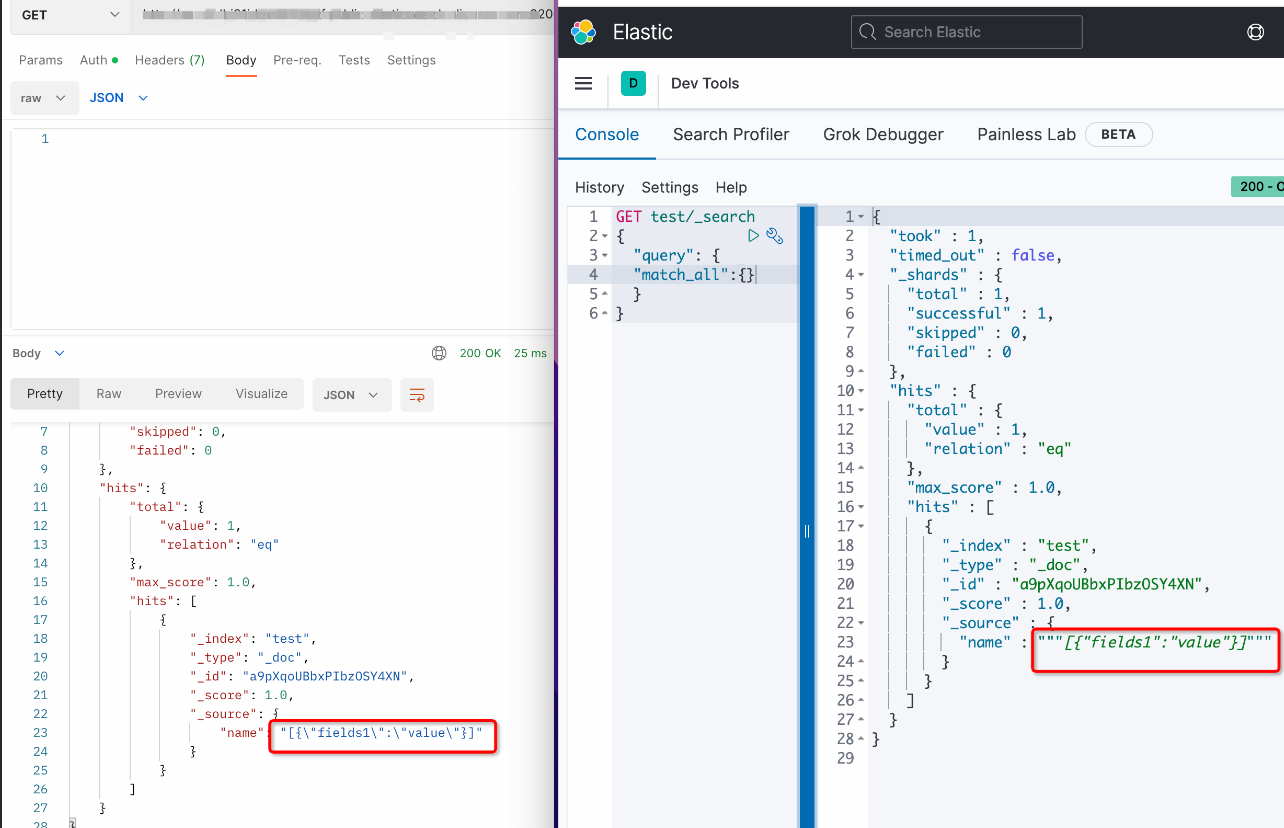

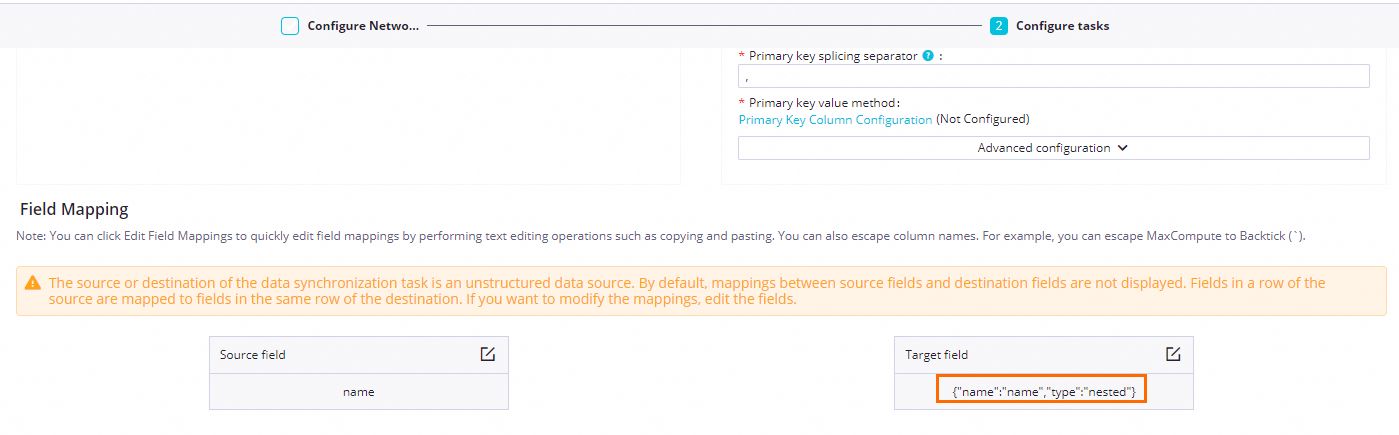

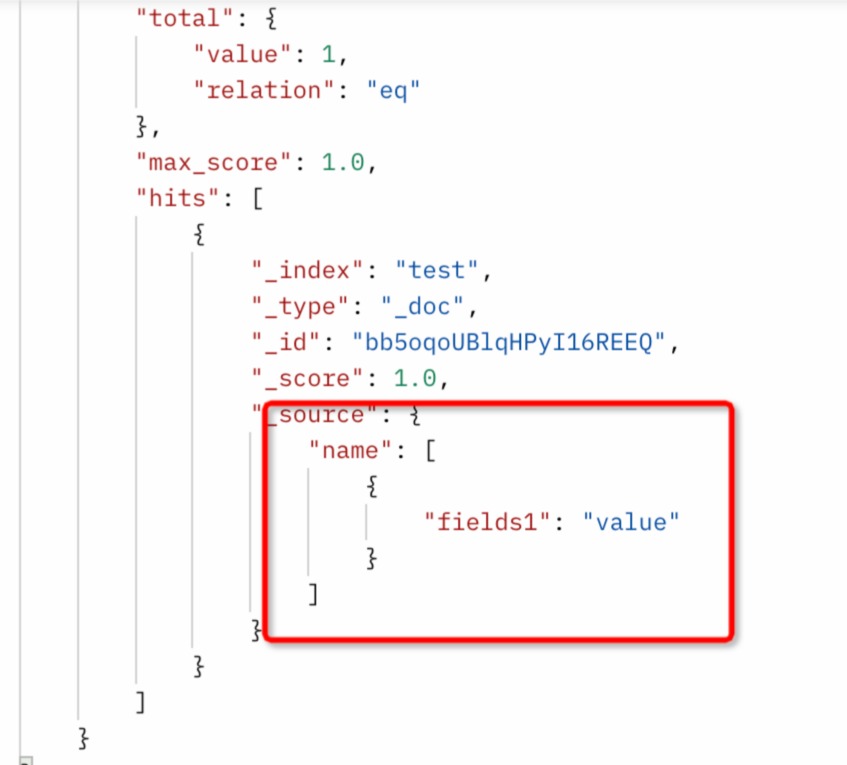

What do I do if data of a string type in a MaxCompute data source is enclosed in double quotation marks (") after the data is synchronized to an Elasticsearch data source? How do I configure the JSON strings read from a MaxCompute data source to be written to nested fields in an Elasticsearch data source?

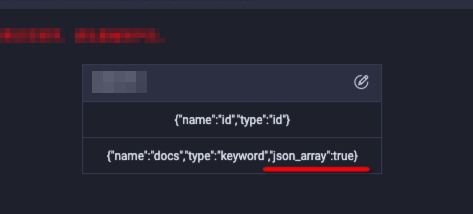

How do I configure a batch synchronization task to write a string such as "[1,2,3,4,5]" from a data source to an Elasticsearch data source as an array?
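
Elasticsearch has no dedicated array data type: any field can hold multiple values, but only if the document actually contains a JSON array. The minimal Python sketch below shows the difference between writing the text "[1,2,3,4,5]" as-is and parsing it first; the field name `values` is hypothetical, and how the writer itself is configured to perform this parsing is not shown.

```python
import json

# The source column holds a JSON-encoded array as plain text.
raw = "[1,2,3,4,5]"

# Written as-is, the value stays a single string in the document.
as_string = {"values": raw}

# Parsed first, it becomes a real array that Elasticsearch indexes as multiple values.
as_array = {"values": json.loads(raw)}

print(json.dumps(as_string))  # {"values": "[1,2,3,4,5]"}
print(json.dumps(as_array))   # {"values": [1, 2, 3, 4, 5]}
```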

Each time data is written to Elasticsearch, an unauthorized request is sent, and the request fails because the username verification fails. As a result, a large number of audit logs are generated every day because all the requests are logged. What do I do?

How do I configure a batch synchronization task to synchronize data to fields of a date data type in an Elasticsearch data source?

What do I do if a write error occurs when the type of a field is set to version in the configuration of Elasticsearch Writer?

What do I do if the error message

ERROR ESReaderUtil - ES_MISSING_DATE_FORMAT, Unknown date value. please add "dataFormat". sample value:

is returned when I run a batch synchronization task to synchronize data from an Elasticsearch data source?

What do I do if the error message "com.alibaba.datax.common.exception.DataXException: Code:[Common-00]" is returned when I run a batch synchronization task to synchronize data from an Elasticsearch data source?

What do I do if the error message "version_conflict_engine_exception" is returned when I run a batch synchronization task to synchronize data to an Elasticsearch data source?

What do I do if the error message "illegal_argument_exception" is returned when I run a batch synchronization task to synchronize data to an Elasticsearch data source?

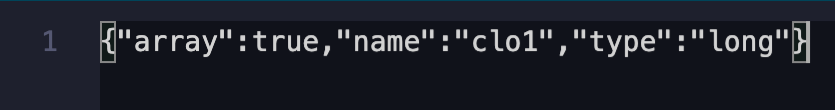

What do I do if the error message "dense_vector" is returned when I run a batch synchronization task to synchronize data from fields of an array data type in a MaxCompute data source to an Elasticsearch data source?

Why do the settings that are configured for Elasticsearch Writer not take effect during the creation of an index?

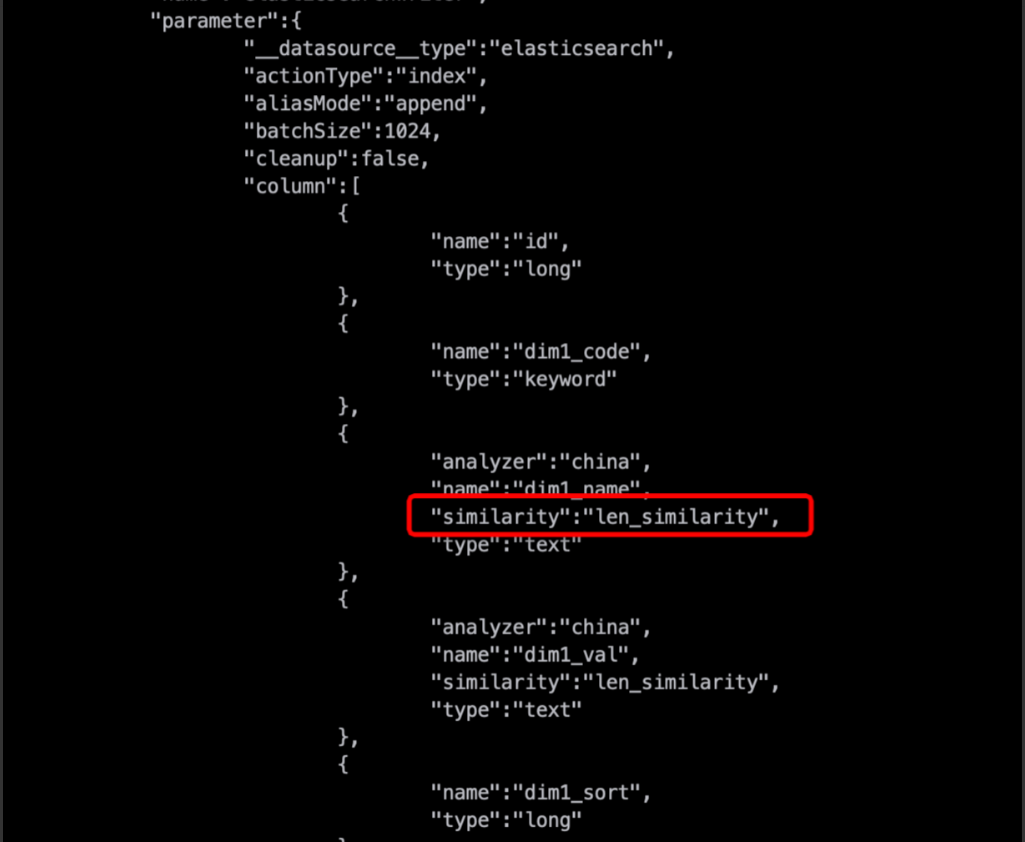

The property type of a field in a self-managed Elasticsearch index is keyword, but the type of the child property of the field is changed to keyword after the related batch synchronization task is run with the cleanup=true setting configured. Why does this happen?

Kafka

I configured the endDateTime parameter to specify the end time for reading data from a Kafka data source, but some data that is read is generated at a point in time later than the specified end time. What do I do?

What do I do if a batch synchronization task used to synchronize data from a Kafka data source does not read data or runs for a long period of time even if only a small amount of data is stored in the Kafka data source?

RestAPI

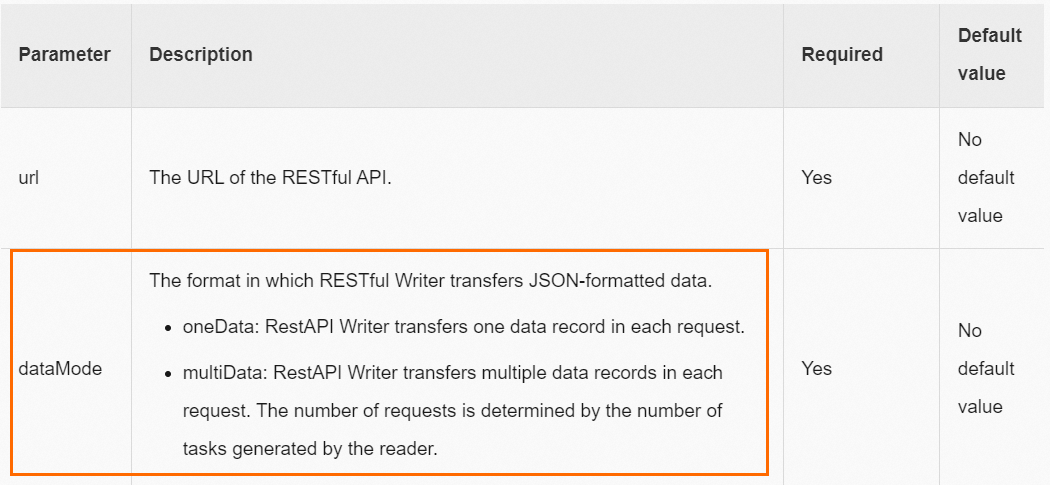

What do I do if the error "JSON data returned based on the path:[] condition is not of the ARRAY type" is returned when I use RestAPI Writer to write data?

Configuration of Tablestore Writer

How do I configure Tablestore Writer to write data to a destination table that contains auto-increment primary key columns?

Configuration of a time series model

How do I use _tags and is_timeseries_tag in the configurations of a time series model to read or write data?

Configuration of custom table names

How do I specify custom table names in a batch synchronization task?

Failure to find the desired table

What do I do if the table that I want to select is not displayed when I configure a batch synchronization task by using the codeless UI?

Keyword in a table name

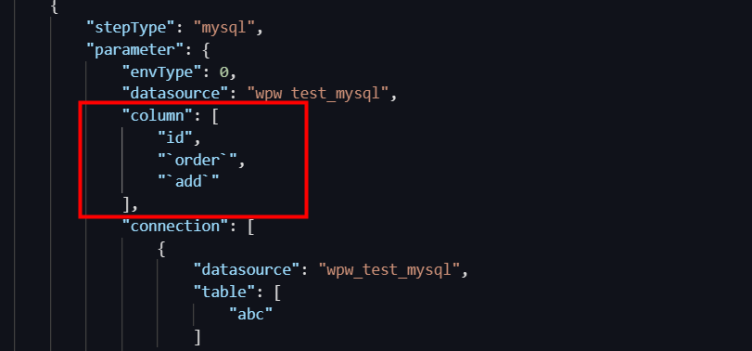

What do I do if a batch synchronization task fails to be run because the name of a column in the source table is a keyword?
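
For a MySQL source, for example, a column whose name is a reserved word such as `order` must be quoted with backticks wherever it appears in SQL. The minimal Python sketch below shows that quoting rule, including doubling any backtick inside the name; the helper `quote_mysql_identifier` and the table `t_orders` are hypothetical, and other databases use other quote characters (for example, double quotes in PostgreSQL).

```python
def quote_mysql_identifier(name: str) -> str:
    # MySQL quotes identifiers with backticks; a literal backtick inside is doubled.
    return "`" + name.replace("`", "``") + "`"

# "order" and "desc" are reserved words in MySQL; t_orders is a hypothetical table.
columns = ["id", "order", "desc"]
select = (
    "SELECT "
    + ", ".join(quote_mysql_identifier(c) for c in columns)
    + " FROM "
    + quote_mysql_identifier("t_orders")
)
print(select)  # SELECT `id`, `order`, `desc` FROM `t_orders`
```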

Column addition to a source table

What do I do if a field is added to or updated in a source table of a batch synchronization task?

Field mapping

What do I do if the error message "plugin xx does not specify column" is returned when I run a batch synchronization task to synchronize data?

What do I do if an error message is displayed when I preview field mappings of an unstructured data source?

Index

What do I do if a full scan for a MaxCompute table slows down data synchronization because no index is added in the WHERE clause?

TTL modification

Can I use only the ALTER TABLE statement to modify the TTL of a table from which data needs to be synchronized?

Field aggregation by using a function

Can I use a function supported by a source to aggregate fields when I synchronize data by using an API operation? For example, can I use a function supported by a MaxCompute data source to aggregate fields a and b in a MaxCompute table into a primary key for synchronizing data to Lindorm?

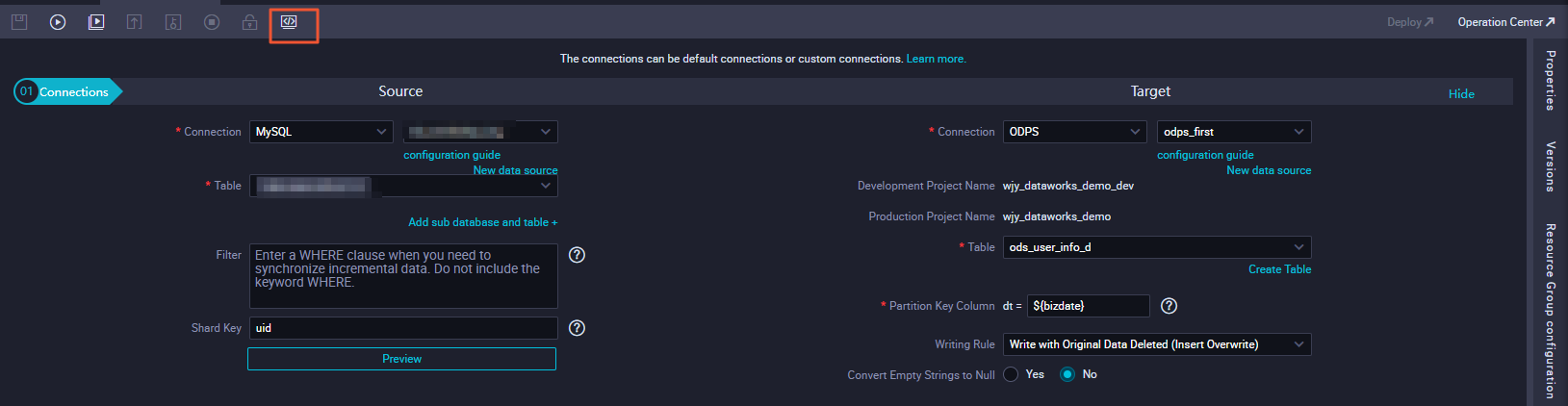

icon in the top toolbar to switch to the code editor.

The default delimiter is "-,-".

Configure the following setting:
