Link Management

Link Snapshot Platform

- Enter a web page link to automatically generate a snapshot

- Manage web page links with tags

相关文章推荐

|

|

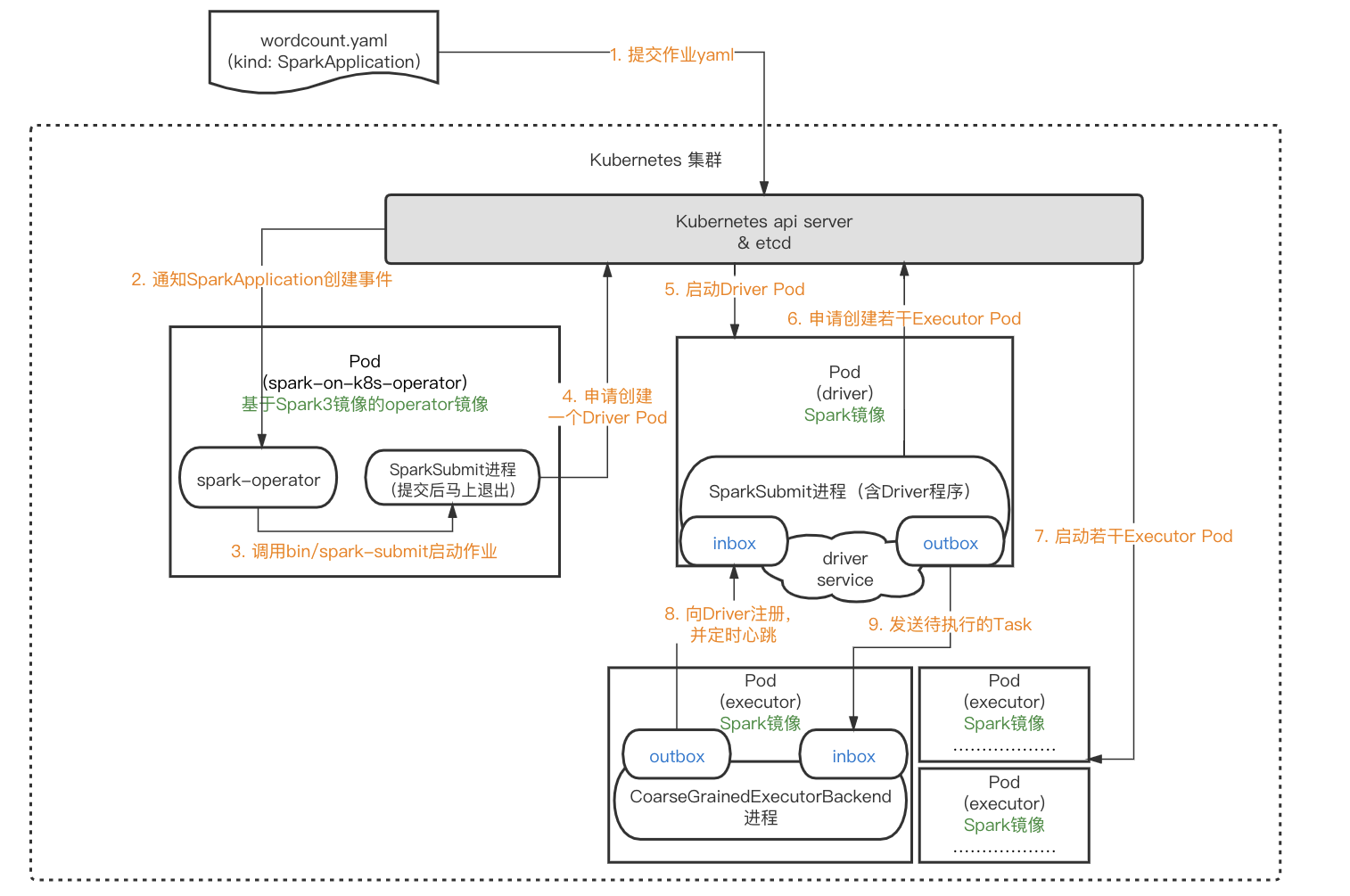

深情的葡萄酒 · 使用ACK One ...· 3 天前 · |

|

|

焦虑的单车 · 初识etcd | 极客熊生· 3 天前 · |

|

|

老实的香菇 · CoreOS發布新版Tectonic平臺,新 ...· 3 天前 · |

|

|

玩命的小虾米 · 红帽公布CoreOS与红帽 ...· 3 天前 · |

|

|

俊逸的草稿本 · Istio 运维实战系列(1):应用容器对 ...· 4 天前 · |

|

|

纯真的核桃 · 融了14轮的地平线赴港IPO | 投中网· 1 月前 · |

|

|

不爱学习的柠檬 · 钢铁雄心4德国怎样打法国英国美国苏联(德国玩 ...· 2 月前 · |

|

|

欢乐的领带 · 严弘:中科大少年班走出来的金融教授 - ...· 3 月前 · |

|

|

从容的柿子 · “国防七子”、“C9联盟”、“华东五虎”,中 ...· 4 月前 · |

|

|

风度翩翩的西装 · 按键精灵脚本问题:第0行:没有权限: ...· 5 月前 · |