
Source: https://techzone.vmware.com/resource/deploying-hardware-accelerated-graphics-vmware-horizon-7

低调的鸵鸟 · 2 years ago

Engineers, designers, and scientists have traditionally relied on dedicated graphics workstations to perform the most demanding tasks, such as manipulating 3D models and visually analyzing large data sets. These standalone workstations carry high acquisition and maintenance costs. In addition, in areas such as oil and gas, space exploration, aerospace, engineering, science, and manufacturing, individuals with these advanced requirements must work in the same physical location as the workstation.

This guide describes hardware-accelerated graphics in VMware virtual desktops in VMware Horizon®. It begins with typical use cases and matches these use cases to the three types of graphics acceleration, explaining the differences. Later sections provide installation and configuration instructions, as well as best practices and troubleshooting.

Note: This guide describes hardware-accelerated graphics in a VMware Horizon environment that uses a VMware vSphere® infrastructure.

Moving the graphics acceleration hardware from the workstation to a server is a key architectural innovation. This shift changes the computing metaphor for graphics processing, putting the additional compute, memory, networking, and security advantages of the data center at the disposal of the user, so that complex models and very large data sets can be accessed and manipulated from virtually anywhere.

With appropriate network bandwidth and suitable remote client devices, IT can now offer the most advanced users an immersive 3D-graphics experience while freeing them from the limitations of the old computing metaphor:

- Fewer physical resources are needed.

- The wait time to open complex models or run simulations is greatly reduced.

- Users are no longer tied to a single physical location.

In addition to handling the most demanding graphical workloads, hardware acceleration can also reduce CPU usage for less demanding workloads, such as basic desktops and published applications, and for video encoding and decoding, which includes the video encoding used by the default Blast Extreme remote display protocol.

VMware Horizon provides a platform to deliver a virtual desktop solution as well as an enterprise-class application-publishing solution. Horizon features and components, such as the Blast Extreme display protocol, instant-clone provisioning, VMware App Volumes™ application delivery, and VMware Dynamic Environment Manager™, are also integrated into Remote Desktop Services to provide a seamless user experience and an easy-to-manage, scalable solution.

There are three types of graphics acceleration for Horizon:

- Virtual Shared Graphics Acceleration

- Virtual Shared Pass-Through Graphics Acceleration

- Virtual Dedicated Graphics Acceleration

Examples of GPUs supported for vDGA in Horizon 7 and later, with vSphere 7.x and 6.7 Update 3:

- AMD FirePro S7100X/S7150/S7150X2

- Intel Iris Pro Graphics P580/P6300

- NVIDIA Quadro M5000/P6000, Tesla M6/M10/M60/P4/P6/P40/P100/V100/T4, and RTX6000/8000

Knowledge workers are best matched with one of the following:

- Virtual shared pass-through graphics acceleration (MxGPU or vGPU) – When performance and features (hardware video encoding and decoding, or DirectX/OpenGL levels) matter most.

- Virtual shared graphics acceleration (vSGA) – When consolidation matters most.

Designers are best matched with one of the following:

- Virtual shared pass-through graphics acceleration (MxGPU or vGPU) – When availability or consolidation matters most.

- Virtual dedicated graphics acceleration (vDGA) – When every bit of performance counts.

Components to install and configure for graphics acceleration:

- ESXi 6.x or 7.x host

- Virtual machine

- Guest operating system

- Horizon pool and farm settings

- License server

For vSGA or vGPU, prepare the ESXi host as follows:

- Install the graphics card on the ESXi host.

- Put the host in maintenance mode.

- If you are using an NVIDIA Tesla P card, make sure ECC is turned off. If you are using an NVIDIA Tesla M card, make sure the card is set to graphics mode (these cards are in compute mode by default) with GpuModeSwitch, which comes as a bootable ISO or as a VIB:

  - Install GpuModeSwitch without an NVIDIA driver installed: `esxcli software vib install --no-sig-check -v /<path_to_vib>/NVIDIA-GpuModeSwitch-1OEM.xxx.0.0.xxxxxxx.x86_64.vib`

  - Reboot the host.

  - Change all GPUs to graphics mode: `gpumodeswitch --gpumode graphics`

  - Remove GpuModeSwitch: `esxcli software vib remove -n NVIDIA-VMware_ESXi_xxx_GpuModeSwitch_Driver`

- Install the GPU vSphere Installation Bundle (VIB): `esxcli software vib install -v /<path_to_vib>/NVIDIA-VMware_ESXi_xxx_Host_Driver_xxx.xx-1OEM.xxx.0.0.xxxxxxx.vib`

  If you are using ESXi 6.0, the VIB files for vSGA and vGPU are separate; with ESXi 6.5, there is a single VIB for both vSGA and vGPU.

- Reboot and take the host out of maintenance mode.

- If you are using vSphere 6.5 or later and an NVIDIA card:

  - In the vSphere Web Client, navigate to Host > Configure > Hardware > Graphics > Graphics Device > Edit icon. The Edit Host Graphics Settings window appears.

  - Select Shared Direct for vGPU, or Shared for vSGA.

Installation and Configuration Recommendations for MxGPU

- Install the graphics card on the ESXi host.

- Put the host in maintenance mode.

- In the BIOS of the ESXi host, verify that Single-Root IO Virtualization (SR-IOV) is enabled and that one of the following is also enabled:

- Intel Virtualization Technology support for Direct I/O (Intel VT-d)

- AMD input–output memory management unit (IOMMU)

- Browse to the location of the AMD FirePro VIB driver and AMD VIB install utility: `cd /<path_to_vib>`

- Make the VIB install utility executable, and run it: `chmod +x mxgpu-install.sh && sh mxgpu-install.sh -i`

- The script shows three options; select the option that suits your environment: `Enter the configuration mode([A]uto/[H]ybrid/[M]anual,default:A)A`

- The script asks for the number of virtual functions (VFs); enter the number of users you want to run on a GPU: `Please enter number of VFs: (default:4): 8`

- The script asks whether you want to keep performance fixed, independent of the number of active VMs; answer Yes or No according to your requirements: `Do you want to enable Predictable Performance? ([Y]es/[N]o,default:N)N`

  The script output continues (…) and ends with `Done` and `The configuration needs a reboot to take effect`.

- Reboot and take the host out of maintenance mode.

Installation and Configuration Recommendations for vDGA

- Install the graphics card on the ESXi host.

- Verify that Intel VT-d or AMD IOMMU is enabled in the BIOS of the ESXi host.

-

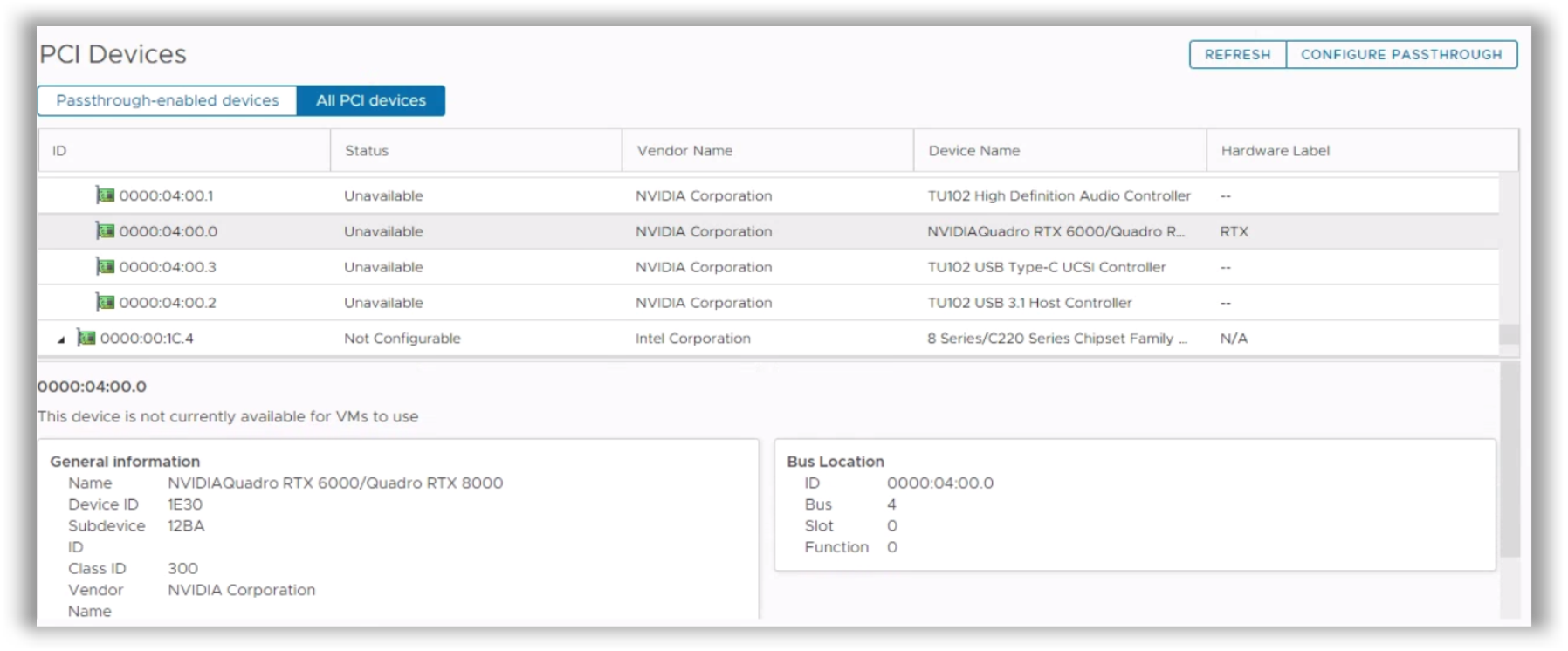

To enable pass-through for the GPU, in the vSphere Web Client, navigate to

Host

>

Configure

>

Hardware

>

PCI Devices

>

Edit

.

The All PCI Devices window appears.

-

Select the check box for the GPU, and reboot.

Starting-point vCPU counts by user type:

- Knowledge workers: 2

- Power users: 4

- Designers: 6

Starting-point memory by user type:

- Knowledge workers: 2 GB

- Power users: 4 GB

- Designers: 8 GB

For vSGA, configure the VM as follows:

- Enable 3D graphics by selecting Enable 3D Support.

- Set 3D Renderer to Automatic or Hardware.

- Automatic – Uses hardware acceleration if there is a capable, and available, hardware GPU in the host that the VM is starting in. However, if a hardware GPU is not available, the VM uses software 3D rendering for any 3D tasks. The Automatic option allows the VM to be started on, or migrated (using vSphere vMotion) to any host (vSphere 5.0 or later), and to use the best solution available on that host.

- Hardware – Uses only hardware-accelerated GPUs. If a hardware GPU is not present in a host, the VM will not start, or you will not be able to perform a live vSphere vMotion migration to that host. vSphere vMotion is possible with the Hardware option as long as the host that the VM is being moved to has a capable and available hardware GPU. This setting can be used to guarantee that a VM will always use hardware 3D rendering when a GPU is available, but that strategy in turn limits the VM to running on only those hosts that have hardware GPUs.

- Select the amount of video memory (3D Memory).

  3D Memory has a default of 96 MB, a minimum of 64 MB, and a maximum of 512 MB.

For vGPU, configure the VM as follows:

- Add a PCI device to the VM and select the appropriate PCI device to enable GPU pass-through on the VM.

  After you add a shared PCI device, you see a list of all supported graphics profile types that are available from the GPU card on the ESXi host. Select the correct profile from the drop-down menu.

  The last part of the GPU profile string (2q, in this example) indicates the amount of frame buffer (VRAM) in gigabytes (0 means 512 MB; in this example, 2 GB) and the type of GRID license required (q, in this example):

  - b – GRID Virtual PC: Virtual GPUs for business desktop computing.

  - a – GRID Virtual Application: Virtual GPUs for Remote Desktop Session Host (RDSH) servers.

  - q – Quadro Virtual Datacenter Workstation (vDWS): Workstation-specific graphics features and accelerations, such as support for up to four 4K monitors and certified drivers for professional applications.

- In the same New PCI Device window, reserve all memory when creating the VM by clicking Reserve all memory.

- For devices with a large BAR size (for example, Tesla P40), vSphere 6.5 or later must be used, and the following advanced configuration parameters must be set on the VM:

  - `firmware="efi"`

  - `pciPassthru.use64bitMMIO="TRUE"`

  - `pciPassthru.64bitMMIOSizeGB="64"`
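The profile-string convention described above (a trailing number for the frame buffer, where 0 means 512 MB, followed by a license-type letter) can be decoded mechanically, for example when auditing which profiles a set of VMs uses. The following Python sketch assumes profile names of the form `<prefix>-<number><letter>`, such as `grid_p40-2q`; that exact naming pattern is an assumption, the function name is hypothetical, and only the b, a, and q license types described in this guide are recognized.

```python
from __future__ import annotations

import re

# License-type letters described in this guide; other suffixes are rejected.
LICENSE_TYPES = {
    "b": "GRID Virtual PC",
    "a": "GRID Virtual Application",
    "q": "Quadro Virtual Datacenter Workstation (vDWS)",
}

# Assumed profile-name shape: <prefix>-<number><letter>, for example "grid_p40-2q".
_PROFILE_RE = re.compile(r"^(?P<prefix>.+)-(?P<size>\d+)(?P<kind>[a-z])$")


def parse_vgpu_profile(name: str) -> tuple[int, str]:
    """Return (frame buffer in MB, license type) for a vGPU profile name."""
    match = _PROFILE_RE.match(name.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized profile name: {name!r}")
    kind = match.group("kind")
    if kind not in LICENSE_TYPES:
        raise ValueError(f"license type {kind!r} is not covered by this guide")
    size = int(match.group("size"))
    # The number is the frame buffer in GB, except that 0 means 512 MB.
    frame_buffer_mb = 512 if size == 0 else size * 1024
    return frame_buffer_mb, LICENSE_TYPES[kind]


if __name__ == "__main__":
    for profile in ("grid_p40-2q", "grid_m10-0b", "grid_m60-8a"):
        mb, license_type = parse_vgpu_profile(profile)
        print(f"{profile}: {mb} MB frame buffer, {license_type}")
```

Rejecting unknown letters, rather than guessing, keeps the sketch limited to what this guide documents.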

For MxGPU or vDGA, configure the VM as follows:

- Add a PCI device (virtual functions are also presented as PCI devices) to the VM, and select the appropriate PCI device to enable GPU pass-through on the VM. With MxGPU, you can also do this by installing the Radeon Pro Settings for the VMware vSphere Client Plug-in.

  Alternatively, you can do this for multiple VMs at once over SSH, as follows:

  - Browse to the location of the AMD FirePro VIB driver and AMD VIB install utility: `cd /<path_to_vib>`

  - Edit vms.cfg: `vi vms.cfg`. Press i and change `.*` to a regular expression (or multiple expressions on multiple lines) that matches the names of your VMs that require a GPU. For example, the following expression matches VM names that include MxGPU, such as WIN10-MxGPU-001 or WIN8.1-MxGPU-002: `.*MxGPU.*`. Press Esc, enter `:wq`, and press Enter to save and quit.

  - Assign the virtual functions to the VMs: `sh mxgpu-install.sh -a assign`. The script lists the eligible VMs (in this example, WIN10-MxGPU-001, WIN10-MxGPU-002, WIN8.1-MxGPU-001, and WIN8.1-MxGPU-002) and asks `These VMs will be assigned a VF, is it OK?[Y/N]`. Enter `y` and press Enter.

- Select Reserve all guest memory (All locked) when creating the VM.
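Before running the assign step, it can help to preview which VM names a `vms.cfg` pattern selects. The following Python sketch approximates that check with Python's `re` module and full-string matching; whether `mxgpu-install.sh` uses the same regular-expression dialect and matching rules is an assumption, so treat the result as a preview only. The function name is hypothetical, and the VM list is the example names used above plus one hypothetical non-GPU VM.

```python
from __future__ import annotations

import re


def eligible_vms(patterns: list[str], vm_names: list[str]) -> list[str]:
    """Return VM names that fully match at least one pattern (one pattern per vms.cfg line)."""
    compiled = [re.compile(pattern) for pattern in patterns if pattern.strip()]
    return [name for name in vm_names if any(rx.fullmatch(name) for rx in compiled)]


if __name__ == "__main__":
    vms = [
        "WIN10-MxGPU-001",
        "WIN10-MxGPU-002",
        "WIN8.1-MxGPU-001",
        "WIN8.1-MxGPU-002",
        "WIN10-Office-001",  # hypothetical VM without a GPU requirement
    ]
    # The default ".*" selects every VM, which is why the guide says to change it.
    print(eligible_vms([".*"], vms))
    print(eligible_vms([".*MxGPU.*"], vms))
```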

For the guest operating system, perform the following installations and configurations.

Installation and Configuration for Windows Guest Operating System

For a Windows guest operating system, install and configure as follows.

- Install Windows 10 or Windows Server 2012 R2, 2016, or 2019, and install all updates. The following installations are also recommended:

  - Install common Microsoft runtimes and features: Before updating Windows in the VM, install all required versions of Microsoft runtimes that are patched by Windows Update and that can run side by side in the image. For example, install:

    - .NET Framework (3.5, 4.5, and so on)

    - Visual C++ Redistributables x86/x64 (2005 SP1, 2008, 2012, and so on)

  - Install Microsoft updates: Install all available updates to Microsoft Windows and other Microsoft products with Windows Update or Windows Server Update Services. You might need to first manually install Windows Update Client for Windows 8.1 and Windows Server 2012 R2: March 2016.

  - Tune Windows with the VMware OS Optimization Tool: Run the VMware OS Optimization Tool with the default options.


- If you are not using vSGA, obtain the GPU drivers from the GPU vendor (with vGPU, this is a matched pair with the VIB file) and install the GPU device drivers (with MxGPU, make sure the GPU Server option is selected) in the guest operating system of the VM.

- Install VMware Tools and Horizon Agent (select 3D RDSH feature for Windows 2012 R2 Remote Desktop Session Hosts) in the guest operating system, and reboot.

Installation and Configuration for Red Hat Enterprise Linux Operating System, vGPU and vDGA

For a Red Hat Enterprise Linux guest operating system, install and configure as follows.

- Install Red Hat Enterprise Linux, install all updates, and reboot.

- Install gcc, kernel makefiles, and headers: `sudo yum install gcc-c++ kernel-devel-$(uname -r) kernel-headers-$(uname -r) -y`

- Turn off libvirt: `sudo systemctl disable libvirtd.service`

- Turn off the open-source nouveau driver:

  - Add `blacklist=nouveau` to the `GRUB_CMDLINE_LINUX` line in `/etc/default/grub` (`sudo vi /etc/default/grub`), or in `/boot/grub/grub.conf` for RHEL 6.x.

  - Add a `blacklist nouveau` line anywhere in `/etc/modprobe.d/blacklist.conf` (`sudo vi /etc/modprobe.d/blacklist.conf`).

  - Generate a new grub config and initramfs: `sudo grub2-mkconfig -o /boot/grub2/grub.cfg`, then `sudo dracut /boot/initramfs-$(uname -r).img $(uname -r) -f`

- Reboot.

- Install the NVIDIA driver: switch to runlevel 3 (`sudo init 3`), run `chmod +x NVIDIA-Linux-x86_64-xxx.xx-grid.run` and then `sudo ./NVIDIA-Linux-x86_64-xxx.xx-grid.run`, and acknowledge all questions.

- (Optional) Install the CUDA Toolkit (run file method recommended), but do not install the included driver.

- Add license server information: `sudo cp /etc/nvidia/gridd.conf.template /etc/nvidia/gridd.conf`, then `sudo vi /etc/nvidia/gridd.conf`. Set `ServerAddress` and `BackupServerAddress` to the DNS names or IP addresses of your license servers, and set `FeatureType` to `1` for vGPU or `2` for vDGA.

- Install Horizon Agent: run `tar -zxvf VMware-horizonagent-linux-x86_64-7.3.0-6604962.tar.gz`, `cd VMware-horizonagent-linux-x86_64-7.3.0-6604962`, and `sudo ./install_viewagent.sh`, and acknowledge all questions.

During the creation of a new server farm in Horizon, configuring a farm for 3D is the same as configuring a normal farm. During the creation of a new desktop pool in Horizon, configure the pool as normal until you reach the Pool Settings section.

- Scroll down the page until you reach the Remote Display Protocol section. In this section, you see the 3D Renderer option.

- For the 3D Renderer option, do one of the following:

- For vSGA, select either Hardware or Automatic.

Automatic uses hardware acceleration if there is a capable, and available, hardware GPU in the host that the VM is starting in. However, if a hardware GPU is not available, the VM uses software 3D rendering for any 3D tasks. The Automatic option allows the VM to be started on, or migrated (using vSphere vMotion) to any host (vSphere 5.0 or later), and to use the best solution available on that host.

Hardware uses only hardware-accelerated GPUs. If a hardware GPU is not present in a host, the VM will not start, or you will not be able to perform a live vSphere vMotion migration to that host. vSphere vMotion is possible with the Hardware option as long as the host that the VM is being moved to has a capable and available hardware GPU. This setting can be used to guarantee that a VM will always use hardware 3D rendering when a GPU is available, but that strategy in turn limits the VM to running only on those hosts that have hardware GPUs.

- For vDGA, select Hardware.

- For vGPU, select NVIDIA GRID VGPU.

- For Horizon 7 version 7.0 or 7.1, configure the amount of VRAM you want each virtual desktop to have and, when using vGPU, select the profile you want to use. With Horizon 7 version 7.1, vGPU can be used with instant clones; however, the profile must match the profile set on the parent VM in the vSphere Web Client.

3D Memory has a default of 96 MB, a minimum of 64 MB, and a maximum of 512 MB.

With Horizon 7 version 7.2 and later, the video memory and vGPU profile are inherited from the VM or VM snapshot.

To better manage the GPU resources available on an ESXi host, examine the current GPU resource allocation. The ESXi command-line query utility `gpuvm` lists the GPUs installed on an ESXi host and displays the amount of GPU memory that is allocated to each VM on that host. Example output of `gpuvm`:

    Xserver unix:0, GPU maximum memory 2076672KB
    pid 118561, VM "Test-VM-001", reserved 131072KB of GPU memory
    pid 664081, VM "Test-VM-002", reserved 261120KB of GPU memory
    GPU memory left 1684480KB

To get a summary of the vGPUs currently running on each physical GPU in the system, run `nvidia-smi`. The following example output (Thu Oct 5 09:28:05 2017, NVIDIA-SMI 384.73, driver version 384.73) shows one Tesla P40 with three VMs using vGPUs:

| GPU | Name | Persistence-M | Bus-Id | Disp.A | Volatile Uncorr. ECC | Fan | Temp | Perf | Pwr: Usage/Cap | Memory-Usage | GPU-Util | Compute M. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | Tesla P40 | On | 00000000:84:00.0 | Off | Off | N/A | 38C | P0 | 60W / 250W | 12305MiB / 24575MiB | 0% | Default |

| GPU | PID | Type | Process name | GPU Memory Usage |
|---|---|---|---|---|
| 0 | 135930 | M+C+G | manual | 4084MiB |
| 0 | 223606 | M+C+G | centos3D004 | 4084MiB |
| 0 | 223804 | M+C+G | centos3D003 | 4084MiB |
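When collecting `gpuvm` output from several hosts, a small parser makes per-VM reservations easier to compare. The following Python sketch assumes the line format of the `gpuvm` example above for a single GPU (hosts with several GPUs print several blocks, which this sketch does not separate). It also checks that maximum memory minus the per-VM reservations equals the memory left, as it does in the example: 2076672 KB minus 131072 KB and 261120 KB leaves 1684480 KB. The function name is hypothetical.

```python
from __future__ import annotations

import re

# Example output copied from the gpuvm listing above.
SAMPLE = """\
Xserver unix:0, GPU maximum memory 2076672KB
pid 118561, VM "Test-VM-001", reserved 131072KB of GPU memory
pid 664081, VM "Test-VM-002", reserved 261120KB of GPU memory
GPU memory left 1684480KB
"""

_MAX_RE = re.compile(r"GPU maximum memory (\d+)KB")
_VM_RE = re.compile(r'pid (\d+), VM "([^"]+)", reserved (\d+)KB of GPU memory')
_LEFT_RE = re.compile(r"GPU memory left (\d+)KB")


def parse_gpuvm(text: str) -> dict:
    """Summarize gpuvm output for one GPU; all memory values are in KB."""
    max_match = _MAX_RE.search(text)
    left_match = _LEFT_RE.search(text)
    if max_match is None or left_match is None:
        raise ValueError("text does not look like gpuvm output")
    reserved = {vm: int(kb) for _pid, vm, kb in _VM_RE.findall(text)}
    maximum = int(max_match.group(1))
    left = int(left_match.group(1))
    return {
        "max_kb": maximum,
        "reserved_kb": reserved,
        "left_kb": left,
        # True when the listed VM reservations account for all used GPU memory.
        "consistent": maximum - sum(reserved.values()) == left,
    }


if __name__ == "__main__":
    summary = parse_gpuvm(SAMPLE)
    for vm, kb in summary["reserved_kb"].items():
        print(f"{vm}: {kb // 1024} MB reserved")
    print(f"left: {summary['left_kb'] // 1024} MB, consistent: {summary['consistent']}")
```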

To monitor vGPU engine usage across multiple vGPUs, run `nvidia-smi vgpu` with the `-u` or `--utilization` option: `nvidia-smi vgpu -u`. For each vGPU, the usage statistics in the following table are reported once every second.

Table 3: Example of Usage Statistics for vGPUs

| GPU Index (gpu) | vGPU ID (vgpu) | Compute % (sm) | Memory Controller Bandwidth % (mem) | Video Encoder % (enc) | Video Decoder % (dec) |
|---|---|---|---|---|---|
| | 11924 | | | | |
| | 11903 | | | | |
| | 11908 | | | | |
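Because `nvidia-smi vgpu -u` prints a row per vGPU every second, it can be convenient to post-process captured output, for example to find the busiest vGPU. The following Python sketch assumes an output layout of header lines starting with `#`, followed by whitespace-separated columns in the order of Table 3 (gpu, vgpu, sm, mem, enc, dec), with `-` for values that are not available; check this against the output of your driver version. The function names are hypothetical, and the utilization numbers in the sample are illustrative, not measurements.

```python
from __future__ import annotations

from typing import NamedTuple, Optional


class VgpuSample(NamedTuple):
    gpu: int            # GPU index
    vgpu: int           # vGPU ID
    sm: Optional[int]   # compute %
    mem: Optional[int]  # memory controller bandwidth %
    enc: Optional[int]  # video encoder %
    dec: Optional[int]  # video decoder %


# Illustrative capture; the utilization values are made up for this example.
SAMPLE = """\
# gpu   vgpu   sm  mem  enc  dec
# Idx     Id    %    %    %    %
    0  11924    6    3    0    0
    0  11903    8    3    0    0
    0  11908    -    -    -    -
"""


def _percent(field: str) -> Optional[int]:
    # "-" means no data, which is not the same as 0 %.
    return None if field == "-" else int(field)


def parse_vgpu_utilization(text: str) -> list[VgpuSample]:
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and header rows
        fields = line.split()
        if len(fields) != 6:
            raise ValueError(f"unexpected row: {line!r}")
        samples.append(
            VgpuSample(int(fields[0]), int(fields[1]), *(_percent(f) for f in fields[2:]))
        )
    return samples


def busiest_by_compute(samples: list[VgpuSample]) -> Optional[VgpuSample]:
    """Return the sample with the highest compute %, ignoring rows without data."""
    known = [s for s in samples if s.sm is not None]
    return max(known, key=lambda s: s.sm) if known else None


if __name__ == "__main__":
    rows = parse_vgpu_utilization(SAMPLE)
    top = busiest_by_compute(rows)
    print(f"{len(rows)} rows; busiest vGPU: {top.vgpu if top else 'n/a'}")
```

Keeping `-` as `None` rather than `0` avoids treating an idle or unreported vGPU as a measured zero.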

If an issue arises with vSGA, vGPU, or vDGA, or if Xorg fails to start, try one or more of the following solutions, in any order.

Verify That the GPU Driver Loads

To verify that the GPU VIB is installed, run one of the following commands:

- For AMD-based GPUs: `esxcli software vib list | grep fglrx`

- For NVIDIA-based GPUs: `esxcli software vib list | grep NVIDIA`

If the VIB is installed correctly, the output resembles the following example:

    NVIDIA-VMware  304.59-1-OEM.510.0.0.799733  NVIDIA  VMwareAccepted  2012-11-14

To verify that the GPU driver loads, run the following command:

- For AMD-based GPUs: `esxcli system module load -m fglrx`

- For NVIDIA-based GPUs: `esxcli system module load -m nvidia`

If the driver is loaded correctly, the output resembles the following example; the `Busy` status indicates that the module is already loaded:

    Unable to load module /usr/lib/vmware/vmkmod/nvidia: Busy

If the GPU driver does not load, check the vmkernel.log (for example, with `vi /var/log/vmkernel.log`). Search for `FGLRX` on AMD hardware or `NVRM` on NVIDIA hardware. An issue with the GPU is often identified in the vmkernel.log.

Verify That Display Devices Are Present in the Host

To make sure that the graphics adapter is installed correctly, run the following command on the ESXi host:

    esxcli hardware pci list -c 0x0300 -m 0xff

The output should resemble the following example, even if some of the particulars differ:

    000:001:00.0
       Address: 000:001:00.0
       Segment: 0x0000
       Bus: 0x01
       Slot: 0x00
       Function: 0x00
       VMkernel Name:
       Vendor Name: NVIDIA Corporation
       Device Name: NVIDIA Quadro 6000
       Configured Owner: Unknown
       Current Owner: VMkernel
       Vendor ID: 0x10de
       Device ID: 0x0df8
       SubVendor ID: 0x103c
       SubDevice ID: 0x0835
       Device Class: 0x0300
       Device Class Name: VGA compatible controller
       Programming Interface: 0x00
       Revision ID: 0xa1
       Interrupt Line: 0x0b
       IRQ: 11
       Interrupt Vector: 0x78
       PCI Pin: 0x69

Check the PCI Bus Slot Order

If you installed a second, lower-end GPU in the server, the order of the cards in the PCIe slots might cause the higher-end card to be used for the ESXi console session. If this occurs, swap the two GPUs between PCIe slots, or change the primary GPU settings in the server BIOS.
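When a host contains more than one display device, such as a low-end console adapter and a high-end GPU, it can help to list each device with its PCI address and current owner before deciding whether to swap slots. The following Python sketch parses `esxcli hardware pci list` output of the kind shown in the previous section into one dictionary per device. It assumes that each device starts with an unindented address line followed by indented `Name: Value` lines, which is an assumption about the exact layout; the function names are hypothetical.

```python
from __future__ import annotations

# Abridged copy of the example output shown earlier (layout assumed).
SAMPLE = """\
000:001:00.0
   Address: 000:001:00.0
   VMkernel Name:
   Vendor Name: NVIDIA Corporation
   Device Name: NVIDIA Quadro 6000
   Current Owner: VMkernel
   Vendor ID: 0x10de
   Device Class: 0x0300
   Device Class Name: VGA compatible controller
"""


def parse_pci_list(text: str) -> list[dict[str, str]]:
    devices: list[dict[str, str]] = []
    for raw in text.splitlines():
        if not raw.strip():
            continue
        if not raw[0].isspace():
            devices.append({})  # an unindented line starts a new device
            continue
        line = raw.strip()
        key, sep, value = line.partition(": ")
        if not sep:
            if not line.endswith(":"):
                continue  # ignore lines that are not "Name: Value" pairs
            key, value = line[:-1], ""  # e.g. "VMkernel Name:" with no value
        if not devices:
            devices.append({})
        devices[-1][key] = value
    return devices


def describe(devices: list[dict[str, str]]) -> list[str]:
    """One summary line per device: address, name, and current owner."""
    return [
        f"{d.get('Address', '?')}  {d.get('Device Name', '?')}  (owner: {d.get('Current Owner', '?')})"
        for d in devices
    ]


if __name__ == "__main__":
    for line in describe(parse_pci_list(SAMPLE)):
        print(line)
```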

Check Xorg Logs

If the correct devices were present when you tried the previous troubleshooting methods, view the Xorg log file to see if there is an obvious issue: `vi /var/log/Xorg.log`

This section describes specific issues that could arise in graphics acceleration deployments, and presents probable solutions.

Problem: `sched.mem.min` error when starting a virtual machine.

Solution: Check `sched.mem.min`. If you get a vSphere error about `sched.mem.min`, add the following parameter to the VMX file of the VM:

    sched.mem.min = "4096"

Note: The number in quotes (4096 in this example) must match the amount of configured VM memory in MB. This example is for a VM with 4 GB of RAM.

Problem: Only one display can be used in Windows 10 with vGPU -0B or -0Q profiles.

Solution: Use a profile that supports more than one virtual display head and has at least 1 GB of frame buffer.

To reduce the possibility of memory exhaustion, vGPU profiles with 512 MB or less of frame buffer support only one virtual display head on a Windows 10 guest OS.

Problem: Unable to use NVENC with vGPU -0B or -0Q profiles.

Solution: If you require NVENC to be enabled, use a profile that has at least 1 GB of frame buffer.

Using the frame buffer for the NVIDIA hardware-based H.264 / HEVC video encoder (NVENC) might cause memory exhaustion with vGPU profiles that have 512 MB or less of frame buffer. To reduce the possibility of memory exhaustion, NVENC is turned off on profiles that have 512 MB or less of frame buffer.

Problem: Unable to load vGPU driver in guest operating system.

Depending on the versions of drivers in use, the vSphere VM's log file reports one of the following errors:

- A version mismatch between guest and host drivers: `vthread-10| E105: vmiop_log: Guest VGX version(2.0) and Host VGX version(2.1) do not match`

- A signature mismatch: `vthread-10| E105: vmiop_log: VGPU message signature mismatch.`

Solution: In the VM, install the latest NVIDIA vGPU release drivers that match the VIB installed on the ESXi host.

Problem: Tesla-based virtual GPU fails to start.

Solution: Ensure that error-correcting code (ECC) is turned off on all GPUs.

Tesla GPUs support ECC, but NVIDIA GRID vGPU does not support ECC memory. If ECC memory is enabled, the NVIDIA GRID vGPU fails to start, and the following error is logged in the vSphere VM's log file:

    vthread10|E105: Initialization: VGX not supported with ECC Enabled.

- Use `nvidia-smi` to list the status of all GPUs, and check whether ECC is reported as enabled on any GPU.

- Change the ECC status to Off on each GPU for which ECC is enabled by running `nvidia-smi -i id -e 0`, where `id` is the index of the GPU as reported by `nvidia-smi`.

- Reboot the host.
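On hosts with several GPUs, the per-GPU command above can be generated from a query instead of typed by hand. The following Python sketch assumes CSV output from `nvidia-smi --query-gpu=index,ecc.mode.current --format=csv,noheader` (lines such as `0, Enabled`); confirm the field name with `nvidia-smi --help-query-gpu` for your driver version. The function name is hypothetical, the sketch only builds the `nvidia-smi -i id -e 0` command strings without running them, and a host reboot is still required afterward.

```python
from __future__ import annotations


def ecc_disable_commands(csv_text: str) -> list[str]:
    """Build 'nvidia-smi -i <index> -e 0' for every GPU that reports ECC as Enabled."""
    commands = []
    for line in csv_text.splitlines():
        if not line.strip():
            continue
        index, _sep, mode = (part.strip() for part in line.partition(","))
        if mode == "Enabled":
            commands.append(f"nvidia-smi -i {int(index)} -e 0")
    return commands


if __name__ == "__main__":
    # Hypothetical query result for a host with three GPUs.
    query_output = "0, Enabled\n1, Disabled\n2, Enabled\n"
    for command in ecc_disable_commands(query_output):
        print(command)
```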

Problem: Benchmark scores for a single vGPU are lower than for a pass-through GPU.

Solution: Turn off the frame rate limiter (FRL) by adding the configuration parameter `pciPassthru0.cfg.frame_rate_limiter` with a value of `0` in the VM's advanced configuration options.

A vGPU incorporates a performance-balancing feature known as the frame rate limiter, which is enabled on all vGPUs. The FRL is used to ensure balanced performance across multiple vGPUs that reside on the same physical GPU. The FRL setting is designed to give a good interactive remote graphics experience but might reduce scores in benchmarks that depend on measuring frame-rendering rates, as compared to the same benchmarks running on a pass-through GPU.

Problem: VMs configured with large memory fail to initialize vGPU when booted.

When starting multiple VMs configured with large amounts of RAM (typically more than 32 GB per VM), a VM might fail to initialize vGPU. The NVIDIA GRID GPU is present in Windows Device Manager but displays a warning sign and the following device status:

    Windows has stopped this device because it has reported problems. (Code 43)

The VMware vSphere VM's log file contains these error messages:

    vthread10|E105: NVOS status 0x29
    vthread10|E105: Assertion Failed at 0x7620fd4b:179
    vthread10|E105: 8 frames returned by backtrace
    vthread10|E105: VGPU message 12 failed, result code: 0x29
    vthread10|E105: NVOS status 0x8
    vthread10|E105: Assertion Failed at 0x7620c8df:280
    vthread10|E105: 8 frames returned by backtrace
    vthread10|E105: VGPU message 26 failed, result code: 0x8

Solution: Add the configuration parameter `pciPassthru0.cfg.enable_large_sys_mem` with a value of `1` in the VM's advanced configuration options. This setting increases the frame buffer reservation so that it can map up to 64 GB of system memory.

A vGPU reserves a portion of the VM's frame buffer for use in GPU mapping of VM system memory. The default reservation is sufficient to support only up to 32 GB of system memory.
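The VM-level fixes in this section, together with the large-BAR parameters described earlier for devices such as the Tesla P40, can be collected into one list of advanced settings. The following Python sketch derives them from the VM's memory size using the rules stated in this guide: `sched.mem.min` equals the configured memory in MB, and `enable_large_sys_mem` is added above 32 GB. The `pciPassthru0` prefix assumes the vGPU is the VM's first PCI pass-through device, and the helper functions are hypothetical.

```python
from __future__ import annotations


def vgpu_advanced_settings(memory_gb: int, *, large_bar: bool = False,
                           disable_frl: bool = False) -> dict[str, str]:
    """Hypothetical helper: collect the VM advanced settings described in this guide."""
    if memory_gb <= 0:
        raise ValueError("memory_gb must be positive")
    settings = {
        # Must match the configured VM memory in MB: 4 GB -> "4096".
        "sched.mem.min": str(memory_gb * 1024),
    }
    if memory_gb > 32:
        # The default reservation maps only up to 32 GB of system memory;
        # per this guide, the setting below raises that to 64 GB.
        settings["pciPassthru0.cfg.enable_large_sys_mem"] = "1"
    if disable_frl:
        # Turns off the frame rate limiter, for example for benchmark comparisons.
        settings["pciPassthru0.cfg.frame_rate_limiter"] = "0"
    if large_bar:
        # Large-BAR devices such as the Tesla P40 (vSphere 6.5 or later).
        settings["firmware"] = "efi"
        settings["pciPassthru.use64bitMMIO"] = "TRUE"
        settings["pciPassthru.64bitMMIOSizeGB"] = "64"
    return settings


def to_vmx_lines(settings: dict[str, str]) -> list[str]:
    """Render settings in the key = "value" style used by VMX files."""
    return [f'{key} = "{value}"' for key, value in settings.items()]


if __name__ == "__main__":
    for line in to_vmx_lines(vgpu_advanced_settings(48, large_bar=True)):
        print(line)
```

Keeping the rules in one function makes it harder to forget `sched.mem.min` after changing a VM's memory size.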

VMware Horizon offers three technologies for hardware-accelerated graphics, each with its own advantages.

- Virtual shared pass-through graphics acceleration (MxGPU or vGPU) – Best match for nearly all use cases.

- Virtual shared graphics acceleration (vSGA) – For light graphical workloads that use only DirectX 9 or OpenGL 2.1 and require the maximum level of consolidation.

- Virtual dedicated graphics acceleration (vDGA) – For heavy graphical workloads that require the maximum level of performance.

With the information in this guide, you can install, configure, and manage your 3D workloads for Horizon 7 and later on vSphere 6.x or 7.x.

This guide was written by Hilko Lantinga, a Staff Architect in End-User-Computing Technical Marketing, VMware, with a focus on 3D, Horizon Windows desktops, and RDSH, Linux, and applications. Previously, he was a senior consultant in VMware Professional Services, leading large-scale EUC deployments in EMEA. Hilko has 20 years of experience in end-user computing.

To comment on this paper, contact VMware End-User-Computing Technical Marketing.


Set up the VM with general settings, as follows, and then further configure according to the type of graphics acceleration you are using.

Note: To open the dialog box for changing VM settings, in the vSphere Web Client, right-click the VM in the inventory, and select Edit Settings.

General Settings for Virtual Machines

Hardware level – The recommended hardware level is the highest that all hosts support, with Version 11 as the minimum.

CPU – The amount of CPU required depends on the usage and should be determined by actual workload. As a starting point, you might use these numbers:

Memory – The amount of memory required depends on the usage and should be determined by actual workload. As a starting point, you might use these numbers:

Virtual network adapter – The recommended virtual network adapter is VMXNET3.

Virtual storage controller – The recommended virtual storage controller is LSI Logic SAS, but the most demanding workloads using local flash-based storage might benefit from VMware Paravirtual.

Other devices – We recommend removing devices that are not used, such as COM and LPT ports, DVD drives, and floppy drives.

Now that you have configured the general settings for the VMs, configure the VM for the type of graphics acceleration.

Virtual Machine Settings for vSGA

Configure the VM as follows if you are using vSGA.

Virtual Machine Settings for vGPU

Configure the VM as follows if you are using vGPU.

Virtual Machine Settings for MxGPU or vDGA

Configure the VM as follows if you are using MxGPU or vDGA.


Virtual shared graphics acceleration (vSGA) allows a GPU to be shared across multiple virtual desktops. It is an attractive solution for users who require the full potential of the GPU’s capability during brief periods. However, vSGA can create bottlenecks, depending on which applications are used, and the resources these applications require from the GPU. vSGA is generally used for knowledge workers and, occasionally, for power users.

With vSGA, the physical GPUs in the host are virtualized and shared across multiple virtual machines (VMs). A vendor driver needs to be installed in the hypervisor. Each VM uses a proprietary VMware vSGA 3D driver that communicates with the vendor driver in the VMware vSphere® host. Drawbacks of vSGA are that applications might need to be recertified to be supported, API support is limited, and support is restricted for the various versions of OpenGL and DirectX.

Important: Some examples of supported vSGA cards for Horizon 7 and later, and vSphere 7, are NVIDIA Tesla M6/M10/M60/P4/P6/P40 cards. For a full list of compatible vSGA cards, see the VMware Virtual Shared Graphics Acceleration Guide.

Virtual shared pass-through graphics acceleration allows a GPU to be shared among multiple users instead of being dedicated to a single user. The difference from vSGA is that the proprietary VMware 3D driver is not used, and most of the graphics card's features are supported.

You need to install the appropriate vendor driver in the guest operating system of the VM, and all graphics commands are passed directly to the GPU without having to be translated by the hypervisor. On the hypervisor, a vSphere Installation Bundle (VIB) is installed, which aids or performs the scheduling. Depending on the card, up to 24 VMs can share a GPU, and some cards have multiple GPUs. Calculating the exact number of desktops or users per GPU depends on the type of card, application requirements, screen resolution, number of displays, and frame rate, measured in frames per second (FPS).

The amount of frame buffer (VRAM) per VM is fixed, and the GPU engines are shared between VMs. AMD has an option to also have a fixed amount of compute, which is called predictable performance.
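Because each VM's frame buffer is fixed, the frame buffer alone sets an upper bound on how many vGPUs fit on one physical GPU; real density can be lower because of vendor per-GPU limits and the factors listed above (application requirements, screen resolution, number of displays, and frame rate). The following Python sketch computes only that bound, and the function names are hypothetical. As assumed example inputs, a 24 GB GPU with a 1 GB profile gives 24, in line with the "up to 24 VMs" figure above, and a hypothetical card with four 8 GB GPUs and a 512 MB profile gives 64 per card.

```python
from __future__ import annotations


def max_vgpus_per_gpu(gpu_frame_buffer_gb: int, profile_frame_buffer_mb: int) -> int:
    """Upper bound on vGPUs per physical GPU from frame buffer alone."""
    if profile_frame_buffer_mb <= 0:
        raise ValueError("profile frame buffer must be positive")
    return (gpu_frame_buffer_gb * 1024) // profile_frame_buffer_mb


def max_vgpus_per_card(gpus_per_card: int, gpu_frame_buffer_gb: int,
                       profile_frame_buffer_mb: int) -> int:
    """Cards with several GPUs divide each GPU's frame buffer independently."""
    return gpus_per_card * max_vgpus_per_gpu(gpu_frame_buffer_gb, profile_frame_buffer_mb)


if __name__ == "__main__":
    print(max_vgpus_per_gpu(24, 1024))    # 24 GB GPU, 1 GB profile -> 24
    print(max_vgpus_per_card(4, 8, 512))  # hypothetical four 8 GB GPUs, 512 MB profile -> 64
```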

Virtual shared pass-through technology provides better performance than vSGA and higher consolidation ratios than virtual dedicated graphics acceleration (vDGA). It is a good technology to use for low-, mid-, or even advanced-level engineers and designers, as well as for power users with 3D application requirements. One drawback of shared pass-through is that it might require applications to be recertified for support.

Important: Some examples of supported shared pass-through cards for Horizon 7 and later, and vSphere 7, are NVIDIA Tesla M6/M10/M60/P4/P6/P40/P100/V100/T4 and RTX6000/8000 cards. For a full list of compatible shared pass-through graphics cards, see the VMware Shared Pass-Through Graphics Guide.

vDGA technology provides each user with unrestricted, fully dedicated access to one of the GPUs within the host. Although consolidation and management trade-offs are associated with dedicated access, vDGA offers the highest level of performance for users with the most intensive graphics computing needs.

With vDGA, the hypervisor passes one of the GPUs directly to the individual VM. This technology is also known as GPU pass-through. No special drivers are required in the hypervisor. However, to enable graphics acceleration, the appropriate vendor driver needs to be installed in each guest operating system of the VM. The installation procedures are the same as for physical machines. One drawback of vDGA, however, is the lack of vMotion support.

Important: Examples of supported vDGA cards in Horizon 7 and later, with vSphere 7.x and 6.7 Update 3, are the AMD FirePro, Intel Iris Pro Graphics, and NVIDIA Quadro, Tesla, and RTX models listed near the start of this guide.

For a list of partner servers that are compatible with specific vDGA devices, see the VMware Virtual Dedicated Graphics Acceleration (vDGA) Guide.

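The three types of graphics acceleration compare as follows:

| Feature | vSGA | Virtual shared pass-through (MxGPU or vGPU) | vDGA |
|---|---|---|---|
| Max DirectX version | vSphere 6.5: DirectX 10.0 (SM 4.0); vSphere 7.0: DirectX 10.1 (SM 4.1); vSphere 7.0 U2: DirectX 11.0 (SM 5.0) | All supported versions | All supported versions |
| Max OpenGL version | vSphere 6.5: 3.3; vSphere 7.0 U2: 4.1 | All supported versions | All supported versions |
| Video encoding and decoding | Software | Hardware | Hardware |
| OpenCL or CUDA compute | No | MxGPU: OpenCL only; GRID 1: No; GRID 2: 1:1 only | Yes |
| vMotion support | Yes | Only for vGPU 7+ | No |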

The hardware requirements for graphics acceleration solutions are listed in Table 2.

Table 2: Hardware Requirements for Hardware-Accelerated Graphics

| Component | Description |
|---|---|
| Physical space for graphics cards | Many high-end GPU cards are full height, full length, and double width, with most taking up two slots on the motherboard but using only a single PCIe x16 slot. Verify that the host has enough room internally to hold the chosen GPU card in the appropriate PCIe slot. |
| Host power supply unit (PSU) | Check the power requirements of the GPU to make sure that the PSU is powerful enough and contains the proper power cables to power the GPU. For example, a single NVIDIA K2 GPU can use as much as 225 watts of power and requires either an 8-pin PCIe power cord or a 6-pin PCIe power cord. |
| BIOS | For MxGPU, Single-Root IO Virtualization (SR-IOV) needs to be enabled. For vGPU, Intel Virtualization Technology support for Direct I/O (Intel VT-d) or AMD input–output memory management unit (IOMMU) needs to be enabled. To locate these settings in the server BIOS, contact the hardware vendor. |
| Two display adapters | If the host does not have an extra graphics adapter, VMware recommends that you install an additional low-end display adapter to act as the primary display adapter, because the VMware ESXi™ console display adapter is not available to Xorg. If the GPU is set as the primary adapter, Xorg cannot use the GPU for rendering. If two GPUs are installed, the server BIOS might have an option to select which GPU is primary and which is secondary. |

Office workers and executives fall into the knowledge-worker category, typically using applications such as Microsoft Office, Adobe Photoshop, and other non-specialized end-user applications.

Because the graphical load of these users is expected to be low, consolidation becomes important, which is why these types of users are best matched with one of the following types of graphics acceleration:

Power users consume more complex visual data, but their requirements for manipulation of large data sets and specialized software are less intense than for designers, or they use only viewers like Autodesk DWG TrueView.

Power users are best matched with virtual shared pass-through graphics acceleration (MxGPU or vGPU).

Designers and advanced engineering and scientific users often create and work with large, complex data sets, and require graphics-intensive applications such as 3D design, molecular modeling, and medical diagnostics software from companies such as Dassault Systèmes, Enovia, Siemens NX, and Autodesk.

Designers are best matched with one of the following:

The following diagram summarizes the performance and consolidation profiles of the three types of graphics acceleration.

Figure 1: Consolidation and Performance Overview

This section gives details on how to install and configure the following components for graphics acceleration:

The installation and configuration on the ESXi host varies by graphics-acceleration type.

Installation and Configuration Recommendations for vSGA or vGPU
