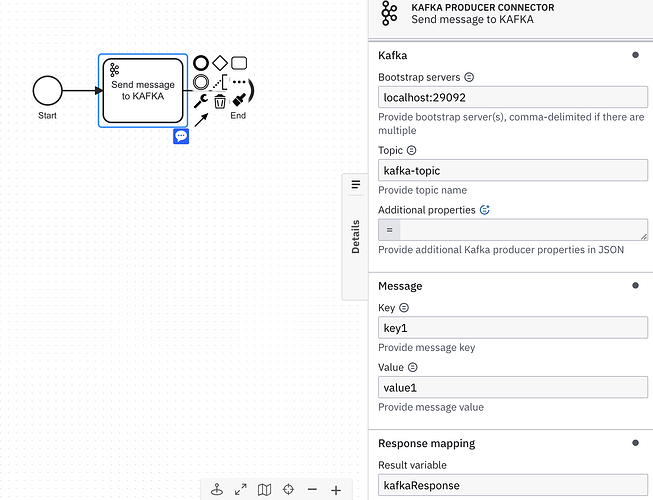

I’m trying to build a demo with Camunda 8 and the out-of-the-box Kafka connector.

I’ve installed the Camunda 8 platform with Docker on macOS, following that doc.

I’ve configured a very simple process like this one, based on the Outbound Kafka connector doc.

When I try to test it, I get this error in the connectors container log:

2023-10-03 07:47:26 2023-10-03T05:47:26.476Z INFO 1 --- [pool-2-thread-8] i.c.c.r.c.outbound.ConnectorJobHandler : Received job 2251799813695077

2023-10-03 07:47:26 2023-10-03T05:47:26.488Z INFO 1 --- [pool-2-thread-8] org.camunda.feel.FeelEngine : Engine created. [value-mapper: CompositeValueMapper(List(org.camunda.feel.impl.JavaValueMapper@34c1534d)), function-provider: io.camunda.connector.feel.FeelConnectorFunctionProvider@6742f53c, clock: SystemClock, configuration: Configuration(false)]

2023-10-03 07:47:26 2023-10-03T05:47:26.552Z INFO 1 --- [pool-2-thread-8] o.a.k.clients.producer.ProducerConfig : ProducerConfig values:

2023-10-03 07:47:26 acks = -1

2023-10-03 07:47:26 auto.include.jmx.reporter = true

2023-10-03 07:47:26 batch.size = 16384

2023-10-03 07:47:26 bootstrap.servers = [localhost:29092]

2023-10-03 07:47:26 buffer.memory = 33554432

2023-10-03 07:47:26 client.dns.lookup = use_all_dns_ips

2023-10-03 07:47:26 client.id = producer-1

2023-10-03 07:47:26 compression.type = none

2023-10-03 07:47:26 connections.max.idle.ms = 540000

2023-10-03 07:47:26 delivery.timeout.ms = 45000

2023-10-03 07:47:26 enable.idempotence = true

2023-10-03 07:47:26 interceptor.classes =

2023-10-03 07:47:26 key.serializer = class org.apache.kafka.common.serialization.StringSerializer

2023-10-03 07:47:26 linger.ms = 0

2023-10-03 07:47:26 max.block.ms = 60000

2023-10-03 07:47:26 max.in.flight.requests.per.connection = 5

2023-10-03 07:47:26 max.request.size = 1048576

2023-10-03 07:47:26 metadata.max.age.ms = 300000

2023-10-03 07:47:26 metadata.max.idle.ms = 300000

2023-10-03 07:47:26 metric.reporters =

2023-10-03 07:47:26 metrics.num.samples = 2

2023-10-03 07:47:26 metrics.recording.level = INFO

2023-10-03 07:47:26 metrics.sample.window.ms = 30000

2023-10-03 07:47:26 partitioner.adaptive.partitioning.enable = true

2023-10-03 07:47:26 partitioner.availability.timeout.ms = 0

2023-10-03 07:47:26 partitioner.class = null

2023-10-03 07:47:26 partitioner.ignore.keys = false

2023-10-03 07:47:26 receive.buffer.bytes = 32768

2023-10-03 07:47:26 reconnect.backoff.max.ms = 1000

2023-10-03 07:47:26 reconnect.backoff.ms = 50

2023-10-03 07:47:26 request.timeout.ms = 30000

2023-10-03 07:47:26 retries = 2147483647

2023-10-03 07:47:26 retry.backoff.ms = 100

2023-10-03 07:47:26 sasl.client.callback.handler.class = null

2023-10-03 07:47:26 sasl.jaas.config = null

2023-10-03 07:47:26 sasl.kerberos.kinit.cmd = /usr/bin/kinit

2023-10-03 07:47:26 sasl.kerberos.min.time.before.relogin = 60000

2023-10-03 07:47:26 sasl.kerberos.service.name = null

2023-10-03 07:47:26 sasl.kerberos.ticket.renew.jitter = 0.05

2023-10-03 07:47:26 sasl.kerberos.ticket.renew.window.factor = 0.8

2023-10-03 07:47:26 sasl.login.callback.handler.class = null

2023-10-03 07:47:26 sasl.login.class = null

2023-10-03 07:47:26 sasl.login.connect.timeout.ms = null

2023-10-03 07:47:26 sasl.login.read.timeout.ms = null

2023-10-03 07:47:26 sasl.login.refresh.buffer.seconds = 300

2023-10-03 07:47:26 sasl.login.refresh.min.period.seconds = 60

2023-10-03 07:47:26 sasl.login.refresh.window.factor = 0.8

2023-10-03 07:47:26 sasl.login.refresh.window.jitter = 0.05

2023-10-03 07:47:26 sasl.login.retry.backoff.max.ms = 10000

2023-10-03 07:47:26 sasl.login.retry.backoff.ms = 100

2023-10-03 07:47:26 sasl.mechanism = GSSAPI

2023-10-03 07:47:26 sasl.oauthbearer.clock.skew.seconds = 30

2023-10-03 07:47:26 sasl.oauthbearer.expected.audience = null

2023-10-03 07:47:26 sasl.oauthbearer.expected.issuer = null

2023-10-03 07:47:26 sasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000

2023-10-03 07:47:26 sasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000

2023-10-03 07:47:26 sasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100

2023-10-03 07:47:26 sasl.oauthbearer.jwks.endpoint.url = null

2023-10-03 07:47:26 sasl.oauthbearer.scope.claim.name = scope

2023-10-03 07:47:26 sasl.oauthbearer.sub.claim.name = sub

2023-10-03 07:47:26 sasl.oauthbearer.token.endpoint.url = null

2023-10-03 07:47:26 security.protocol = PLAINTEXT

2023-10-03 07:47:26 security.providers = null

2023-10-03 07:47:26 send.buffer.bytes = 131072

2023-10-03 07:47:26 socket.connection.setup.timeout.max.ms = 30000

2023-10-03 07:47:26 socket.connection.setup.timeout.ms = 10000

2023-10-03 07:47:26 ssl.cipher.suites = null

2023-10-03 07:47:26 ssl.enabled.protocols = [TLSv1.2, TLSv1.3]

2023-10-03 07:47:26 ssl.endpoint.identification.algorithm = https

2023-10-03 07:47:26 ssl.engine.factory.class = null

2023-10-03 07:47:26 ssl.key.password = null

2023-10-03 07:47:26 ssl.keymanager.algorithm = SunX509

2023-10-03 07:47:26 ssl.keystore.certificate.chain = null

2023-10-03 07:47:26 ssl.keystore.key = null

2023-10-03 07:47:26 ssl.keystore.location = null

2023-10-03 07:47:26 ssl.keystore.password = null

2023-10-03 07:47:26 ssl.keystore.type = JKS

2023-10-03 07:47:26 ssl.protocol = TLSv1.3

2023-10-03 07:47:26 ssl.provider = null

2023-10-03 07:47:26 ssl.secure.random.implementation = null

2023-10-03 07:47:26 ssl.trustmanager.algorithm = PKIX

2023-10-03 07:47:26 ssl.truststore.certificates = null

2023-10-03 07:47:26 ssl.truststore.location = null

2023-10-03 07:47:26 ssl.truststore.password = null

2023-10-03 07:47:26 ssl.truststore.type = JKS

2023-10-03 07:47:26 transaction.timeout.ms = 60000

2023-10-03 07:47:26 transactional.id = null

2023-10-03 07:47:26 value.serializer = class org.apache.kafka.common.serialization.StringSerializer

2023-10-03 07:47:26

2023-10-03 07:47:26 2023-10-03T05:47:26.574Z INFO 1 --- [pool-2-thread-8] o.a.k.clients.producer.KafkaProducer : [Producer clientId=producer-1] Instantiated an idempotent producer.

2023-10-03 07:47:26 2023-10-03T05:47:26.605Z INFO 1 --- [pool-2-thread-8] o.a.k.clients.producer.ProducerConfig : These configurations '[heartbeat.interval.ms, session.timeout.ms, default.api.timeout.ms]' were supplied but are not used yet.

2023-10-03 07:47:26 2023-10-03T05:47:26.606Z INFO 1 --- [pool-2-thread-8] o.a.kafka.common.utils.AppInfoParser : Kafka version: 3.5.1

2023-10-03 07:47:26 2023-10-03T05:47:26.606Z INFO 1 --- [pool-2-thread-8] o.a.kafka.common.utils.AppInfoParser : Kafka commitId: 2c6fb6c54472e90a

2023-10-03 07:47:26 2023-10-03T05:47:26.606Z INFO 1 --- [pool-2-thread-8] o.a.kafka.common.utils.AppInfoParser : Kafka startTimeMs: 1696312046605

2023-10-03 07:47:26 2023-10-03T05:47:26.720Z INFO 1 --- [ad | producer-1] org.apache.kafka.clients.NetworkClient : [Producer clientId=producer-1] Node -1 disconnected.

2023-10-03 07:47:26 2023-10-03T05:47:26.721Z WARN 1 --- [ad | producer-1] org.apache.kafka.clients.NetworkClient : [Producer clientId=producer-1] Connection to node -1 (localhost/127.0.0.1:29092) could not be established. Broker may not be available.

Do you have any idea what is wrong?

How can I investigate the cause of the issue?

Thanks for your help!

Hello my friend!

It appears to be a connection error: the producer cannot reach the broker at localhost:29092.

Have you checked that your Kafka server is actually running?

Is the port really correct (29092)?

Is your Kafka running in a container? If so, have you mapped the container/host ports?
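One quick way to check the first two points is a plain TCP connection attempt against the bootstrap address. This is a minimal sketch assuming Python 3 with only the standard library; `broker_reachable` is a hypothetical helper, not part of Camunda or Kafka. Ideally run it from the same network context as the connector (for example from inside a container on the same Docker network), because `localhost` means something different there than on the Mac itself. A successful connection only shows that something is listening on that port, not that it is a correctly configured Kafka broker.

```python
import socket


def broker_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Hypothetical helper: it only proves that something accepts TCP
    connections on that port; it does not speak the Kafka protocol.
    """
    try:
        # create_connection tries every address the host name resolves to
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and DNS resolution failures
        return False


if __name__ == "__main__":
    # Assumed values taken from the log: bootstrap.servers = [localhost:29092]
    print(broker_reachable("localhost", 29092))
```

If this prints `False` from where the connector runs but `True` from the Mac terminal, the broker is up and the problem is the address the connector uses, not Kafka itself.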

William Robert Alves

Hi @WilliamR.Alves,

Yes, my Kafka server was running in Docker, so you’re right: I had to change the host name.

Thanks, it works now!
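For anyone hitting the same error, a likely explanation (assuming both the connectors runtime and Kafka run as Docker containers): inside the connectors container, `localhost` resolves to that container itself, so `localhost:29092` only works from the Mac host where the port is published. From another container, the bootstrap server usually has to be the Kafka service name on the shared Docker network, or `host.docker.internal:29092` on Docker Desktop if the port is published on the host, and the broker's advertised listener for that address must also be reachable from the container. The exact host name and port depend on your Kafka listener configuration and are assumptions here. The sketch below is a hypothetical Python helper that flags loopback entries in a `bootstrap.servers` value such as the `[localhost:29092]` printed in the log above.

```python
import ipaddress


def loopback_entries(bootstrap_servers: str) -> list[str]:
    """Return the entries of a comma-separated bootstrap.servers value
    whose host is a loopback address.

    Hypothetical helper: inside a container, such entries point at the
    container itself, not at the Docker host or another container.
    """
    flagged = []
    # Accept both "a:1,b:2" and the "[a:1, b:2]" form Kafka prints in its logs
    for entry in bootstrap_servers.strip("[] ").split(","):
        entry = entry.strip()
        if not entry:
            continue
        host = entry.rsplit(":", 1)[0].strip("[]")
        if host == "localhost":
            flagged.append(entry)
            continue
        try:
            if ipaddress.ip_address(host).is_loopback:
                flagged.append(entry)
        except ValueError:
            # A DNS name such as "kafka" or "host.docker.internal": not loopback by itself
            pass
    return flagged
```

For example, `loopback_entries("[localhost:29092]")` returns `['localhost:29092']`, while a service name such as `kafka:9092` returns an empty list.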