Written by R. Luke DuBois

Representing the United States at the 2018 London Design Biennale for the second time, Cooper Hewitt presented

Face Values

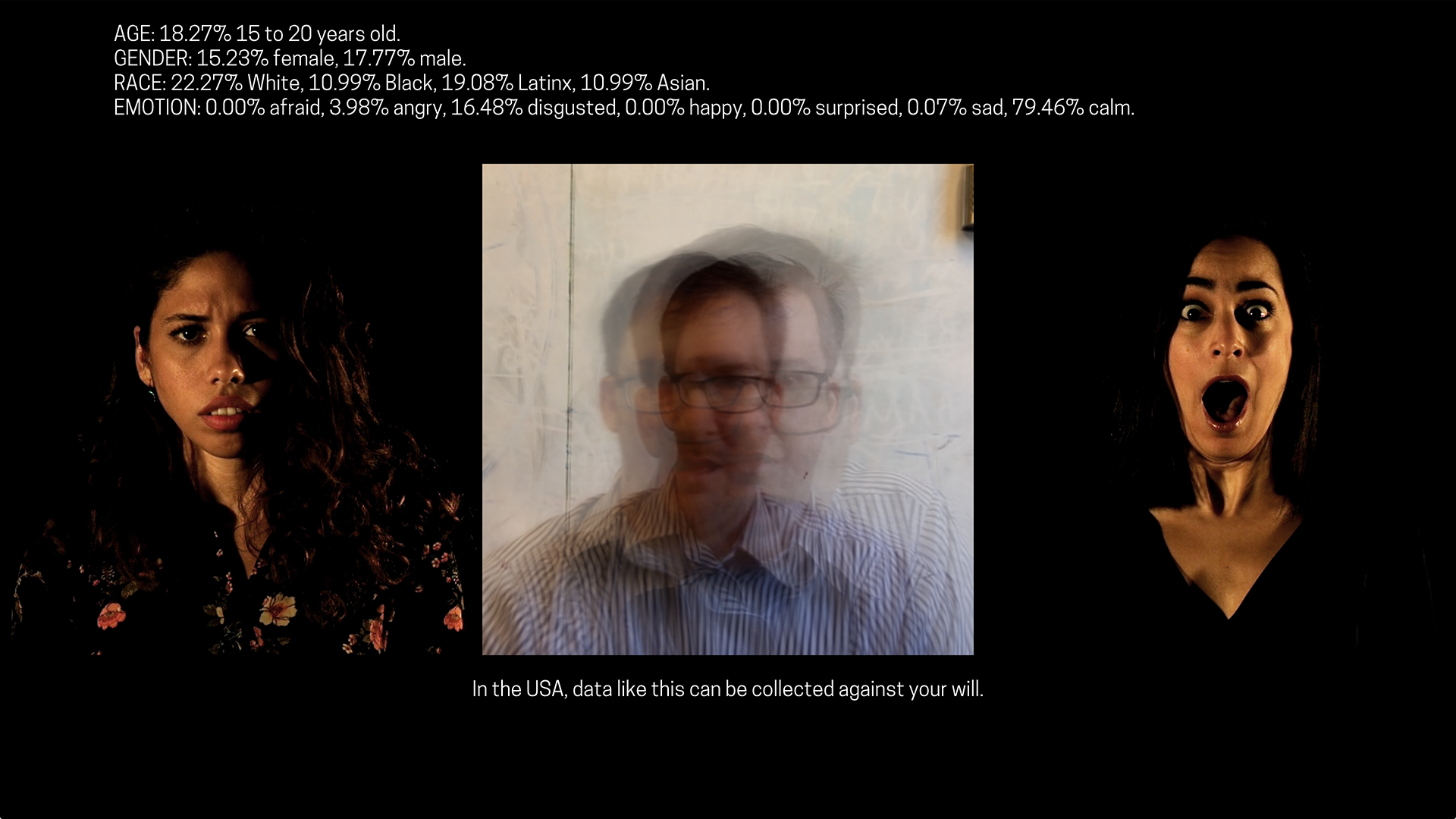

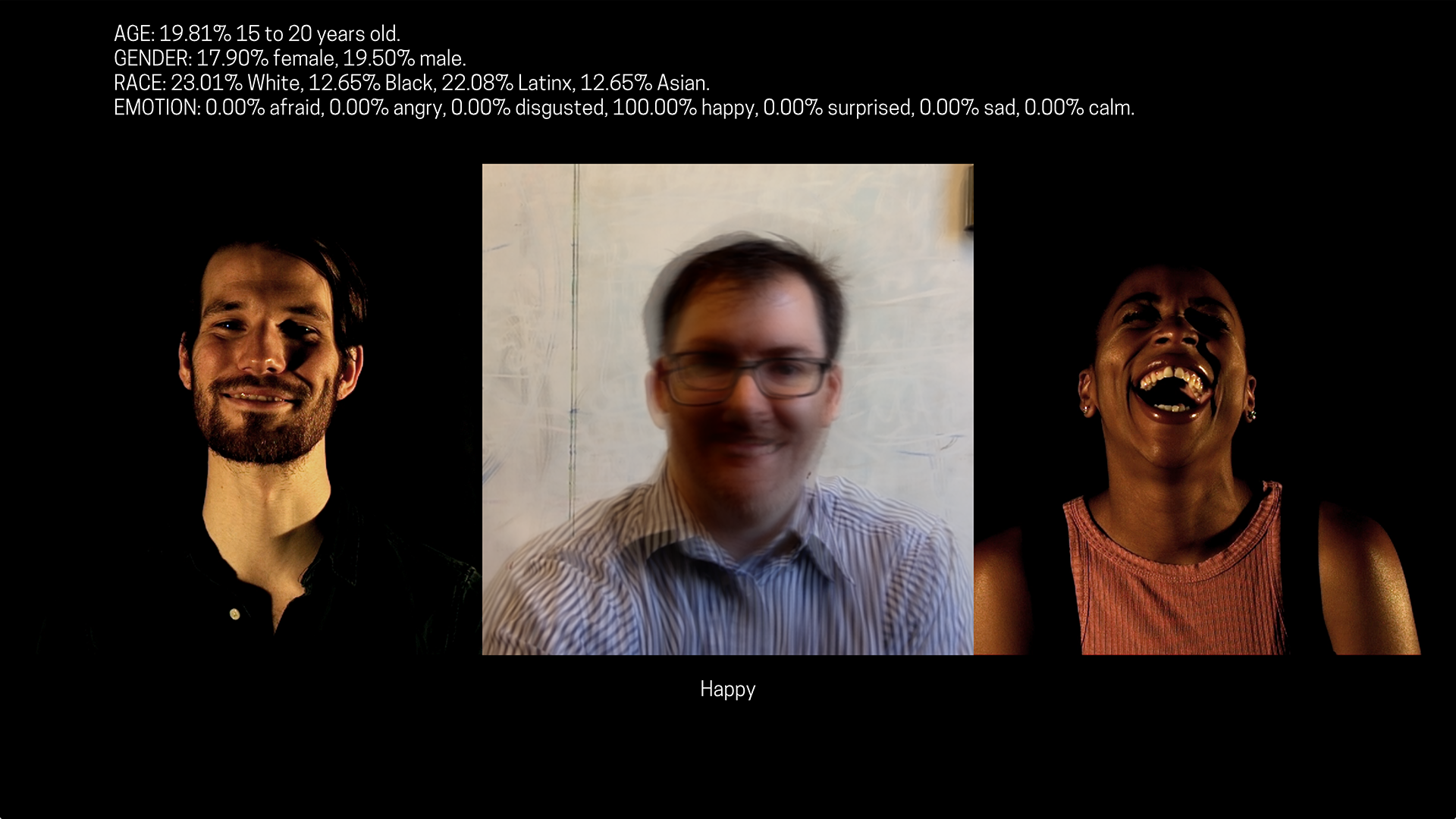

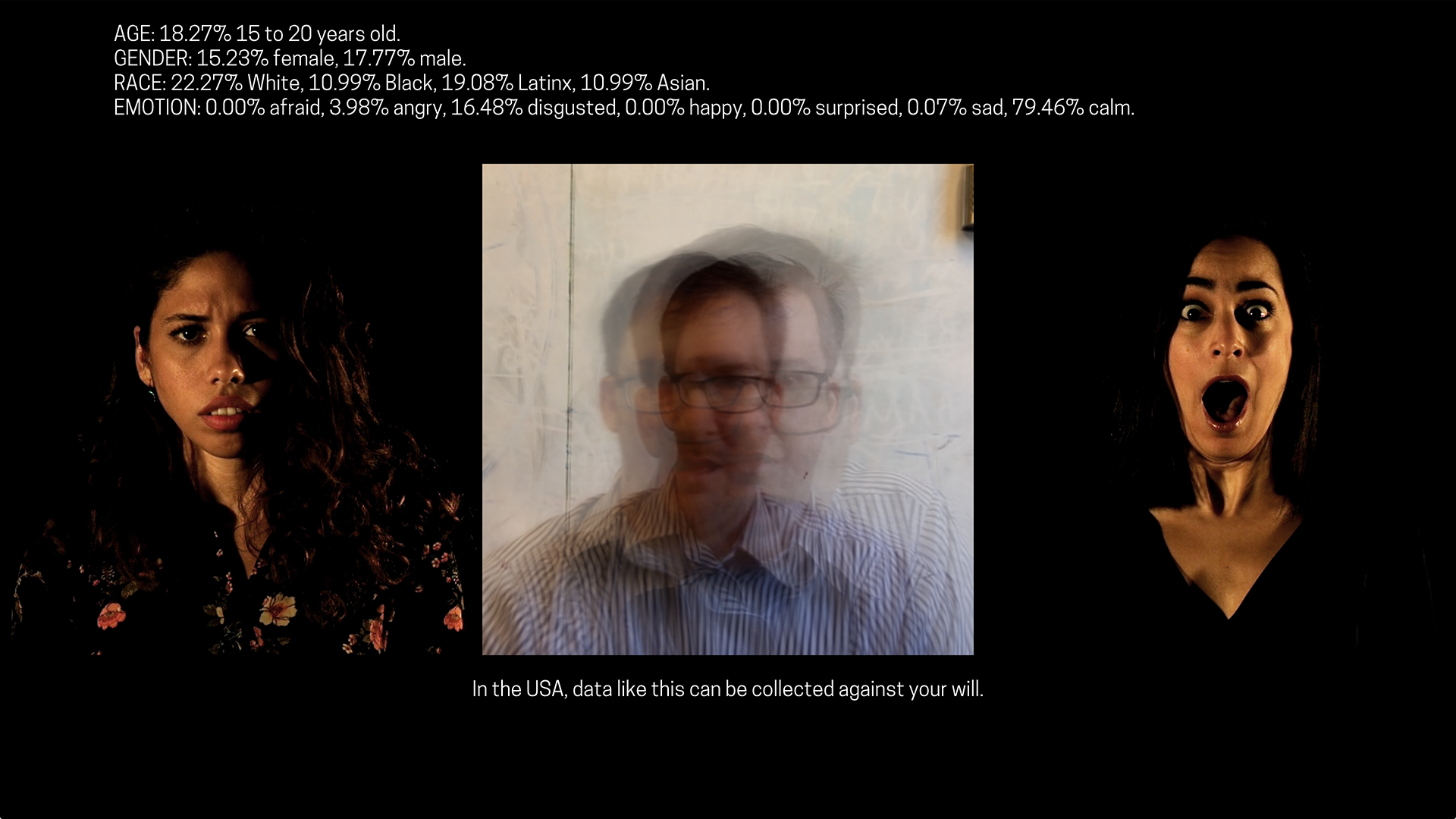

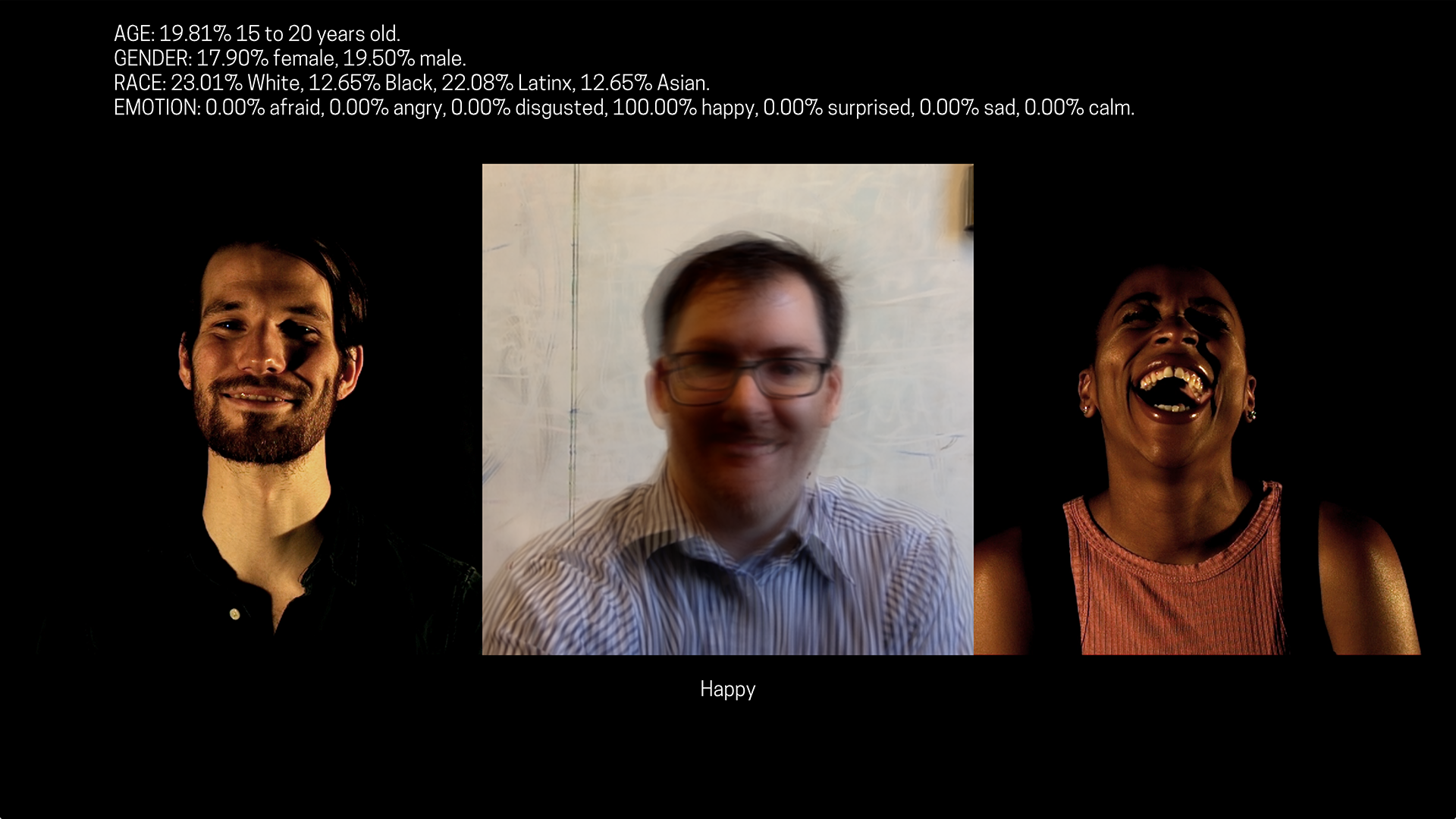

, an immersive installation exploring the pervasive but often hidden role of facial-detection technology in contemporary society. The installation is now being presented in Cooper Hewitt’s Process Lab. Curated by Ellen Lupton, senior curator of contemporary design, the installation features original work by designers R. Luke DuBois, Zachary Lieberman, Jessica Helfand, and Karen Palmer displayed within a digital environment designed by Matter Architecture Practice. Face Values won the Biennale Medal for most inspiring interpretation of the exhibition’s theme of emotional states.

View of R. Luke DuBois’s installation Expression Portrait for

Face Values

at Cooper Hewitt. Installation design by Matter Architecture Practice.

The design brief given to the forty participating countries, cities, and territories asked for spaces that drew attention to the ways in which design impacts human emotion. The design team assembled by Cooper Hewitt decided to turn that prompt on its head, asking: How can human emotion be used to impact design?

We are attuned to recognize the ways in which specific facial expressions can reveal our emotions. Some of these telltale signs are reasonably universal—laughter, for example, does similar things to people’s faces worldwide, as does disgust. However, many emotions impact our faces in culturally specific ways. In the United States, we read a smile that uses only your mouth, and not your eyes, as insincere. But in many other parts of the world, a wide grin is considered impolite. Familiarity gives us the best insights—loved ones read our faces like an open book, using facial cues to recognize sadness and anger faster and more accurately than acquaintances or coworkers ever could.

Over the last ten years, advances in computing have given rise to a variety of software that performs different kinds of facial recognition on digital images, videos, or live camera feeds. These systems claim to accomplish tasks or provide some sort of insight that the computer can give more rapidly, accurately, or inexpensively than a human. Some of these tasks are menial. A setting on your digital camera tells you when someone blinked in a photo. Auto-tagging identifies your friends in photos you post on social media. Other uses are downright innovative. A computer vision algorithm detects a person’s heart rate using ordinary webcams. A computer service purports to infer “deception,” partially through looking at facial cues in videos.

The more informed we are about these systems, however, the less we like them. Blink detection requires the computer to successfully disambiguate between eyes that are open and closed, a system that a Japanese camera manufacturer notably failed to deliver on with a product that consistently tagged Asian faces as “blinking” because of the shape of their eyes. Reading between the lines on social media auto-tagging, it might occur to you that if Facebook knows you were at your friend’s birthday party last Friday, so does the government. If you look carefully at the literature proposing and evaluating video-based heart-rate monitoring, you’ll notice that the systems fail on patients with dark skin color, a fact often passed over as something that requires “further study.” And as any armchair historian of lie detector technology knows, machines that use simple heuristics to detect truthfulness exhibit as much bias and predilection for false positives as humans.

Many (if not most) of these contemporary technologies leverage

machine learning

, a rapidly growing body of computing techniques that most people conflate with artificial intelligence. A.I., a research discipline that encompasses machine learning, is also used by laypersons as a term to describe machines doing things that we, as a culture, consider in that moment to be “human” activities, not “computer” activities. This is a moving target, so an average American in the early 1990s could very well have described things like GPS navigation systems as “artificial intelligence,” even if, from a computer scientist’s perspective, this product does not use A.I. in a formal way.

Machine learning, generally speaking, works through a process of learning and recognition, in a manner deliberately modeled after human cognition. For example, humans learn to recognize cats by seeing lots of cats (in real life or in media) and learning to extrapolate the core features of what makes a cat—whiskers, eyes with slit pupils, tails, etc. We also learn to recognize cats by comparing cats against other things we see and learning to distinguish among them. A cat is not a dog, nor is it a lampshade. Computers “learn” or, more accurately, are trained, through a similar process. They receive a little help from human supervisors, who point out the features to pay attention to in order to recognize, say, types of objects in a picture.

But imagine the following. What if, when we were young, the only cats we saw had gray fur? Later on in life, when encountering an orange tabby cat for the first time, would we recognize it as a cat? Or would we say it was a tiger, or a lion, which looks closer in some ways to a tabby cat than a gray cat? Our dilemma here is that our understanding of a phenomenon had

bias

. Data sets used to train machine learning systems have bias as well, sometimes to extraordinary degrees, depending on who curated the training data and what their goals were. This actual and potential bias is one of the things that makes machine learning difficult to use even in the most controlled situations. When deployed in society, it can be deeply problematic.

Two visitors experience the installation Expression Portrait in Face Values at Cooper Hewitt.

For the

Face Values

exhibition, I developed an interactive artwork that asks a computer to detect human emotion to explore these questions. Titled the Expression Portrait, the piece takes the form of an interactive photo booth, similar to the kind you’d find in an amusement park. There’s a chair, a screen, a loudspeaker, a video camera, and a big red button. When you press the button, on-screen imagery and a voice from the loudspeaker encourage you to take a seat and position yourself in front of the video camera for your “emotional self-portrait.” The computer picks a random emotion from seven possibilities of fear, anger, calmness, disgust, happiness, sadness, and surprise, and asks you to act that way for thirty seconds. During this time, a pair of videos shows actors mimicking those emotions, while the loudspeaker defines the emotion and music plays that’s meant to evoke the particular feeling.

At the end of the thirty seconds, the computer shows you an average image, akin to a time-lapse photograph of your face. It then tells you how well you did at your task, giving you the computer’s estimate of your dominant emotion, as well as its best guess as to your age, race, and gender. It then tells you that in the United States, data like this is collected all the time without your consent. To bring that point home, a second screen shows a running slideshow of recent portraits taken with the machine.

Screen captures of R. Luke DuBois’s Expression Portrait.

Screen captures of R. Luke DuBois’s Expression Portrait.

The installation analyzes your face using a machine learning algorithm trained on a number of public data sets of video and images developed in the last five years at universities and regularly used in machine learning systems to recognize emotion, age, race, and gender. For emotion, I considered two large data sets: the Ryerson Audio-Visual Database of Speech and Song (RAVDESS) and AffectNet, developed at the University of Denver. For age estimation, I used the IMDB-WIKI database developed at the Swiss Federal Institute of Technology in Zurich (ETH-Zurich). For race and gender, I used the Chicago Face Database developed at the University of Chicago.

As nearly everyone who interacted with the piece pointed out, it was often wrong. In the context of the installation, it was kind of funny and lighthearted. But in the context of how these technologies are used in society, it’s very troubling.

The Ryerson emotion data set uses video files of twenty-four young, mostly white drama students at that institution “overacting” the required emotions. The actors in the “fear” videos look literally terrified. The “sadness” videos involve tears. The “anger” videos have lots of scrunched-up faces, and so on. On the opposite side of the spectrum, the AffectNet researchers sourced over one million still images of faces discovered by searching for emotion keywords on Google, Bing, and other search engines. The bias factor here is the bias factor of the search engine, which discovers images from celebrity Instagram feeds, movie posters, and stock photography far more regularly than they ever find examples of “regular” people displaying emotions. The upshot of this in the installation was that people really had to overreact to the emotional prompt for the computer to recognize it. An open mouth was almost a prerequisite to show surprise, for example, and trying to look sufficiently angry for the machine to notice was, in itself, infuriating.

The IMDB-WIKI database, by a similar token, uses the photos of celebrities and notable persons to create a data set that correlates faces with human age. This is a pretty ingenious way of curating a data set, as the database creators could reasonably assume that both IMDB and Wikipedia have correct birthdays for the people in question. The problem here, when used against ordinary people (with ordinary lighting and ordinary makeup), is that the data set skews people older. Biennale visitors in London in their twenties were shocked to find that the computer thought they were in their fifties. I had to remind them that the computer wasn’t comparing them to average people at all, but to celebrities like Julia Roberts and George Clooney.

The race and gender data set, collected by professionally photographing 158 representative Chicagoans in controlled conditions, poses an alternate dilemma. It classifies its subjects according to a binary and cisgender understanding of humanity, assuming that we are either male or female, and also adheres to a culturally specific definition of race: white, black, Latinx, or Asian, which falls apart in our modern, global, multiracial world. Londoners of South Asian descent found themselves recognized as Latinx by the system, while visitors who had freckles—a perfectly common trait among people from pretty much anywhere on the planet—were almost always classified by the computer as white, even as they laughed and told me afterwards that their parents were from Jamaica, Ghana, or Bhutan.

Unfortunately, while the installation was intended to be fun, this is no laughing matter. As my colleagues Dr. Kate Crawford and Meredith Whittaker, the codirectors of the A.I. Now Institute at NYU, point out, artificial intelligence is the core ethical and social concern for human society’s relationship with technology in the twenty-first century. In her excellent, visually annotated essay “The Anatomy of an A.I. System,” Dr. Crawford walks readers through what goes into these technologies, using the Amazon Echo—voice recognition software—as an example. The takeaway is incredibly troubling, and these devices and services are rapidly becoming ubiquitous.

So I ask all of you to do me a favor. The next time a device or a piece of software comes along promising to make your life easier by recognizing you in some way, don’t take it at face value.

R. Luke DuBois explores temporal, verbal, and visual structures through music, art, and technology. He is the director of the Brooklyn Experimental Media Center at the NYU Tandon School of Engineering, where he and his students explore the implications of new technologies for individuals and society. His work expands the limits of portraiture in the digital age by linking human identity to data and social networks.

Face Values

is on view at Cooper Hewitt through May 17, 2020.

This article was originally published in the Winter 2018 issue of

Design Journal

, Cooper Hewitt’s biannual magazine.

This design is spectacular! You obviously know how to keep a reader entertained. Between your wit and your videos, I was almost moved to start my own blog (well, almost…HaHa!) Great job. I really enjoyed what you had to say, and more than that, how you presented it. Too cool!

I¡¦m no longer sure where you’re getting your information, however good topic. I must spend a while learning much more or figuring out more. Thanks for magnificent info I was in search of this information for my mission.

Really enjoyed this blog post, can I set it up so I receive an update sent in an email when you publish a fresh update?