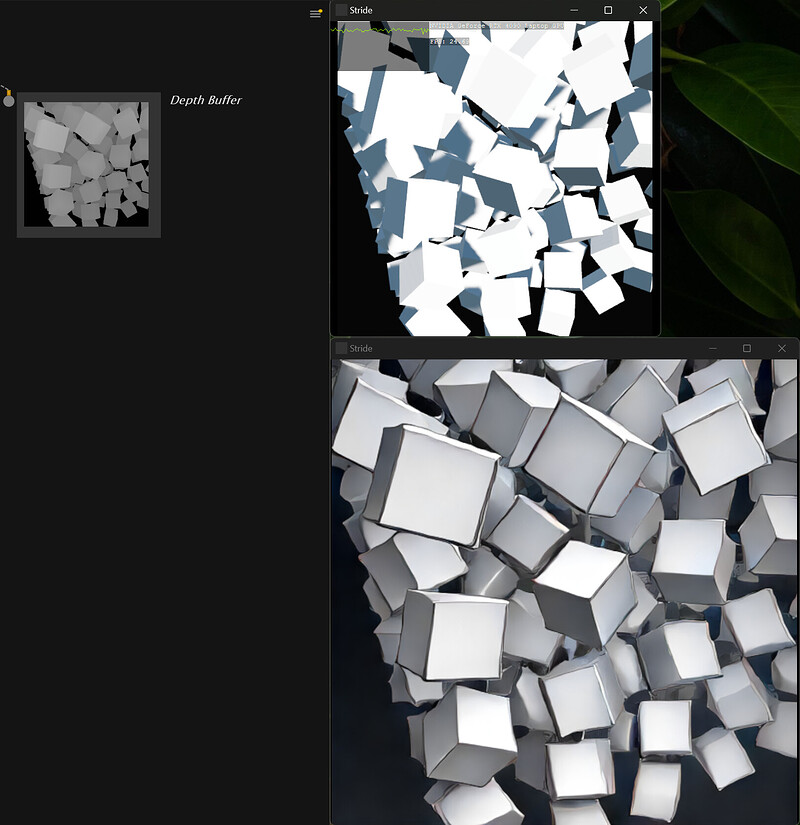

Exploring multithreading and running Python in a background thread could improve the experience and would make it possible to run the vvvv visuals at a different frame rate than the Python code.
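To illustrate the idea of decoupled frame rates, here is a minimal pure-Python sketch. It is not how VL.PythonNET works internally; `LatestValueWorker`, `slow_inference` and `run_render_loop` are hypothetical names made up for this example. A slow job runs in a background thread while a simulated 60 fps loop only ever reads the newest available result.

```python
import threading
import time


class LatestValueWorker:
    """Runs a slow function in a background thread and keeps only its newest result."""

    def __init__(self, compute):
        self._compute = compute
        self._lock = threading.Lock()
        self._latest = None
        self._count = 0
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()

    def _run(self):
        while not self._stop.is_set():
            result = self._compute()  # slow step, e.g. model inference
            with self._lock:
                self._latest = result
                self._count += 1

    def latest(self):
        # Only holds the lock briefly; returns (newest result, results produced so far).
        with self._lock:
            return self._latest, self._count

    def stop(self):
        self._stop.set()
        self._thread.join()


def slow_inference():
    time.sleep(0.05)  # stand-in for a workload that manages roughly 20 results per second
    return time.perf_counter()


def run_render_loop(worker, frames=30, frame_time=1 / 60):
    """Simulated 60 fps render loop that never waits for the worker."""
    seen = []
    for _ in range(frames):
        _, count = worker.latest()
        seen.append(count)
        time.sleep(frame_time)
    return seen
```

The render loop reuses the last result until a new one arrives, so it keeps its own pace. In real use the benefit depends on the Python workload releasing the GIL (as sleeping, I/O and most GPU calls do), which is an assumption here.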

Also, vvvv’s node factory feature could be used to automatically import Python scripts or libraries and build a node set for them. For example, you could get the complete PyTorch library as nodes for high-performance data manipulation on the GPU.
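As a rough sketch of the Python side of such an import, the standard `inspect` module can list a module's public functions and their parameters, which is the kind of metadata a node factory would need. `describe_module` and the dictionary layout are hypothetical and not part of vvvv or VL.PythonNET.

```python
import importlib
import inspect


def describe_module(module_name):
    """Return one description per public function defined in the module."""
    module = importlib.import_module(module_name)
    nodes = []
    for name, fn in inspect.getmembers(module, inspect.isfunction):
        if name.startswith("_") or fn.__module__ != module.__name__:
            continue  # skip private helpers and functions imported from elsewhere
        try:
            signature = inspect.signature(fn)
        except (TypeError, ValueError):
            continue  # no introspectable signature
        nodes.append({
            "node": name,
            "inputs": list(signature.parameters),
            "doc": (inspect.getdoc(fn) or "").split("\n")[0],
        })
    return nodes
```

Calling `describe_module("statistics")` yields entries such as a `mean` node with a single `data` input. Note that `inspect.isfunction` only finds functions written in Python, so a library whose operations are mostly compiled builtins would need extra handling.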

Currently, I do not intend to offer it for free or as open-source. The library will be available under a commercial license. However, an affordable hobbyist/personal use license will be available in a few months.

That’s it for now. I’ll post updates here when something new happens. If you have any questions or ideas, add them here. For issues or problems, please create a new thread or write in the Element channel.

Guys, I will never tire of saying that this is fascinatingly awesome.

This is one of the best things to happen to VVVV in years.

But I have a big request. Although I find neural networks and especially ComfyUI interesting, and the community is interested in them too, can you specifically beta test VL.PythonNET? I have some experimental Python scripts that I cannot reproduce in VVVV and I would like to try to run them.

> Although I find neural networks and especially ComfyUI interesting, and the community is interested in them too, can you specifically beta test VL.PythonNET?

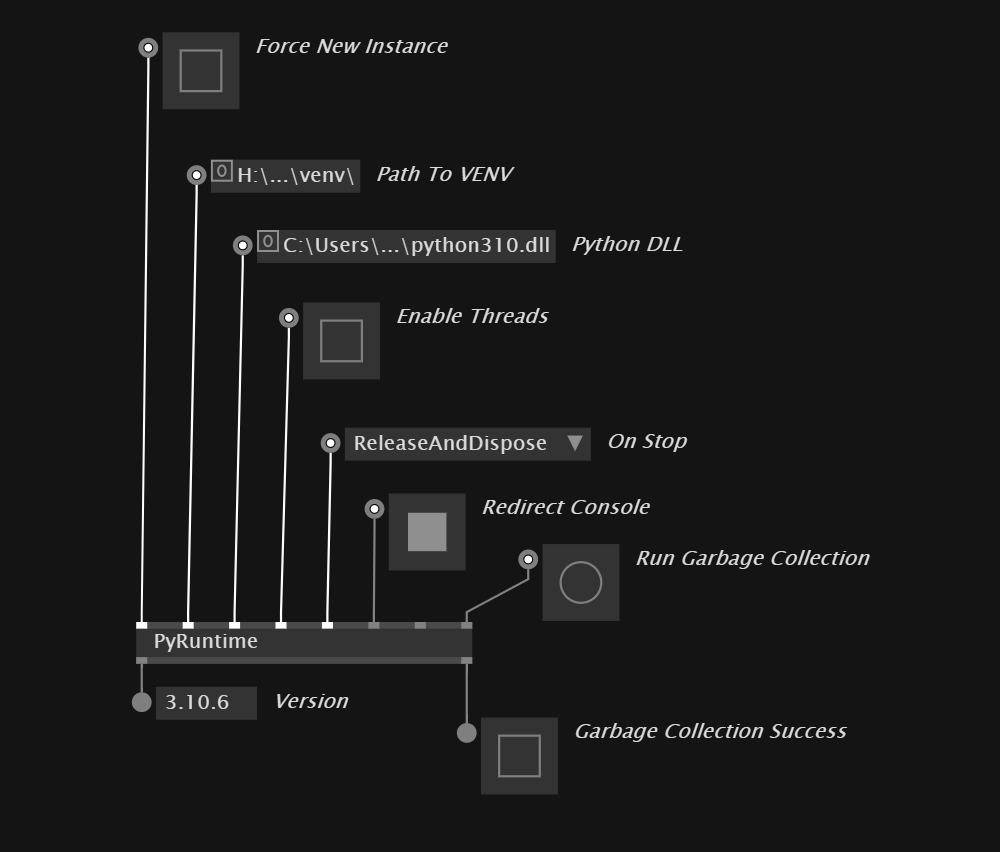

Yes, of course, VL.PythonNET is the core of this development. You do not need to use any neural network; you can run any Python code, as long as you either create a venv with the right dependencies or your machine's Python installation already has everything the script needs.
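For the venv route, a minimal sketch using Python's standard `venv` module could look like this. `create_env` is a hypothetical helper, and how VL.PythonNET is pointed at the resulting environment is not covered here.

```python
import os
import subprocess
import venv
from pathlib import Path


def create_env(env_dir, packages=(), with_pip=True):
    """Create a virtual environment and return the path to its interpreter."""
    env_dir = Path(env_dir)
    venv.create(env_dir, with_pip=with_pip)
    # Windows puts the interpreter in Scripts\, other platforms in bin/.
    if os.name == "nt":
        python = env_dir / "Scripts" / "python.exe"
    else:
        python = env_dir / "bin" / "python"
    if packages:
        # Install the script's dependencies into the new environment only.
        subprocess.run([str(python), "-m", "pip", "install", *packages], check=True)
    return python
```

For example, `create_env("my-env", ["numpy"])` would create the environment and install NumPy into it; the package list is just a placeholder for whatever your script imports.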

No, currently, I do not intend to offer it for free or as open-source. The library will be available under a commercial license. However, an affordable hobbyist/personal use license will be available in a few months.

EDIT: I’ve added a licensing section in the text above.

I can officially confirm that this is a game changer and an exceptional addition to the vvvv armada.

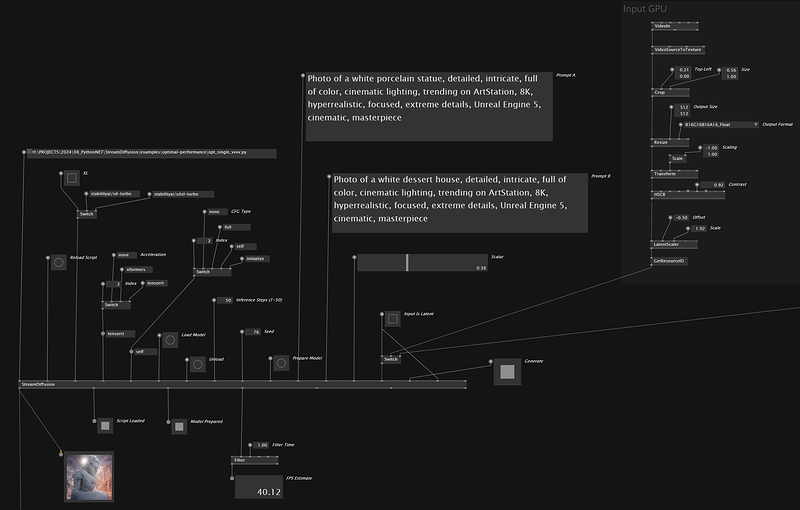

StreamDiffusion can now use all SD 2.1 ControlNets with the sd-turbo model, including TensorRT acceleration.

Because ControlNet is another network that needs to be evaluated, the performance impact is about 40%: it went from 45 fps to about 25 fps on my laptop 4090 GPU. A desktop 4090 GPU could reach 40-60 fps.
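To put the laptop numbers in per-frame terms, here is a small worked calculation; the helper names are made up for this example. Going from 45 fps to 25 fps means about 44% fewer frames per second, or roughly 17.8 ms of extra work per frame.

```python
def frame_time_ms(fps):
    """Time spent on a single frame, in milliseconds."""
    return 1000.0 / fps


def controlnet_cost(fps_before, fps_after):
    """Return (extra milliseconds per frame, relative drop in frame rate)."""
    added_ms = frame_time_ms(fps_after) - frame_time_ms(fps_before)
    drop = 1.0 - fps_after / fps_before
    return added_ms, drop


added_ms, drop = controlnet_cost(45, 25)
print(f"{added_ms:.1f} ms extra per frame, {drop:.0%} lower frame rate")
```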

Yes, I have looked at it. It is nice for getting the app set up, but it would need some modification to work with vvvv. I am already on their Discord and will talk with the developers to evaluate options when I have more time.

But the apps on there are mostly not real-time, and my interest is mainly in high-performance real-time AI projects. I don’t see a big benefit in having non-real-time standalone apps in vvvv. But if you have use cases that you couldn’t do otherwise, please let me know.

As mentioned already, this is bringing gamma to absolutely new heights, tapping into this huge ecosystem.

After I updated to the latest and definitely easier-to-install VL.StreamDiffusion, I ran into a performance issue.

The FPS keeps dropping over time. For instance, the example patch starts at about 27 fps when it initializes (fairly good for a laptop equipped with a 3070), but after a while (less than 10 minutes) it drops down to 9 fps and, unsurprisingly, crashes at some point.