|

SSL 2.0

Unsupported

Unsupported

Unsupported

SSL 3.0

Unsupported

Unsupported

Unsupported

TLS 1.0

No

[1]

No

[1]

Maybe

[2]

TLS 1.1

No

[1]

No

[1]

Maybe

[2]

TLS 1.2

TLS 1.3

N/A

[3]

N/A

[3]

N/A

[3]

Disabled by default, but can be enabled in the server configuration.

Some internal clients, such as the LDAP client.

TLS 1.3 is still under development.

The following list of enabled cipher suites of OpenShift Container Platform’s server and

oc

client are sorted in preferred order:

TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305

TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305

TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256

TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256

TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384

TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256

TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA256

TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA

TLS_ECDHE_ECDSA_WITH_AES_256_CBC_SHA

TLS_ECDHE_RSA_WITH_AES_128_CBC_SHA

TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA

TLS_RSA_WITH_AES_128_GCM_SHA256

TLS_RSA_WITH_AES_256_GCM_SHA384

TLS_RSA_WITH_AES_128_CBC_SHA

TLS_RSA_WITH_AES_256_CBC_SHA

Chapter 2. Infrastructure Components

2.1. Kubernetes Infrastructure

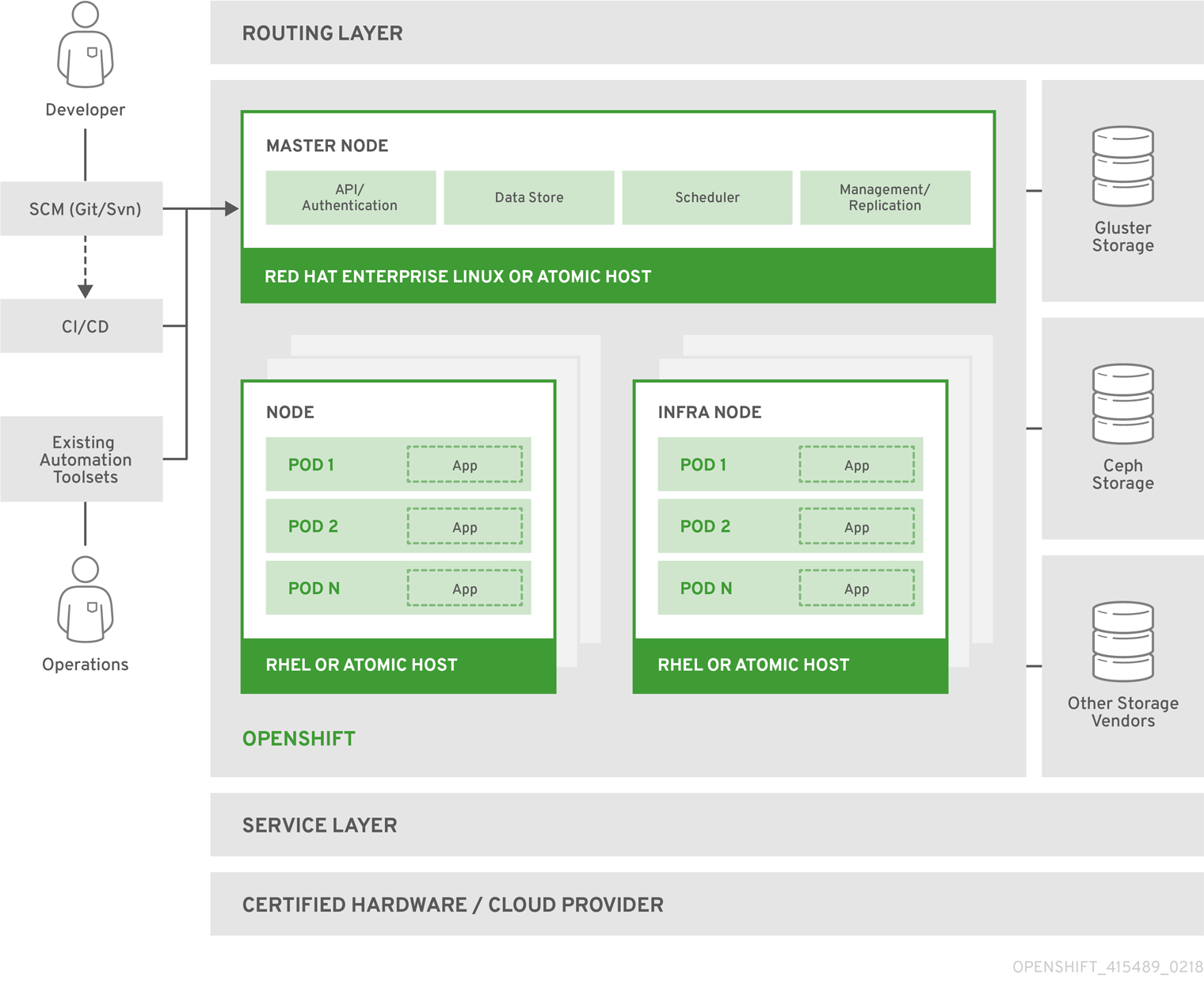

Within OpenShift Container Platform, Kubernetes manages containerized applications across a set of containers or hosts and provides mechanisms for deployment, maintenance, and application-scaling. The container runtime packages, instantiates, and runs containerized applications. A Kubernetes cluster consists of one or more masters and a set of nodes.

You can optionally configure your masters for

high availability

(HA) to ensure that the cluster has no single point of failure.

OpenShift Container Platform uses Kubernetes 1.11 and Docker 1.13.1.

The master is the host or hosts that contain the control plane components, including the API server, controller manager server, and etcd. The master manages

nodes

in its Kubernetes cluster and schedules

pods

to run on those nodes.

Table 2.1. Master Components

|

Component

|

Description

|

|

API Server

The Kubernetes API server validates and configures the data for pods, services, and replication controllers. It also assigns pods to nodes and synchronizes pod information with service configuration.

etcd stores the persistent master state while other components watch etcd for changes to bring themselves into the desired state. etcd can be optionally configured for high availability, typically deployed with 2n+1 peer services.

Controller Manager Server

The controller manager server watches etcd for changes to replication controller objects and then uses the API to enforce the desired state. Several such processes create a cluster with one active leader at a time.

HAProxy

Optional, used when configuring

highly-available masters

with the

native

method to balance load between API master endpoints. The

cluster installation process

can configure HAProxy for you with the

native

method. Alternatively, you can use the

native

method but pre-configure your own load balancer of choice.

|

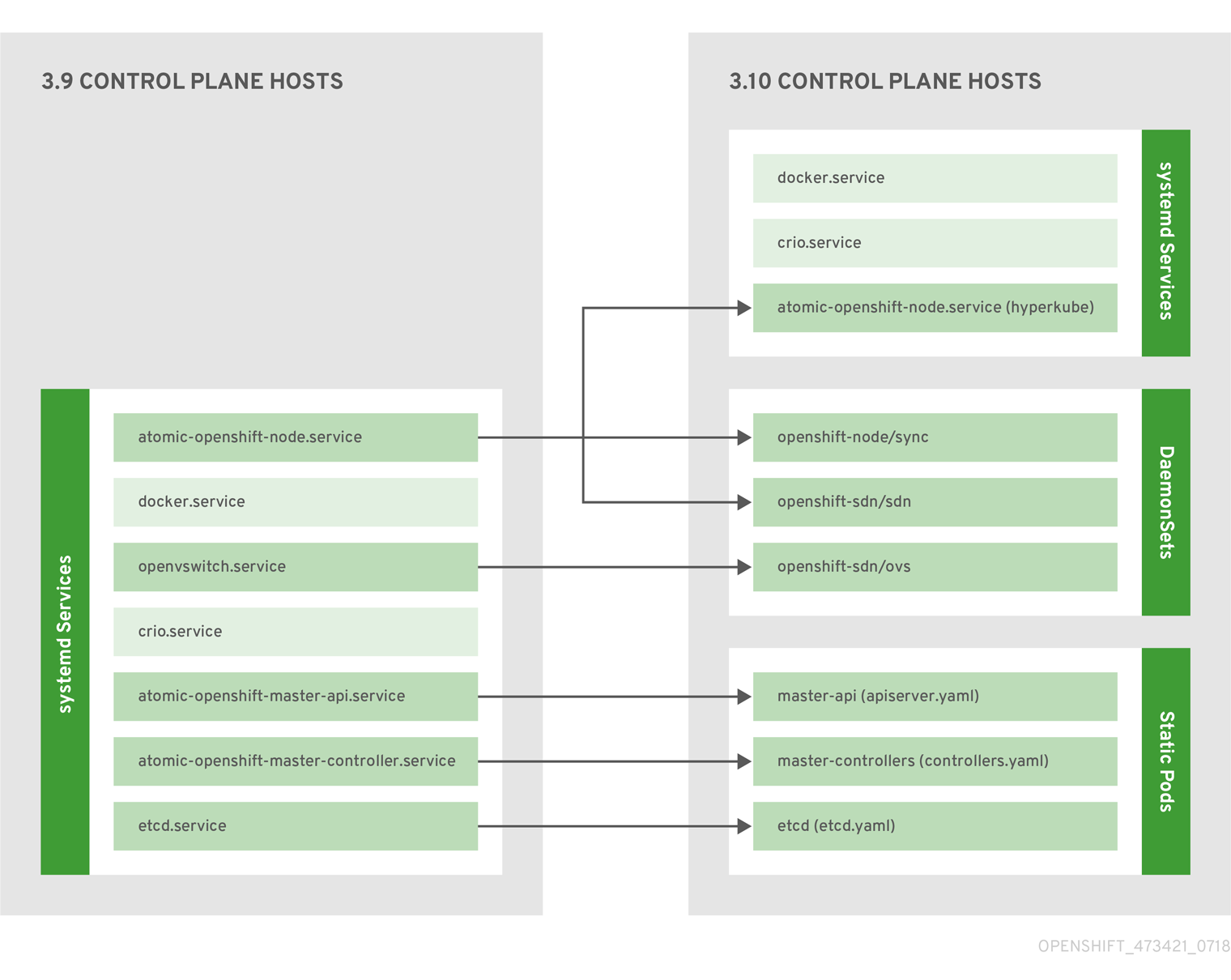

2.1.2.1. Control Plane Static Pods

The core control plane components, the API server and the controller manager components, run as

static pods

operated by the kubelet.

For masters that have etcd co-located on the same host, etcd is also moved to static pods. RPM-based etcd is still supported on etcd hosts that are not also masters.

In addition, the node components

openshift-sdn

and

openvswitch

are now run using a DaemonSet instead of a

systemd

service.

Even with control plane components running as static pods, master hosts still source their configuration from the

/etc/origin/master/master-config.yaml

file, as described in the

Master and Node Configuration

topic.

Startup Sequence Overview

Hyperkube

is a binary that contains all of Kubernetes (

kube-apiserver

,

controller-manager

,

scheduler

,

proxy

, and

kubelet

). On startup, the

kubelet

creates the

kubepods.slice

. Next, the

kubelet

creates the QoS-level slices

burstable.slice

and

best-effort.slice

inside the

kubepods.slice

. When a pod starts, the

kubelet

creats a pod-level slice with the format

pod<UUID-of-pod>.slice

and passes that path to the runtime on the other side of the Container Runtime Interface (CRI). Docker or CRI-O then creates the container-level slices inside the pod-level slice.

Mirror Pods

The kubelet on master nodes automatically creates

mirror pods

on the API server for each of the control plane static pods so that they are visible in the cluster in the

kube-system

project. Manifests for these static pods are installed by default by the

openshift-ansible

installer, located in the

/etc/origin/node/pods

directory on the master host.

These pods have the following

hostPath

volumes defined:

/etc/origin/master

Contains all certificates, configuration files, and the

admin.kubeconfig

file.

/var/lib/origin

Contains volumes and potential core dumps of the binary.

/etc/origin/cloudprovider

Contains cloud provider specific configuration (AWS, Azure, etc.).

/usr/libexec/kubernetes/kubelet-plugins

Contains additional third party volume plug-ins.

/etc/origin/kubelet-plugins

Contains additional third party volume plug-ins for system containers.

The set of operations you can do on the static pods is limited. For example:

$ oc logs master-api-<hostname> -n kube-system

returns the standard output from the API server. However:

$ oc delete pod master-api-<hostname> -n kube-system

will not actually delete the pod.

As another example, a cluster administrator might want to perform a common operation, such as increasing the

loglevel

of the API server to provide more verbose data if a problem occurs. You must edit the

/etc/origin/master/master.env

file, where the

--loglevel

parameter in the

OPTIONS

variable can be modified, because this value is passed to the process running inside the container. Changes require a restart of the process running inside the container.

Restarting Master Services

To restart control plane services running in control plane static pods, use the

master-restart

command on the master host.

To restart the master API:

# master-restart api

To restart the controllers:

# master-restart controllers

To restart etcd:

# master-restart etcd

Viewing Master Service Logs

To view logs for control plane services running in control plane static pods, use the

master-logs

command for the respective component:

# master-logs api api

# master-logs controllers controllers

# master-logs etcd etcd

2.1.2.2. High Availability Masters

You can optionally configure your masters for high availability (HA) to ensure that the cluster has no single point of failure.

To mitigate concerns about availability of the master, two activities are recommended:

A

runbook

entry should be created for reconstructing the master. A runbook entry is a necessary backstop for any highly-available service. Additional solutions merely control the frequency that the runbook must be consulted. For example, a cold standby of the master host can adequately fulfill SLAs that require no more than minutes of downtime for creation of new applications or recovery of failed application components.

Use a high availability solution to configure your masters and ensure that the cluster has no single point of failure. The

cluster installation documentation

provides specific examples using the

native

HA method and configuring HAProxy. You can also take the concepts and apply them towards your existing HA solutions using the

native

method instead of HAProxy.

In production OpenShift Container Platform clusters, you must maintain high availability of the API Server load balancer. If the API Server load balancer is not available, nodes cannot report their status, all their pods are marked dead, and the pods' endpoints are removed from the service.

In addition to configuring HA for OpenShift Container Platform, you must separately configure HA for the API Server load balancer. To configure HA, it is much preferred to integrate an enterprise load balancer (LB) such as an F5 Big-IP™ or a Citrix Netscaler™ appliance. If such solutions are not available, it is possible to run multiple HAProxy load balancers and use Keepalived to provide a floating virtual IP address for HA. However, this solution is not recommended for production instances.

When using the

native

HA method with HAProxy, master components have the following availability:

Table 2.2. Availability Matrix with HAProxy

|

Role

|

Style

|

Notes

|

|

Active-active

Fully redundant deployment with load balancing. Can be installed on separate hosts or collocated on master hosts.

API Server

Active-active

Managed by HAProxy.

Controller Manager Server

Active-passive

One instance is elected as a cluster leader at a time.

HAProxy

Active-passive

Balances load between API master endpoints.

While clustered etcd requires an odd number of hosts for quorum, the master services have no quorum or requirement that they have an odd number of hosts. However, since you need at least two master services for HA, it is common to maintain a uniform odd number of hosts when collocating master services and etcd.

A node provides the runtime environments for containers. Each node in a Kubernetes cluster has the required services to be managed by the master. Nodes also have the required services to run pods, including the container runtime, a kubelet, and a service proxy.

OpenShift Container Platform creates nodes from a cloud provider, physical systems, or virtual systems. Kubernetes interacts with

node objects

that are a representation of those nodes. The master uses the information from node objects to validate nodes with health checks. A node is ignored until it passes the health checks, and the master continues checking nodes until they are valid. The

Kubernetes documentation

has more information on node statuses and management.

Administrators can

manage nodes

in an OpenShift Container Platform instance using the CLI. To define full configuration and security options when launching node servers, use

dedicated node configuration files

.

See the

cluster limits

section for the recommended maximum number of nodes.

Each node has a kubelet that updates the node as specified by a container manifest, which is a YAML file that describes a pod. The kubelet uses a set of manifests to ensure that its containers are started and that they continue to run.

A container manifest can be provided to a kubelet by:

A file path on the command line that is checked every 20 seconds.

An HTTP endpoint passed on the command line that is checked every 20 seconds.

The kubelet watching an etcd server, such as

/registry/hosts/$(hostname -f)

, and acting on any changes.

The kubelet listening for HTTP and responding to a simple API to submit a new manifest.

Each node also runs a simple network proxy that reflects the services defined in the API on that node. This allows the node to do simple TCP and UDP stream forwarding across a set of back ends.

2.1.3.3. Node Object Definition

The following is an example node object definition in Kubernetes:

apiVersion: v1 1

kind: Node 2

metadata:

creationTimestamp: null

labels: 3

kubernetes.io/hostname: node1.example.com

name: node1.example.com 4

spec:

externalID: node1.example.com 5

status:

nodeInfo:

bootID: ""

containerRuntimeVersion: ""

kernelVersion: ""

kubeProxyVersion: ""

kubeletVersion: ""

machineID: ""

osImage: ""

systemUUID: ""

-

1

-

apiVersion

defines the API version to use.

kind

set to

Node

identifies this as a definition for a node object.

metadata.labels

lists any

labels

that have been added to the node.

metadata.name

is a required value that defines the name of the node object. This value is shown in the

NAME

column when running the

oc get nodes

command.

spec.externalID

defines the fully-qualified domain name where the node can be reached. Defaults to the

metadata.name

value when empty.

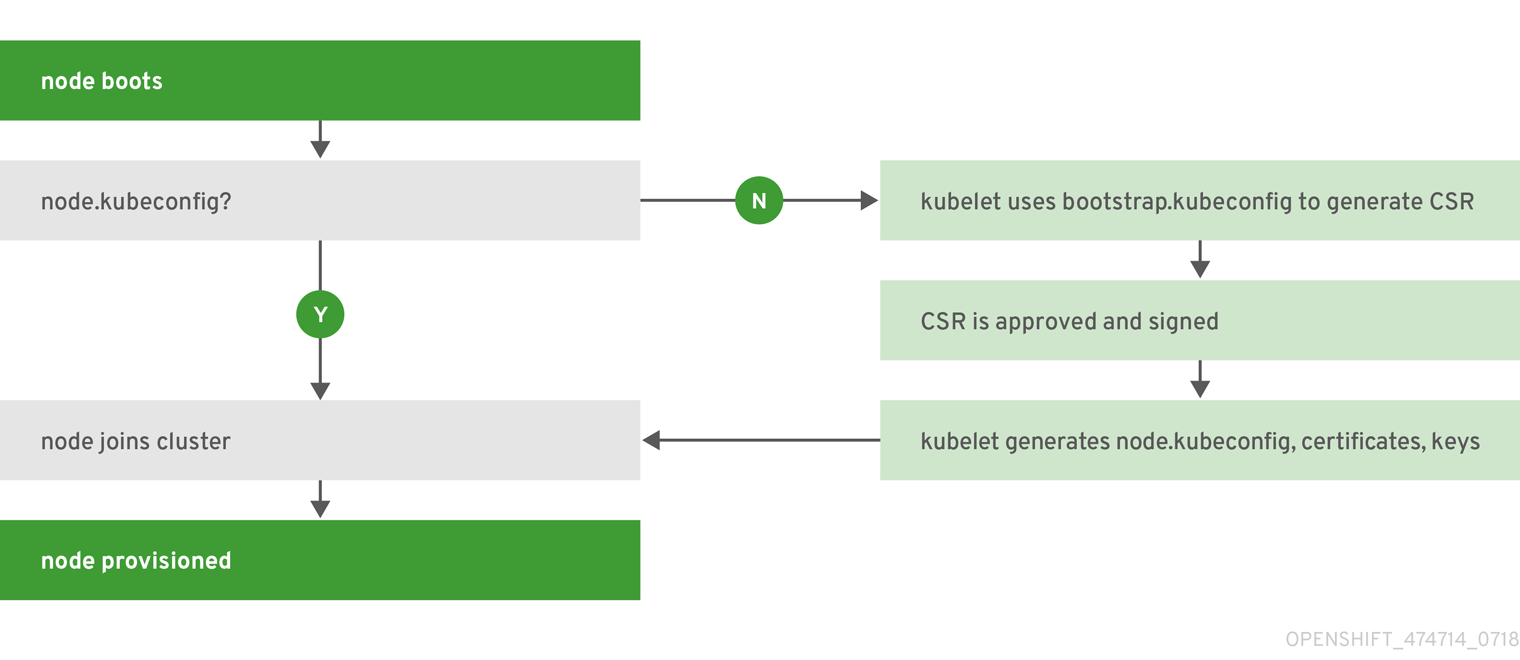

2.1.3.4. Node Bootstrapping

A node’s configuration is bootstrapped from the master, which means nodes pull their pre-defined configuration and client and server certificates from the master. This allows faster node start-up by reducing the differences between nodes, as well as centralizing more configuration and letting the cluster converge on the desired state. Certificate rotation and centralized certificate management are enabled by default.

When node services are started, the node checks if the

/etc/origin/node/node.kubeconfig

file and other node configuration files exist before joining the cluster. If they do not, the node pulls the configuration from the master, then joins the cluster.

ConfigMaps

are used to store the node configuration in the cluster, which populates the configuration file on the node host at

/etc/origin/node/node-config.yaml

. For definitions of the set of default node groups and their ConfigMaps, see

Defining Node Groups and Host Mappings

in Installing Clusters.

Node Bootstrap Workflow

The process for automatic node bootstrapping uses the following workflow:

By default during cluster installation, a set of

clusterrole

,

clusterrolebinding

and

serviceaccount

objects are created for use in node bootstrapping:

The

system:node-bootstrapper

cluster role is used for creating certificate signing requests (CSRs) during node bootstrapping:

$ oc describe clusterrole.authorization.openshift.io/system:node-bootstrapper

Modifying Node Configurations

A node’s configuration is modified by editing the appropriate ConfigMap in the

openshift-node

project. The

/etc/origin/node/node-config.yaml

must not be modified directly.

For example, for a node that is in the

node-config-compute

group, edit the ConfigMap using:

$ oc edit cm node-config-compute -n openshift-node

OpenShift Container Platform can utilize any server implementing the container image registry API as a source of images, including the Docker Hub, private registries run by third parties, and the integrated OpenShift Container Platform registry.

2.2.2. Integrated OpenShift Container Registry

OpenShift Container Platform provides an integrated container image registry called

OpenShift Container Registry

(OCR) that adds the ability to automatically provision new image repositories on demand. This provides users with a built-in location for their application builds to push the resulting images.

Whenever a new image is pushed to OCR, the registry notifies OpenShift Container Platform about the new image, passing along all the information about it, such as the namespace, name, and image metadata. Different pieces of OpenShift Container Platform react to new images, creating new builds and

deployments

.

OCR can also be deployed as a stand-alone component that acts solely as a container image registry, without the build and deployment integration. See

Installing a Stand-alone Deployment of OpenShift Container Registry

for details.

2.2.3. Third Party Registries

OpenShift Container Platform can create containers using images from third party registries, but it is unlikely that these registries offer the same image notification support as the integrated OpenShift Container Platform registry. In this situation OpenShift Container Platform will fetch tags from the remote registry upon imagestream creation. Refreshing the fetched tags is as simple as running

oc import-image <stream>

. When new images are detected, the previously-described build and deployment reactions occur.

OpenShift Container Platform can communicate with registries to access private image repositories using credentials supplied by the user. This allows OpenShift Container Platform to push and pull images to and from private repositories. The

Authentication

topic has more information.

2.2.4. Red Hat Quay Registries

If you need an enterprise-quality container image registry, Red Hat Quay is available both as a hosted service and as software you can install in your own data center or cloud environment. Advanced registry features in Red Hat Quay include geo-replication, image scanning, and the ability to roll back images.

Visit the

Quay.io

site to set up your own hosted Quay registry account. After that, follow the

Quay Tutorial

to log in to the Quay registry and start managing your images. Alternatively, refer to

Getting Started with Red Hat Quay

for information about setting up your own Red Hat Quay registry.

You can access your Red Hat Quay registry from OpenShift Container Platform like any remote container image registry. To learn how to set up credentials to access Red Hat Quay as a secured registry, refer to

Allowing Pods to Reference Images from Other Secured Registries

.

2.2.5. Authentication Enabled Red Hat Registry

All container images available through the Red Hat Container Catalog are hosted on an image registry,

registry.access.redhat.com

. With OpenShift Container Platform 3.11 Red Hat Container Catalog moved from

registry.access.redhat.com

to

registry.redhat.io

.

The new registry,

registry.redhat.io

, requires authentication for access to images and hosted content on OpenShift Container Platform. Following the move to the new registry, the existing registry will be available for a period of time.

OpenShift Container Platform pulls images from

registry.redhat.io

, so you must configure your cluster to use it.

The new registry uses standard OAuth mechanisms for authentication, with the following methods:

Authentication token.

Tokens, which are

generated by administrators

, are service accounts that give systems the ability to authenticate against the container image registry. Service accounts are not affected by changes in user accounts, so the token authentication method is reliable and resilient. This is the only supported authentication option for production clusters.

Web username and password.

This is the standard set of credentials you use to log in to resources such as

access.redhat.com

. While it is possible to use this authentication method with OpenShift Container Platform, it is not supported for production deployments. Restrict this authentication method to stand-alone projects outside OpenShift Container Platform.

You can use

docker login

with your credentials, either username and password or authentication token, to access content on the new registry.

All image streams point to the new registry. Because the new registry requires authentication for access, there is a new secret in the OpenShift namespace called

imagestreamsecret

.

You must place your credentials in two places:

OpenShift namespace

. Your credentials must exist in the OpenShift namespace so that the image streams in the OpenShift namespace can import.

Your host

. Your credentials must exist on your host because Kubernetes uses the credentials from your host when it goes to pull images.

To access the new registry:

Verify image import secret,

imagestreamsecret

, is in your OpenShift namespace. That secret has credentials that allow you to access the new registry.

Verify all of your cluster nodes have a

/var/lib/origin/.docker/config.json

, copied from master, that allows you to access the Red Hat registry.

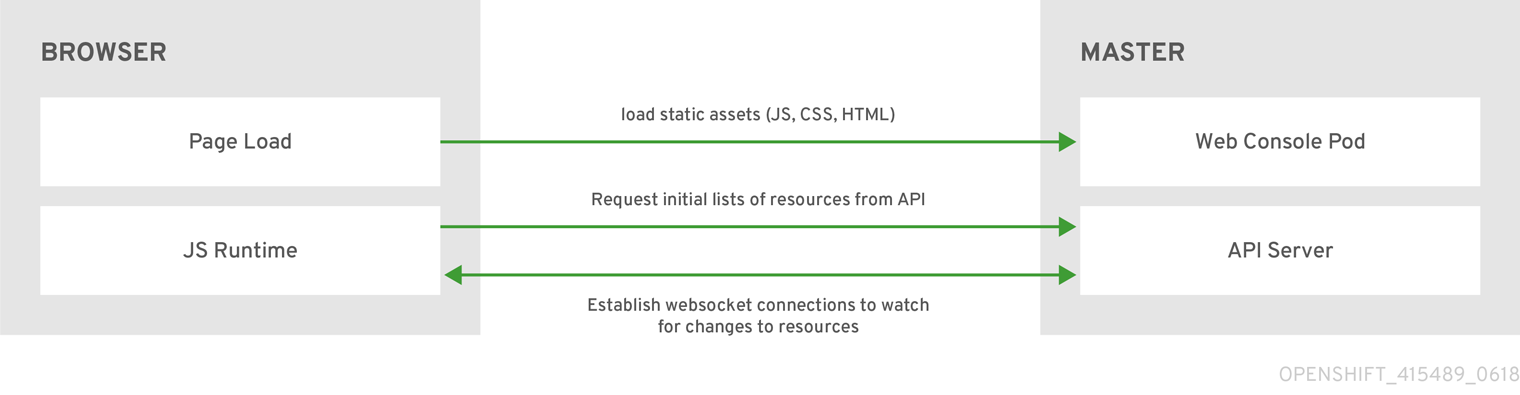

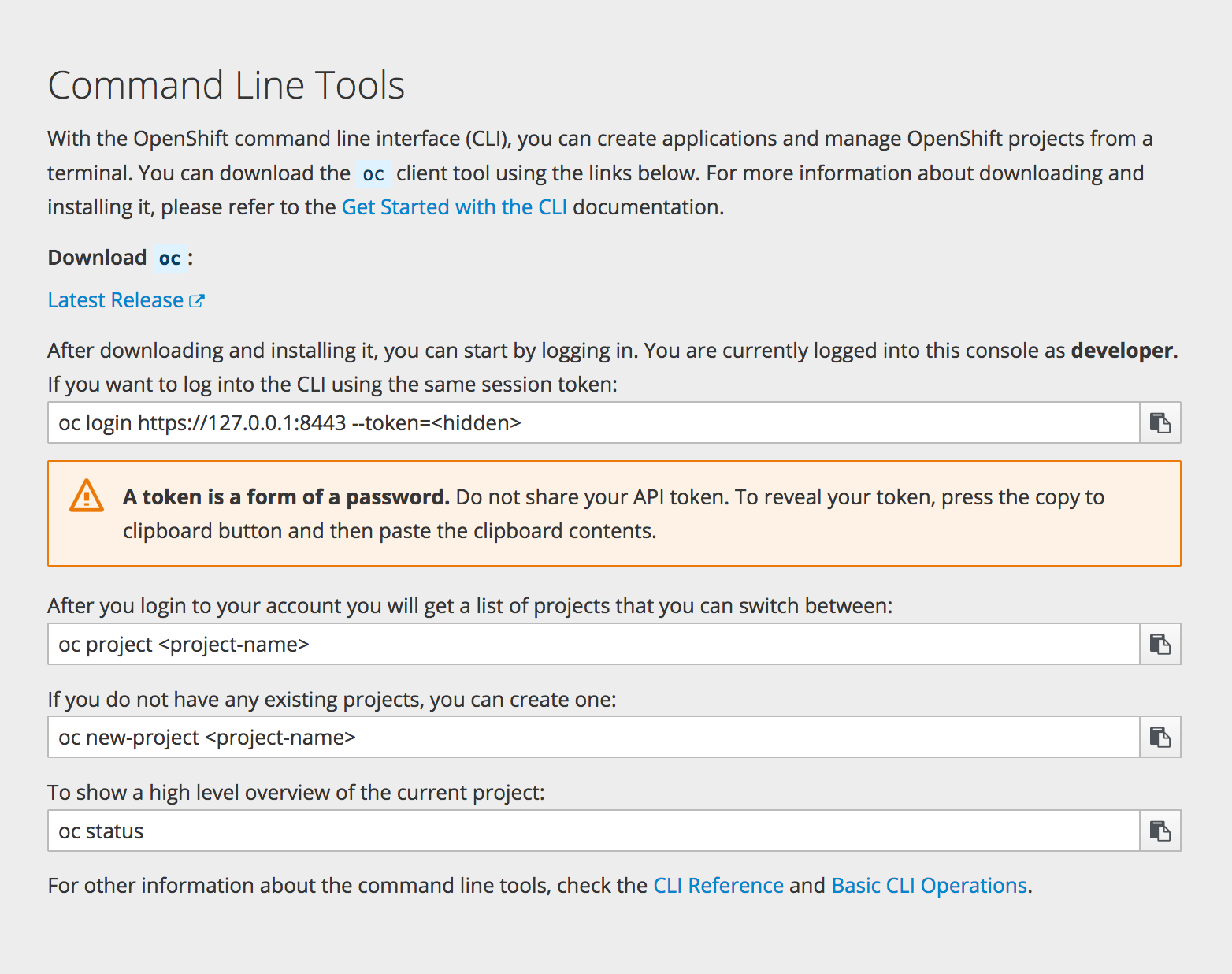

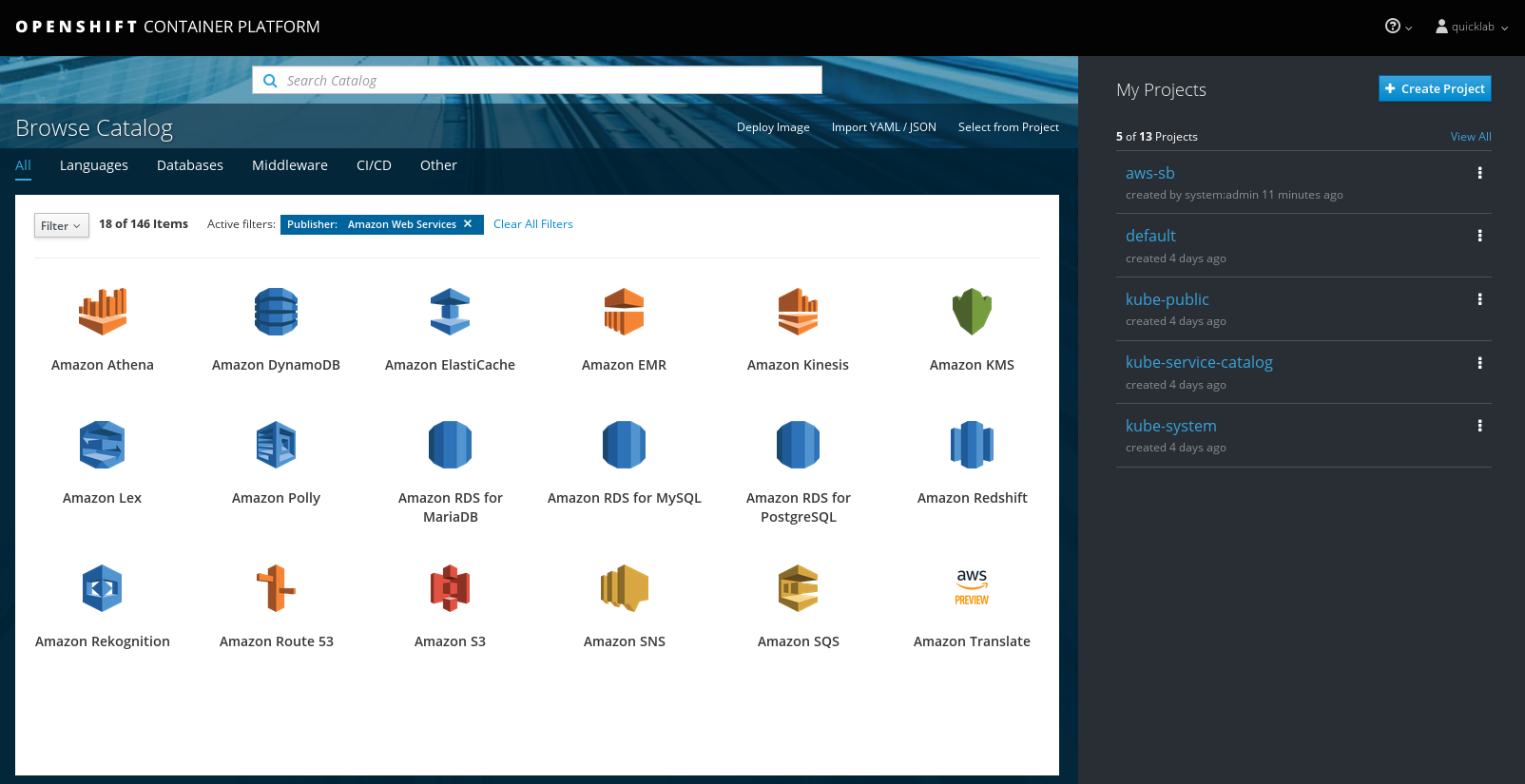

The OpenShift Container Platform web console is a user interface accessible from a web browser. Developers can use the web console to visualize, browse, and manage the contents of

projects

.

JavaScript must be enabled to use the web console. For the best experience, use a web browser that supports

WebSockets

.

The web console runs as a pod on the

master

. The static assets required to run the web console are served by the pod. Administrators can also

customize the web console

using extensions, which let you run scripts and load custom stylesheets when the web console loads.

When you access the web console from a browser, it first loads all required static assets. It then makes requests to the OpenShift Container Platform APIs using the values defined from the

openshift start

option

--public-master

, or from the related parameter

masterPublicURL

in the

webconsole-config

config map defined in the

openshift-web-console

namespace. The web console uses WebSockets to maintain a persistent connection with the API server and receive updated information as soon as it is available.

The configured host names and IP addresses for the web console are whitelisted to access the API server safely even when the browser would consider the requests to be

cross-origin

. To access the API server from a web application using a different host name, you must whitelist that host name by specifying the

--cors-allowed-origins

option on

openshift start

or from the related

master configuration file parameter

corsAllowedOrigins

.

The

corsAllowedOrigins

parameter is controlled by the configuration field. No pinning or escaping is done to the value. The following is an example of how you can pin a host name and escape dots:

corsAllowedOrigins:

- (?i)//my\.subdomain\.domain\.com(:|\z)

-

The

(?i)

makes it case-insensitive.

The

//

pins to the beginning of the domain (and matches the double slash following

http:

or

https:

).

The

\.

escapes dots in the domain name.

The

(:|\z)

matches the end of the domain name

(\z)

or a port separator

(:)

.

After

logging in

, the web console provides developers with an overview for the currently selected

project

:

-

-

The project selector allows you to

switch between projects

you have access to.

To quickly find services from within project view, type in your search criteria

Create new applications

using a source repository

or service from the service catalog.

Notifications related to your project.

The

Overview

tab (currently selected) visualizes the contents of your project with a high-level view of each component.

Applications

tab: Browse and perform actions on your deployments, pods, services, and routes.

Builds

tab: Browse and perform actions on your builds and image streams.

Resources

tab: View your current quota consumption and other resources.

Storage

tab: View persistent volume claims and request storage for your applications.

Monitoring

tab: View logs for builds, pods, and deployments, as well as event notifications for all objects in your project.

Catalog

tab: Quickly get to the catalog from within a project.

Cockpit

is automatically installed and enabled in to help you monitor your development environment.

Red Hat Enterprise Linux Atomic Host: Getting Started with Cockpit

provides more information on using Cockpit.

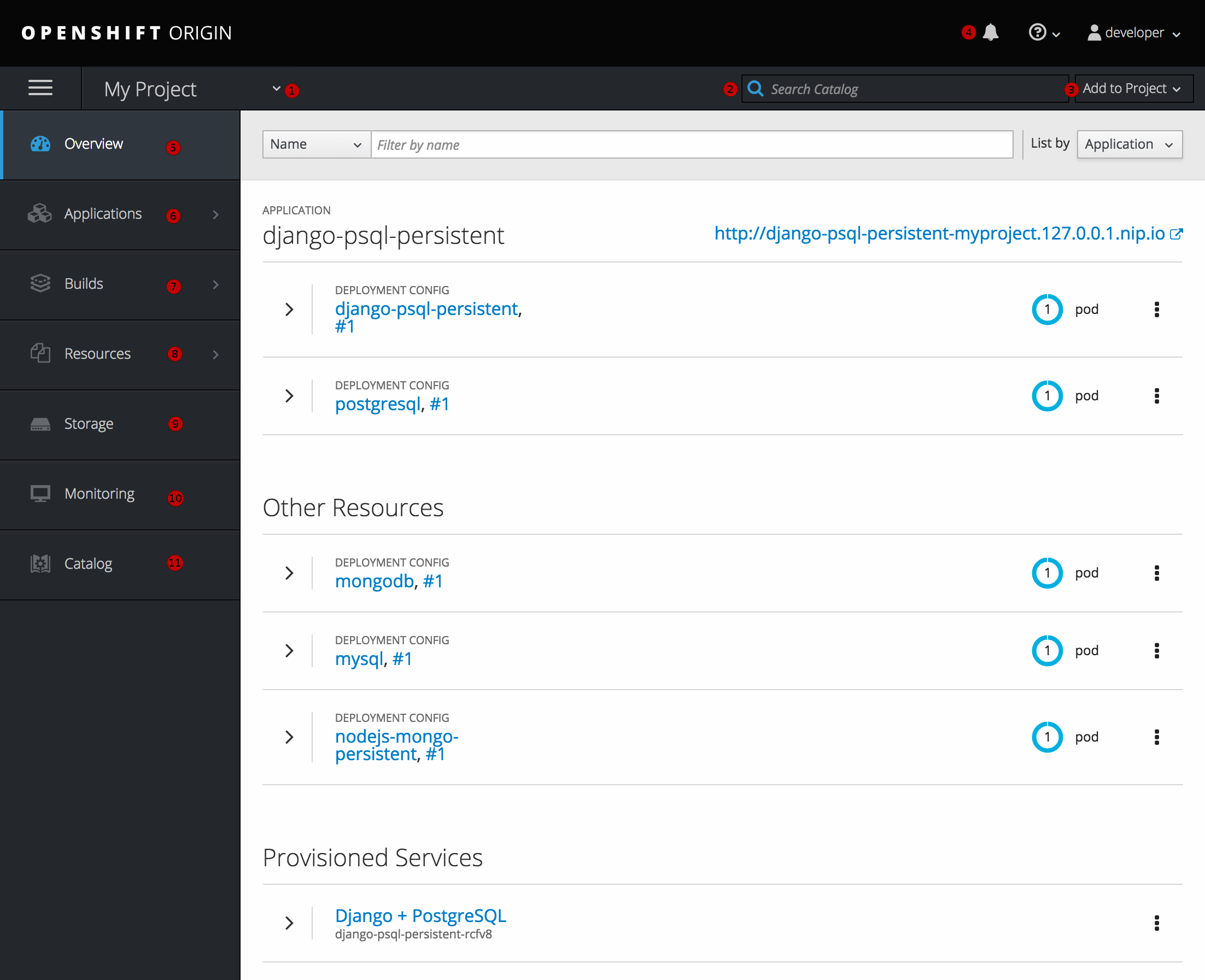

For pods based on Java images, the web console also exposes access to a

hawt.io

-based JVM console for viewing and managing any relevant integration components. A

Connect

link is displayed in the pod’s details on the

Browse → Pods

page, provided the container has a port named

jolokia

.

After connecting to the JVM console, different pages are displayed depending on which components are relevant to the connected pod.

The following pages are available:

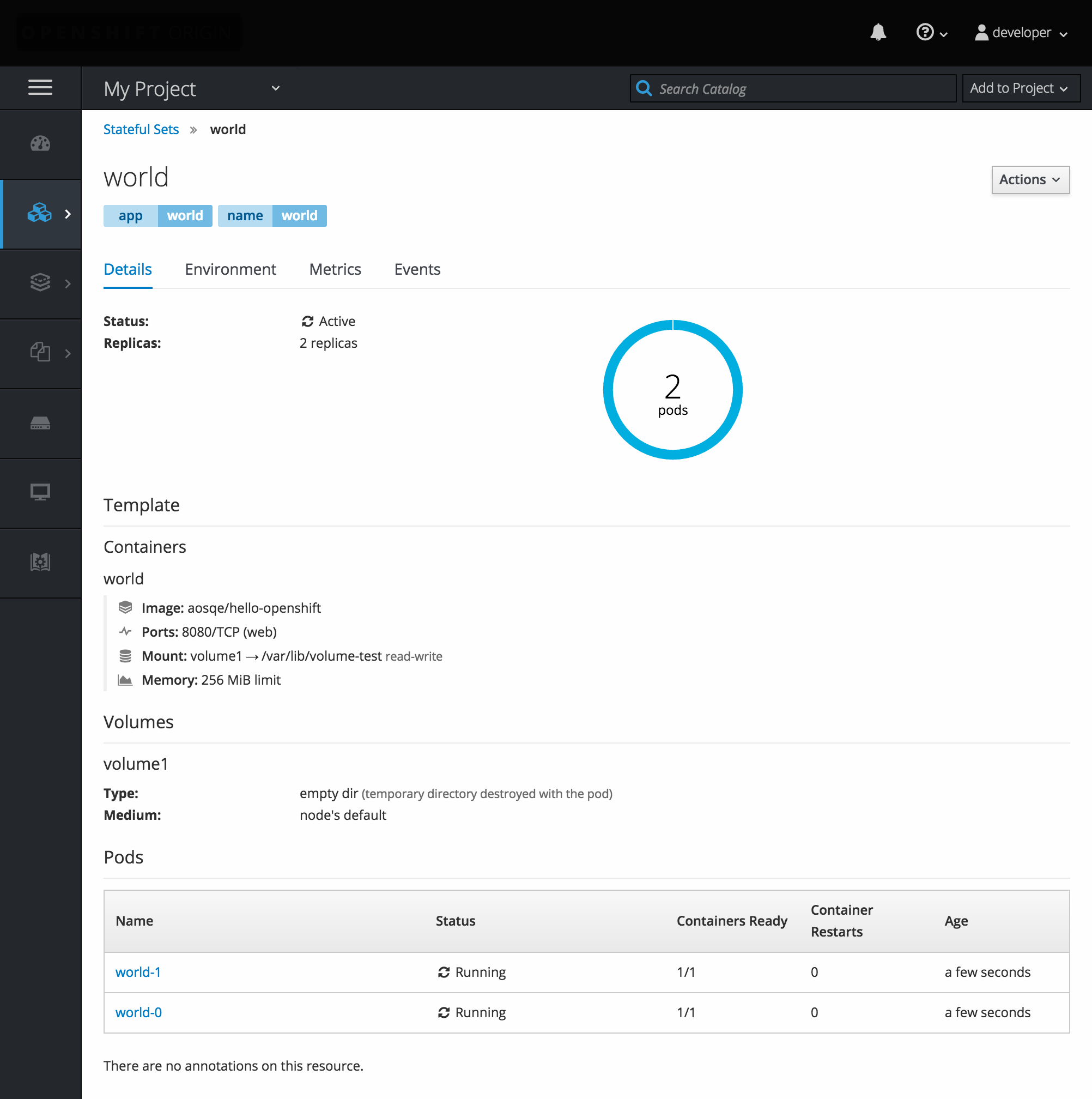

A

StatefulSet

controller provides a unique identity to its pods and determines the order of deployments and scaling.

StatefulSet

is useful for unique network identifiers, persistent storage, graceful deployment and scaling, and graceful deletion and termination.

The following topics provide high-level, architectural information on core concepts and objects you will encounter when using OpenShift Container Platform. Many of these objects come from Kubernetes, which is extended by OpenShift Container Platform to provide a more feature-rich development lifecycle platform.

Containers and images

are the building blocks for deploying your applications.

Pods and services

allow for containers to communicate with each other and proxy connections.

Projects and users

provide the space and means for communities to organize and manage their content together.

Builds and image streams

allow you to build working images and react to new images.

Deployments

add expanded support for the software development and deployment lifecycle.

Routes announce your service to the world.

Templates

allow for many objects to be created at once based on customized parameters.

3.2. Containers and Images

The basic units of OpenShift Container Platform applications are called

containers

.

Linux container technologies

are lightweight mechanisms for isolating running processes so that they are limited to interacting with only their designated resources.

Many application instances can be running in containers on a single host without visibility into each others' processes, files, network, and so on. Typically, each container provides a single service (often called a "micro-service"), such as a web server or a database, though containers can be used for arbitrary workloads.

The Linux kernel has been incorporating capabilities for container technologies for years. More recently the Docker project has developed a convenient management interface for Linux containers on a host. OpenShift Container Platform and Kubernetes add the ability to orchestrate Docker-formatted containers across multi-host installations.

Though you do not directly interact with the Docker CLI or service when using OpenShift Container Platform, understanding their capabilities and terminology is important for understanding their role in OpenShift Container Platform and how your applications function inside of containers. The

docker

RPM is available as part of RHEL 7, as well as CentOS and Fedora, so you can experiment with it separately from OpenShift Container Platform. Refer to the article

Get Started with Docker Formatted Container Images on Red Hat Systems

for a guided introduction.

A pod can have init containers in addition to application containers. Init containers allow you to reorganize setup scripts and binding code. An init container differs from a regular container in that it always runs to completion. Each init container must complete successfully before the next one is started.

For more information, see

Pods and Services

.

Containers in OpenShift Container Platform are based on Docker-formatted container

images

. An image is a binary that includes all of the requirements for running a single container, as well as metadata describing its needs and capabilities.

You can think of it as a packaging technology. Containers only have access to resources defined in the image unless you give the container additional access when creating it. By deploying the same image in multiple containers across multiple hosts and load balancing between them, OpenShift Container Platform can provide redundancy and horizontal scaling for a service packaged into an image.

You can use the Docker CLI directly to build images, but OpenShift Container Platform also supplies builder images that assist with creating new images by adding your code or configuration to existing images.

Because applications develop over time, a single image name can actually refer to many different versions of the "same" image. Each different image is referred to uniquely by its hash (a long hexadecimal number e.g.

fd44297e2ddb050ec4f…

) which is usually shortened to 12 characters (e.g.

fd44297e2ddb

).

Image Version Tag Policy

Rather than version numbers, the Docker service allows applying tags (such as

v1

,

v2.1

,

GA

, or the default

latest

) in addition to the image name to further specify the image desired, so you may see the same image referred to as

centos

(implying the

latest

tag),

centos:centos7

, or

fd44297e2ddb

.

Do not use the

latest

tag for any official OpenShift Container Platform images. These are images that start with

openshift3/

.

latest

can refer to a number of versions, such as

3.10

, or

3.11

.

How you tag the images dictates the updating policy. The more specific you are, the less frequently the image will be updated. Use the following to determine your chosen OpenShift Container Platform images policy:

The vX.Y tag points to X.Y.Z-<number>. For example, if the

registry-console

image is updated to v3.11, it points to the newest 3.11.Z-<number> tag, such as 3.11.1-8.

X.Y.Z

Similar to the vX.Y example above, the X.Y.Z tag points to the latest X.Y.Z-<number>. For example, 3.11.1 would point to 3.11.1-8

X.Y.Z-<number>

The tag is unique and does not change. When using this tag, the image does not update if an image is updated. For example, the 3.11.1-8 will always point to 3.11.1-8, even if an image is updated.

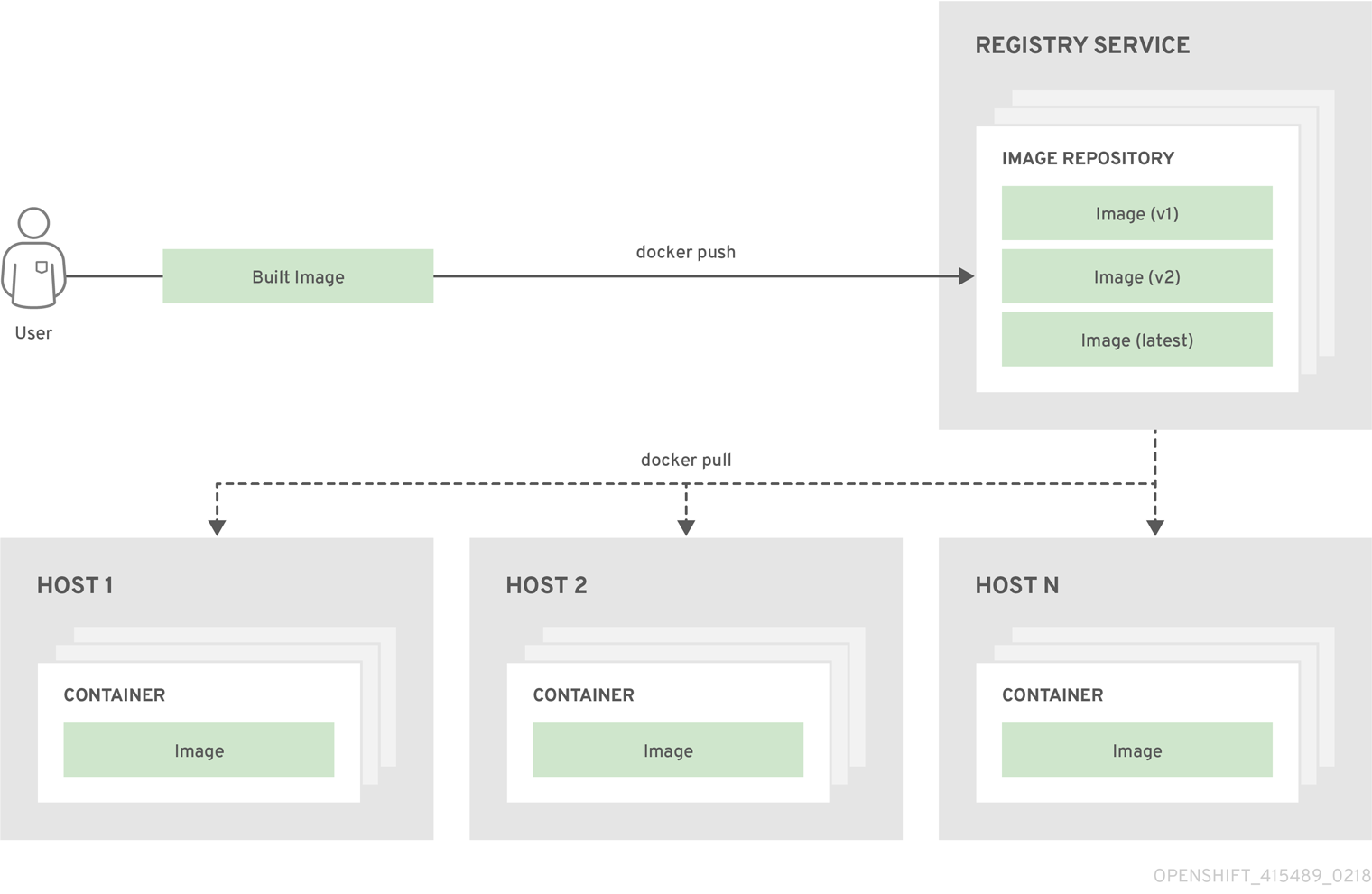

3.2.3. Container Image Registries

A container image registry is a service for storing and retrieving Docker-formatted container images. A registry contains a collection of one or more image repositories. Each image repository contains one or more tagged images. Docker provides its own registry, the

Docker Hub

, and you can also use private or third-party registries. Red Hat provides a registry at

registry.redhat.io

for subscribers. OpenShift Container Platform can also supply its own internal registry for managing custom container images.

The relationship between containers, images, and registries is depicted in the following diagram:

OpenShift Container Platform leverages the Kubernetes concept of a

pod

, which is one or more

containers

deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

Pods are the rough equivalent of a machine instance (physical or virtual) to a container. Each pod is allocated its own internal IP address, therefore owning its entire port space, and containers within pods can share their local storage and networking.

Pods have a lifecycle; they are defined, then they are assigned to run on a node, then they run until their container(s) exit or they are removed for some other reason. Pods, depending on policy and exit code, may be removed after exiting, or may be retained in order to enable access to the logs of their containers.

OpenShift Container Platform treats pods as largely immutable; changes cannot be made to a pod definition while it is running. OpenShift Container Platform implements changes by terminating an existing pod and recreating it with modified configuration, base image(s), or both. Pods are also treated as expendable, and do not maintain state when recreated. Therefore pods should usually be managed by higher-level

controllers

, rather than directly by users.

For the maximum number of pods per OpenShift Container Platform node host, see the

Cluster Maximums

.

Bare pods that are not managed by a

replication controller

will be not rescheduled upon node disruption.

Below is an example definition of a pod that provides a long-running service, which is actually a part of the OpenShift Container Platform infrastructure: the integrated container image registry. It demonstrates many features of pods, most of which are discussed in other topics and thus only briefly mentioned here:

Pod Object Definition (YAML)

apiVersion: v1

kind: Pod

metadata:

annotations: { ... }

labels: 1

deployment: docker-registry-1

deploymentconfig: docker-registry

docker-registry: default

generateName: docker-registry-1- 2

spec:

containers: 3

- env: 4

- name: OPENSHIFT_CA_DATA

value: ...

- name: OPENSHIFT_CERT_DATA

value: ...

- name: OPENSHIFT_INSECURE

value: "false"

- name: OPENSHIFT_KEY_DATA

value: ...

- name: OPENSHIFT_MASTER

value: https://master.example.com:8443

image: openshift/origin-docker-registry:v0.6.2 5

imagePullPolicy: IfNotPresent

name: registry

ports: 6

- containerPort: 5000

protocol: TCP

resources: {}

securityContext: { ... } 7

volumeMounts: 8

- mountPath: /registry

name: registry-storage

- mountPath: /var/run/secrets/kubernetes.io/serviceaccount

name: default-token-br6yz

readOnly: true

dnsPolicy: ClusterFirst

imagePullSecrets:

- name: default-dockercfg-at06w

restartPolicy: Always 9

serviceAccount: default 10

volumes: 11

- emptyDir: {}

name: registry-storage

- name: default-token-br6yz

secret:

secretName: default-token-br6yz

Pods can be "tagged" with one or more

labels

, which can then be used to select and manage groups of pods in a single operation. The labels are stored in key/value format in the

metadata

hash. One label in this example is

docker-registry=default

.

Pods must have a unique name within their

namespace

. A pod definition may specify the basis of a name with the

generateName

attribute, and random characters will be added automatically to generate a unique name.

containers

specifies an array of container definitions; in this case (as with most), just one.

Environment variables can be specified to pass necessary values to each container.

Each container in the pod is instantiated from its own

Docker-formatted container image

.

The container can bind to ports which will be made available on the pod’s IP.

OpenShift Container Platform defines a security context for containers which specifies whether they are allowed to run as privileged containers, run as a user of their choice, and more. The default context is very restrictive but administrators can modify this as needed.

The container specifies where external storage volumes should be mounted within the container. In this case, there is a volume for storing the registry’s data, and one for access to credentials the registry needs for making requests against the OpenShift Container Platform API.

The

pod restart policy

with possible values

Always

,

OnFailure

, and

Never

. The default value is

Always

.

Pods making requests against the OpenShift Container Platform API is a common enough pattern that there is a

serviceAccount

field for specifying which

service account

user the pod should authenticate as when making the requests. This enables fine-grained access control for custom infrastructure components.

The pod defines storage volumes that are available to its container(s) to use. In this case, it provides an ephemeral volume for the registry storage and a

secret

volume containing the service account credentials.

This pod definition does not include attributes that are filled by OpenShift Container Platform automatically after the pod is created and its lifecycle begins. The

Kubernetes pod documentation

has details about the functionality and purpose of pods.

3.3.1.1. Pod Restart Policy

A pod restart policy determines how OpenShift Container Platform responds when containers in that pod exit. The policy applies to all containers in that pod.

The possible values are:

Always

- Tries restarting a successfully exited container on the pod continuously, with an exponential back-off delay (10s, 20s, 40s) until the pod is restarted. The default is

Always

.

OnFailure

- Tries restarting a failed container on the pod with an exponential back-off delay (10s, 20s, 40s) capped at 5 minutes.

Never

- Does not try to restart exited or failed containers on the pod. Pods immediately fail and exit.

Once bound to a node, a pod will never be bound to another node. This means that a controller is necessary in order for a pod to survive node failure:

The Custom build strategy allows developers to define a specific builder image responsible for the entire build process. Using your own builder image allows you to customize your build process.

A

Custom builder image

is a plain Docker-formatted container image embedded with build process logic, for example for building RPMs or base images. The

openshift/origin-custom-docker-builder

image is available on the

Docker Hub

registry as an example implementation of a Custom builder image.

The Pipeline build strategy allows developers to define a

Jenkins pipeline

for execution by the Jenkins pipeline plugin. The build can be started, monitored, and managed by OpenShift Container Platform in the same way as any other build type.

Pipeline workflows are defined in a Jenkinsfile, either embedded directly in the build configuration, or supplied in a Git repository and referenced by the build configuration.

The first time a project defines a build configuration using a Pipeline strategy, OpenShift Container Platform instantiates a Jenkins server to execute the pipeline. Subsequent Pipeline build configurations in the project share this Jenkins server.

For more details on how the Jenkins server is deployed and how to configure or disable the autoprovisioning behavior, see

Configuring Pipeline Execution

.

The Jenkins server is not automatically removed, even if all Pipeline build configurations are deleted. It must be manually deleted by the user.

For more information about Jenkins Pipelines, see the

Jenkins documentation

.

An image stream and its associated tags provide an abstraction for referencing

container images

from within OpenShift Container Platform. The image stream and its tags allow you to see what images are available and ensure that you are using the specific image you need even if the image in the repository changes.

Image streams do not contain actual image data, but present a single virtual view of related images, similar to an image repository.

You can configure

Builds

and

Deployments

to watch an image stream for notifications when new images are added and react by performing a Build or Deployment, respectively.

For example, if a Deployment is using a certain image and a new version of that image is created, a Deployment could be automatically performed to pick up the new version of the image.

However, if the image stream tag used by the Deployment or Build is not updated, then even if the container image in the container image registry is updated, the Build or Deployment will continue using the previous (presumably known good) image.

The source images can be stored in any of the following:

OpenShift Container Platform’s

integrated registry

An external registry, for example

registry.redhat.io

or

hub.docker.com

Other image streams in the OpenShift Container Platform cluster

When you define an object that references an image stream tag (such as a Build or Deployment configuration), you point to an image stream tag, not the Docker repository. When you Build or Deploy your application, OpenShift Container Platform queries the Docker repository using the image stream tag to locate the associated ID of the image and uses that exact image.

The image stream metadata is stored in the etcd instance along with other cluster information.

The following image stream contains two tags:

34

which points to a Python v3.4 image and

35

which points to a Python v3.5 image:

$ oc describe is python

Example Output

Name: python

Namespace: imagestream

Created: 25 hours ago

Labels: app=python

Annotations: openshift.io/generated-by=OpenShiftWebConsole

openshift.io/image.dockerRepositoryCheck=2017-10-03T19:48:00Z

Docker Pull Spec: docker-registry.default.svc:5000/imagestream/python

Image Lookup: local=false

Unique Images: 2

Tags: 2

tagged from centos/python-34-centos7

* centos/python-34-centos7@sha256:28178e2352d31f240de1af1370be855db33ae9782de737bb005247d8791a54d0

14 seconds ago

tagged from centos/python-35-centos7

* centos/python-35-centos7@sha256:2efb79ca3ac9c9145a63675fb0c09220ab3b8d4005d35e0644417ee552548b10

7 seconds ago

Using image streams has several significant benefits:

You can tag, rollback a tag, and quickly deal with images, without having to re-push using the command line.

You can trigger Builds and Deployments when a new image is pushed to the registry. Also, OpenShift Container Platform has generic triggers for other resources (such as Kubernetes objects).

You can

mark a tag for periodic re-import

. If the source image has changed, that change is picked up and reflected in the image stream, which triggers the Build and/or Deployment flow, depending upon the Build or Deployment configuration.

You can share images using fine-grained access control and quickly distribute images across your teams.

If the source image changes, the image stream tag will still point to a known-good version of the image, ensuring that your application will not break unexpectedly.

You can

configure security

around who can view and use the images through permissions on the image stream objects.

Users that lack permission to read or list images on the cluster level can still retrieve the images tagged in a project using image streams.

For a curated set of image streams, see the

OpenShift Image Streams and Templates library

.

When using image streams, it is important to understand what the image stream tag is pointing to and how changes to tags and images can affect you. For example:

If your image stream tag points to a container image tag, you need to understand how that container image tag is updated. For example, a container image tag

docker.io/ruby:2.5

points to a v2.5 ruby image, but a container image tag

docker.io/ruby:latest

changes with major versions. So, the container image tag that a image stream tag points to can tell you how stable the image stream tag is.

If your image stream tag follows another image stream tag instead of pointing directly to a container image tag, it is possible that the image stream tag might be updated to follow a different image stream tag in the future. This change might result in picking up an incompatible version change.

-

Docker repository

-

A collection of related container images and tags identifying them. For example, the OpenShift Jenkins images are in a Docker repository:

docker.io/openshift/jenkins-2-centos7

-

Container registry

-

A content server that can store and service images from Docker repositories. For example:

registry.redhat.io

-

container image

-

A specific set of content that can be run as a container. Usually associated with a particular tag within a Docker repository.

-

container image tag

-

A label applied to a container image in a repository that distinguishes a specific image. For example, here

3.6.0

is a tag:

docker.io/openshift/jenkins-2-centos7:3.6.0

A container image tag can be updated to point to new container image content at any time.

-

container image ID

-

A SHA (Secure Hash Algorithm) code that can be used to pull an image. For example:

docker.io/openshift/jenkins-2-centos7@sha256:ab312bda324

A SHA image ID cannot change. A specific SHA identifier always references the exact same container image content.

-

Image stream

-

An OpenShift Container Platform object that contains pointers to any number of Docker-formatted container images identified by tags. You can think of an image stream as equivalent to a Docker repository.

-

Image stream tag

-

A named pointer to an image in an image stream. An image stream tag is similar to a container image tag. See

Image Stream Tag

below.

-

Image stream image

-

An image that allows you to retrieve a specific container image from a particular image stream where it is tagged. An image stream image is an API resource object that pulls together some metadata about a particular image SHA identifier. See

Image Stream Images

below.

-

Image stream trigger

-

A trigger that causes a specific action when an image stream tag changes. For example, importing can cause the value of the tag to change, which causes a trigger to fire when there are Deployments, Builds, or other resources listening for those. See

Image Stream Triggers

below.

3.5.2.3. Image Stream Images

An

image stream image

points from within an image stream to a particular image ID.

Image stream images allow you to retrieve metadata about an image from a particular image stream where it is tagged.

Image stream image objects are automatically created in OpenShift Container Platform whenever you import or tag an image into the image stream. You should never have to explicitly define an image stream image object in any image stream definition that you use to create image streams.

The image stream image consists of the image stream name and image ID from the repository, delimited by an

@

sign:

<image-stream-name>@<image-id>

To refer to the image in the

image stream object example above

, the image stream image looks like:

origin-ruby-sample@sha256:47463d94eb5c049b2d23b03a9530bf944f8f967a0fe79147dd6b9135bf7dd13d

3.5.2.4. Image Stream Tags

An

image stream tag

is a named pointer to an image in an

image stream

. It is often abbreviated as

istag

. An image stream tag is used to reference or retrieve an image for a given image stream and tag.

Image stream tags can reference any local or externally managed image. It contains a history of images represented as a stack of all images the tag ever pointed to. Whenever a new or existing image is tagged under particular image stream tag, it is placed at the first position in the history stack. The image previously occupying the top position will be available at the second position, and so forth. This allows for easy rollbacks to make tags point to historical images again.

The following image stream tag is from the

image stream object example above

:

Image Stream Tag with Two Images in its History

tags:

- items:

- created: 2017-09-02T10:15:09Z

dockerImageReference: 172.30.56.218:5000/test/origin-ruby-sample@sha256:47463d94eb5c049b2d23b03a9530bf944f8f967a0fe79147dd6b9135bf7dd13d

generation: 2

image: sha256:909de62d1f609a717ec433cc25ca5cf00941545c83a01fb31527771e1fab3fc5

- created: 2017-09-29T13:40:11Z

dockerImageReference: 172.30.56.218:5000/test/origin-ruby-sample@sha256:909de62d1f609a717ec433cc25ca5cf00941545c83a01fb31527771e1fab3fc5

generation: 1

image: sha256:47463d94eb5c049b2d23b03a9530bf944f8f967a0fe79147dd6b9135bf7dd13d

tag: latest

Image stream tags can be

permanent

tags or

tracking

tags.

Permanent tags

are version-specific tags that point to a particular version of an image, such as Python 3.5.

Tracking tags

are reference tags that follow another image stream tag and could be updated in the future to change which image they follow, much like a symlink. Note that these new levels are not guaranteed to be backwards-compatible.

For example, the

latest

image stream tags that ship with OpenShift Container Platform are tracking tags. This means consumers of the

latest

image stream tag will be updated to the newest level of the framework provided by the image when a new level becomes available. A

latest

image stream tag to

v3.10

could be changed to

v3.11

at any time. It is important to be aware that these

latest

image stream tags behave differently than the Docker

latest

tag. The

latest

image stream tag, in this case, does not point to the latest image in the Docker repository. It points to another image stream tag, which might not be the latest version of an image. For example, if the

latest

image stream tag points to

v3.10

of an image, when the

3.11

version is released, the

latest

tag is not automatically updated to

v3.11

, and remains at

v3.10

until it is manually updated to point to a

v3.11

image stream tag.

Tracking tags are limited to a single image stream and cannot reference other image streams.

You can create your own image stream tags for your own needs. See the

Recommended Tagging Conventions

.

The image stream tag is composed of the name of the image stream and a tag, separated by a colon:

<image stream name>:<tag>

For example, to refer to the

sha256:47463d94eb5c049b2d23b03a9530bf944f8f967a0fe79147dd6b9135bf7dd13d

image in the

image stream object example above

, the image stream tag would be:

origin-ruby-sample:latest

3.5.2.5. Image Stream Change Triggers

Image stream triggers allow your Builds and Deployments to be automatically invoked when a new version of an upstream image is available.

For example, Builds and Deployments can be automatically started when an image stream tag is modified. This is achieved by monitoring that particular image stream tag and notifying the Build or Deployment when a change is detected.

The

ImageChange

trigger results in a new replication controller whenever the content of an

image stream tag

changes (when a new version of the image is pushed).

3.5.2.6. Image Stream Mappings

When the

integrated registry

receives a new image, it creates and sends an image stream mapping to OpenShift Container Platform, providing the image’s project, name, tag, and image metadata.

Configuring image stream mappings is an advanced feature.

This information is used to create a new image (if it does not already exist) and to tag the image into the image stream. OpenShift Container Platform stores complete metadata about each image, such as commands, entry point, and environment variables. Images in OpenShift Container Platform are immutable and the maximum name length is 63 characters.

See the

Developer Guide

for details on manually tagging images.

The following image stream mapping example results in an image being tagged as

test/origin-ruby-sample:latest

:

3.5.2.7. Working with Image Streams

The following sections describe how to use image streams and image stream tags. For more information on working with image streams, see

Managing Images

.

3.5.2.7.1. Getting Information about Image Streams

To get general information about the image stream and detailed information about all the tags it is pointing to, use the following command:

$ oc describe is/<image-name>

For example:

$ oc describe is/python

3.5.2.7.2. Adding Additional Tags to an Image Stream

To add a tag that points to one of the existing tags, you can use the

oc tag

command:

oc tag <image-name:tag> <image-name:tag>

For example:

$ oc tag python:3.5 python:latest

3.5.2.7.4. Updating an Image Stream Tag

To update a tag to reflect another tag in an image stream:

$ oc tag <image-name:tag> <image-name:latest>

For example, the following updates the

latest

tag to reflect the

3.6

tag in an image stream:

$ oc tag python:3.6 python:latest

3.5.2.7.6. Configuring Periodic Importing of Tags

When working with an external container image registry, to periodically re-import an image (such as, to get latest security updates), use the

--scheduled

flag:

$ oc tag <repositiory/image> <image-name:tag> --scheduled

For example:

$ oc tag docker.io/python:3.6.0 python:3.6 --scheduled

3.6.1. Replication controllers

A

replication controller

ensures that a specified number of replicas of a pod are running at all times. If pods exit or are deleted, the replication controller acts to instantiate more up to the defined number. Likewise, if there are more running than desired, it deletes as many as necessary to match the defined amount.

A replication controller configuration consists of:

The number of replicas desired (which can be adjusted at runtime).

A pod definition to use when creating a replicated pod.

A selector for identifying managed pods.

A selector is a set of labels assigned to the pods that are managed by the replication controller. These labels are included in the pod definition that the replication controller instantiates. The replication controller uses the selector to determine how many instances of the pod are already running in order to adjust as needed.

The replication controller does not perform auto-scaling based on load or traffic, as it does not track either. Rather, this would require its replica count to be adjusted by an external auto-scaler.

A replication controller is a core Kubernetes object called

ReplicationController

.

The following is an example

ReplicationController

definition:

apiVersion: v1

kind: ReplicationController

metadata:

name: frontend-1

spec:

replicas: 1 1

selector: 2

name: frontend

template: 3

metadata:

labels: 4

name: frontend 5

spec:

containers:

- image: openshift/hello-openshift

name: helloworld

ports:

- containerPort: 8080

protocol: TCP

restartPolicy: Always

-

1

-

The number of copies of the pod to run.

The label selector of the pod to run.

A template for the pod the controller creates.

Labels on the pod should include those from the label selector.

The maximum name length after expanding any parameters is 63 characters.

Similar to a

replication controller

, a replica set ensures that a specified number of pod replicas are running at any given time. The difference between a replica set and a replication controller is that a replica set supports set-based selector requirements whereas a replication controller only supports equality-based selector requirements.

Only use replica sets if you require custom update orchestration or do not require updates at all, otherwise, use

Deployments

. Replica sets can be used independently, but are used by deployments to orchestrate pod creation, deletion, and updates. Deployments manage their replica sets automatically, provide declarative updates to pods, and do not have to manually manage the replica sets that they create.

A replica set is a core Kubernetes object called

ReplicaSet

.

The following is an example

ReplicaSet

definition:

apiVersion: apps/v1

kind: ReplicaSet

metadata:

name: frontend-1

labels:

tier: frontend

spec:

replicas: 3

selector: 1

matchLabels: 2

tier: frontend

matchExpressions: 3

- {key: tier, operator: In, values: [frontend]}

template:

metadata:

labels:

tier: frontend

spec:

containers:

- image: openshift/hello-openshift

name: helloworld

ports:

- containerPort: 8080

protocol: TCP

restartPolicy: Always

-

1

-

A label query over a set of resources. The result of

matchLabels

and

matchExpressions

are logically conjoined.

Equality-based selector to specify resources with labels that match the selector.

Set-based selector to filter keys. This selects all resources with key equal to

tier

and value equal to

frontend

.

A job is similar to a replication controller, in that its purpose is to create pods for specified reasons. The difference is that replication controllers are designed for pods that will be continuously running, whereas jobs are for one-time pods. A job tracks any successful completions and when the specified amount of completions have been reached, the job itself is completed.

The following example computes π to 2000 places, prints it out, then completes:

apiVersion: extensions/v1

kind: Job

metadata:

name: pi

spec:

selector:

matchLabels:

app: pi

template:

metadata:

name: pi

labels:

app: pi

spec:

containers:

- name: pi

image: perl

command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]

restartPolicy: Never

See the

Jobs

topic for more information on how to use jobs.

3.6.4. Deployments and Deployment Configurations

Building on replication controllers, OpenShift Container Platform adds expanded support for the software development and deployment lifecycle with the concept of deployments. In the simplest case, a deployment just creates a new replication controller and lets it start up pods. However, OpenShift Container Platform deployments also provide the ability to transition from an existing deployment of an image to a new one and also define hooks to be run before or after creating the replication controller.

The OpenShift Container Platform

DeploymentConfig

object defines the following details of a deployment:

The elements of a

ReplicationController

definition.

Triggers for creating a new deployment automatically.

The strategy for transitioning between deployments.

Life cycle hooks.

Each time a deployment is triggered, whether manually or automatically, a deployer pod manages the deployment (including scaling down the old replication controller, scaling up the new one, and running hooks). The deployment pod remains for an indefinite amount of time after it completes the deployment in order to retain its logs of the deployment. When a deployment is superseded by another, the previous replication controller is retained to enable easy rollback if needed.

For detailed instructions on how to create and interact with deployments, refer to

Deployments

.

Here is an example

DeploymentConfig

definition with some omissions and callouts:

apiVersion: v1

kind: DeploymentConfig

metadata:

name: frontend

spec:

replicas: 5

selector:

name: frontend

template: { ... }

triggers:

- type: ConfigChange 1

- imageChangeParams:

automatic: true

containerNames:

- helloworld

from:

kind: ImageStreamTag

name: hello-openshift:latest

type: ImageChange 2

strategy:

type: Rolling 3

-

1

-

A

ConfigChange

trigger causes a new deployment to be created any time the replication controller template changes.

An

ImageChange

trigger causes a new deployment to be created each time a new version of the backing image is available in the named image stream.

The default

Rolling

strategy makes a downtime-free transition between deployments.

A template describes a set of

objects

that can be parameterized and processed to produce a list of objects for creation by OpenShift Container Platform. The objects to create can include anything that users have permission to create within a project, for example

services

, build configurations, and

deployment configurations

. A template may also define a set of

labels

to apply to every object defined in the template.

See the

template guide

for details about creating and using templates.

Chapter 4. Additional Concepts

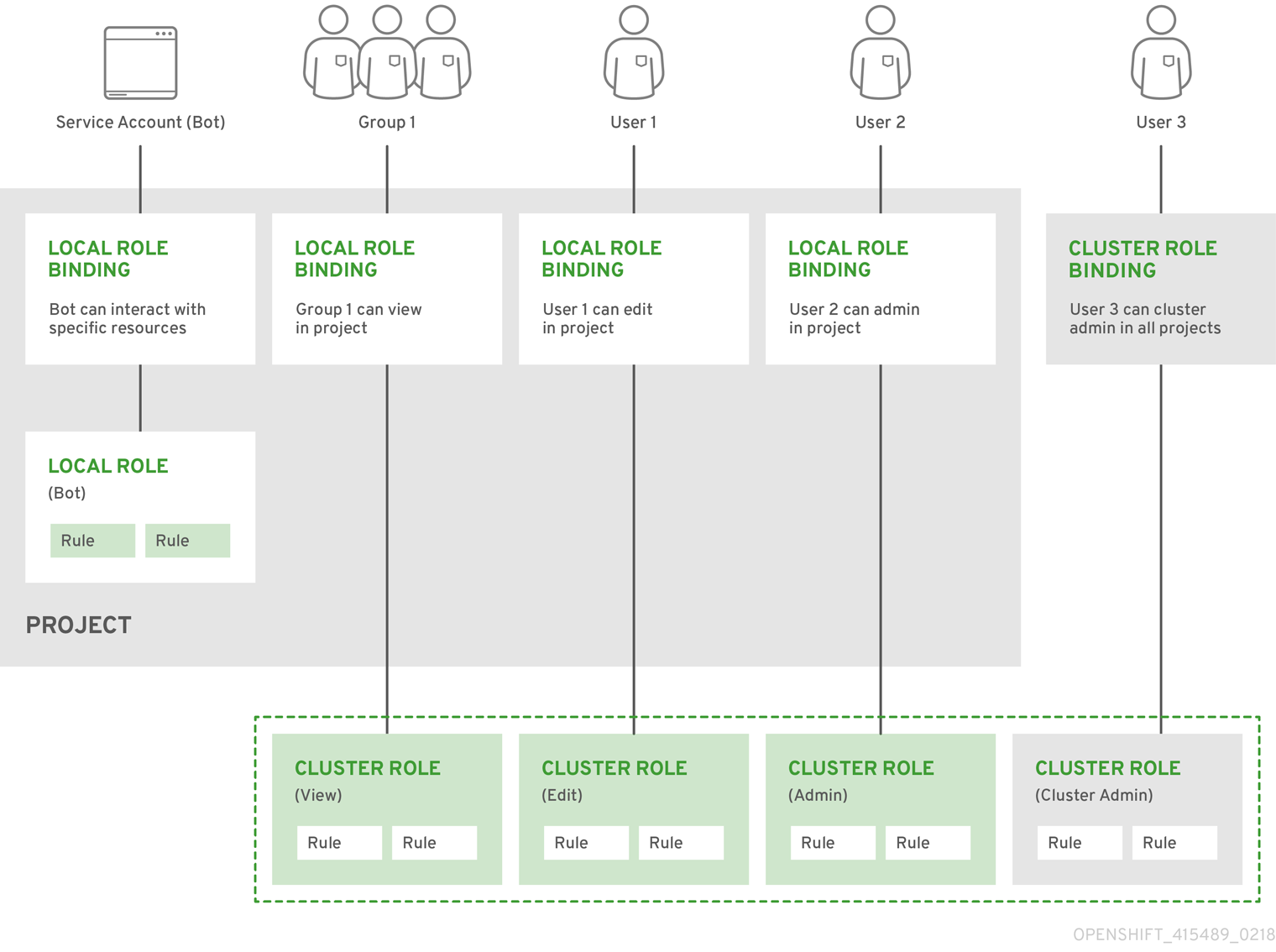

The authentication layer identifies the user associated with requests to the OpenShift Container Platform API. The authorization layer then uses information about the requesting user to determine if the request should be allowed.

As an administrator, you can

configure authentication

using a

master configuration file

.

A

user

in OpenShift Container Platform is an entity that can make requests to the OpenShift Container Platform API. Typically, this represents the account of a developer or administrator that is interacting with OpenShift Container Platform.

A user can be assigned to one or more

groups

, each of which represent a certain set of users. Groups are useful when

managing authorization policies

to grant permissions to multiple users at once, for example allowing access to

objects

within a

project

, versus granting them to users individually.

In addition to explicitly defined groups, there are also system groups, or

virtual groups

, that are automatically provisioned by OpenShift. These can be seen when

viewing cluster bindings

.

In the default set of virtual groups, note the following in particular:

4.1.3. API Authentication

Requests to the OpenShift Container Platform API are authenticated using the following methods:

-

OAuth Access Tokens

-

-

Obtained from the OpenShift Container Platform OAuth server using the

<master>

/oauth/authorize

and

<master>

/oauth/token

endpoints.

Sent as an

Authorization: Bearer…

header.

Sent as a websocket subprotocol header in the form

base64url.bearer.authorization.k8s.io.<base64url-encoded-token>

for websocket requests.

-

X.509 Client Certificates

-

-

Requires a HTTPS connection to the API server.

Verified by the API server against a trusted certificate authority bundle.

The API server creates and distributes certificates to controllers to authenticate themselves.

Any request with an invalid access token or an invalid certificate is rejected by the authentication layer with a 401 error.

If no access token or certificate is presented, the authentication layer assigns the

system:anonymous

virtual user and the

system:unauthenticated

virtual group to the request. This allows the authorization layer to determine which requests, if any, an anonymous user is allowed to make.

A request to the OpenShift Container Platform API can include an

Impersonate-User

header, which indicates that the requester wants to have the request handled as though it came from the specified user. You impersonate a user by adding the

--as=<user>

flag to requests.

Before User A can impersonate User B, User A is authenticated. Then, an authorization check occurs to ensure that User A is allowed to impersonate the user named User B. If User A is requesting to impersonate a service account,

system:serviceaccount:namespace:name

, OpenShift Container Platform confirms that User A can impersonate the

serviceaccount

named

name

in

namespace

. If the check fails, the request fails with a 403 (Forbidden) error code.

By default, project administrators and editors can impersonate service accounts in their namespace. The

sudoers

role allows a user to impersonate

system:admin

, which in turn has cluster administrator permissions. The ability to impersonate

system:admin

grants some protection against typos, but not security, for someone administering the cluster. For example, running

oc delete nodes --all

fails, but running

oc delete nodes --all --as=system:admin

succeeds. You can grant a user that permission by running this command:

$ oc create clusterrolebinding <any_valid_name> --clusterrole=sudoer --user=<username>

If you need to create a project request on behalf of a user, include the

--as=<user> --as-group=<group1> --as-group=<group2>

flags in your command. Because

system:authenticated:oauth

is the only bootstrap group that can create project requests, you must impersonate that group, as shown in the following example:

$ oc new-project <project> --as=<user> \

--as-group=system:authenticated --as-group=system:authenticated:oauth

The OpenShift Container Platform master includes a built-in OAuth server. Users obtain OAuth access tokens to authenticate themselves to the API.

When a person requests a new OAuth token, the OAuth server uses the configured

identity provider

to determine the identity of the person making the request.

It then determines what user that identity maps to, creates an access token for that user, and returns the token for use.

Every request for an OAuth token must specify the OAuth client that will receive and use the token. The following OAuth clients are automatically created when starting the OpenShift Container Platform API:

|

|