What are GitLab Runners

GitLab runners execute pipeline jobs. These runners are configured and registered inside a GitLab Runner application.

That application can be installed directly on the OS or run in a Docker container.

The runners’ configuration is stored in /etc/gitlab-runner/config.toml, where each runner is defined as a [[runners]] entry.

General common issues

– The job is failing with a 403 error such as:

Getting source from Git repository

00:01

Fetching changes with git depth set to 20...

Reinitialized existing Git repository in /builds/basic-projects-using-shared-ci/maven-docker-basic-example1/.git/

remote: You are not allowed to download code from this project.

fatal: unable to access 'http://gitlab.david.com/basic-projects-using-shared-ci/maven-docker-basic-example1.git/': The requested URL returned error: 403

ERROR: Job failed: exit code 1

How to fix:

Normally we don’t need to set any credentials in the GitLab Runner configuration to allow git cloning, so trying to do that is not the right fix.

A common cause is that the user who triggers the job is not a member of the project.

Note: being an administrator of the GitLab instance doesn’t make us a member of every project, so we need to check the membership in the project or the group specifically.

– The job is stuck with the following error message, where foo-tag… is (are) the tag(s) set for a job defined in the YAML pipeline:

This job is stuck because you don't have any active runners online or available with any of these tags assigned to them: foo-tag...

Go to project CI settings

How to fix:

Case 1: no runner matching that tag is installed at all.

Solution: as suggested, configure/add a runner for that tag.

Case 2: a runner is installed for that tag but it is not accessible to that project.

Subcase 1: the runner was registered specifically in another project (Settings/CI/CD) and not in a common group to which the two projects belong.

Solution: delete the runner registered at the project level and register it at the group level.

Subcase 2: the runner was registered in the common group but, in Settings/CI/CD, the « Enable shared runners for this group » option was disabled.

Solution: just enable it again.
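The tags mentioned in that message are the ones declared under the tags: keyword of the job. A minimal sketch of such a job follows; the job name and the script are illustrative, and foo-tag stands for the tag shown in the message.

```yaml
# .gitlab-ci.yml (sketch)
example-job:                 # hypothetical job name
  tags:
    - foo-tag                # only a runner registered with this tag (e.g. --tag-list "foo-tag") can pick up the job
  script:
    - echo "picked up by a runner tagged foo-tag"
```

If no runner visible to the project carries every tag listed here, the job stays pending with the message above.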

– The Docker runner fails early with an error message concerning the cache volume.

The message can look like:

ERROR: Preparation failed: adding cache volume: set volume permissions: running permission container "9802d1f1586765cef195531e7d5571911151d5ba698c2c4d70a251e9ea2e14c5" for volume "runner-84exxmn--project-23046492-concurrent-0-cache-c33bcaa1fd2c77edfc3893b41966cea8": starting permission container: Error response from daemon: error evaluating symlinks from mount source "/var/lib/docker/volumes/runner-84exxmn--project-23046492-concurrent-0-cache-c33bcaa1fd2c77edfc3893b41966cea8/_data": lstat /var/lib/docker/volumes/runner-84exxmn--project-23046492-concurrent-0-cache-c33bcaa1fd2c77edfc3893b41966cea8: no such file or directory (linux_set.go:105:0s)

That kind of error may happen when a Docker volume used by GitLab for caching resources was dropped.

How to fix: recreate the Docker volume on the host, with the _data folder as a child folder, such as:

/var/lib/docker/volumes/runner-84exxmn--project-23046492-concurrent-0-cache-c33bcaa1fd2c77edfc3893b41966cea8/_data

– A GitLab Runner job fails early with an error message about missing disk space in a subfolder of /var/lib/docker/overlay2/, while the file system still has plenty of free space.

The message looks like:

ERROR: Job failed (system failure): Error response from daemon: error creating overlay mount to /var/lib/docker/overlay2/15454/545454.... no space left on device (docker.go:787:0s)

How to fix:

– First check with df that the /var/lib/docker/overlay2/ folder of the host where the GitLab Runner runs has enough space. Double-check it with dd, for example by generating a 2 GB file.

– If no space issue is detected, try deleting/restarting the GitLab Runner. If that doesn’t work, restart Docker too.

To run the GitLab Runner application as a Docker container:

docker run -d --name gitlab-runner --restart always \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v gitlab-runner-config:/etc/gitlab-runner \
  gitlab/gitlab-runner:latest

The gitlab-runner-config volume holds the GitLab Runner application configuration (mainly the config.toml file), and we mount the host’s Docker socket so that runners using the Docker executor can start/stop/remove Docker containers.

Register a runner instance with the interactive form:

docker run --rm -it -v gitlab-runner-config:/etc/gitlab-runner gitlab/gitlab-runner:latest register

Here we are prompted to enter:

– the GitLab URL

– the registration token, retrievable from the GitLab GUI under Settings/CI/CD/Runners

– the executor type (docker, kubernetes, custom, shell, virtualbox, …)

– the base image

Register a runner instance with the non-interactive form:

Here is an example that creates a runner able to use the Docker client via the binding of the host’s Docker socket:

docker run --rm -it \
  -v gitlab-runner-config:/etc/gitlab-runner \
  -v /var/run/docker.sock:/var/run/docker.sock \
  gitlab/gitlab-runner:latest register \
  --non-interactive \
  --executor "docker" \
  --docker-image "docker:19.03.12" \
  --url "https://gitlab.com" \
  --registration-token "ijEKCYos8s4hPv7J5DXW" \
  --description "docker-runner" \
  --tag-list "docker" \
  --access-level="not_protected"

Restart the runner application (generally not required, because configuration changes are picked up on the fly):

docker restart gitlab-runner

Enable Docker commands in your CI/CD jobs

There are 4 ways to enable the use of docker build and docker run during jobs, each with its own tradeoffs:

– The shell executor. Here job scripts are executed as the gitlab-runner user on the GitLab Runner machine/server, which requires Docker Engine to be installed on that machine/server.

– The Docker executor with the Docker image (Docker-in-Docker)

– Docker socket binding

– kaniko. It is a Google tool that doesn’t depend on a Docker daemon and executes each command within a Dockerfile completely in userspace

To use the Docker-in-Docker way or the Docker socket binding, we need to specify the docker executor.

Here is a runner registration with the Docker socket binding way:

WARN: the non-interactive form didn’t work in my case! It created a docker+machine executor instead of a docker executor. That is not visible in the config.toml, only at runtime when the GitLab Runner starts:

Preparing the « docker+machine » executor

docker run --rm -it \
  -v gitlab-runner-config:/etc/gitlab-runner \
  gitlab/gitlab-runner:latest register \
  --non-interactive \
  --executor "docker" \
  --docker-image "docker:19.03.12" \
  --docker-volumes /var/run/docker.sock:/var/run/docker.sock \
  --url "https://gitlab.com" \
  --registration-token "ijEKCYos8s4hPv7J5DXW" \
  --description "docker-runner" \
  --tag-list "docker" \
  --access-level="not_protected"

So favor the interactive form, selecting the docker executor when asked.

Finally, the config.toml needs a runner with a volume mount for the Docker socket, such as:

[[runners]]
  name = "docker-runner"
  url = "https://gitlab.com"
  token = "Kg-y8nNj3zsvx9Xs1DsM"
  executor = "docker"
  [runners.custom_build_dir]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    tls_verify = false
    image = "docker:19.03.12"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]
    pull_policy = "if-not-present"
    extra_hosts = ["gitlab.david.com:172.17.0.1"]
    shm_size = 0

pull_policy:

To use local images present on the Docker host (the socket binding scenario), we use this option:

[runners.docker]
  pull_policy = "if-not-present"

This is helpful if the Docker image specified with the FROM keyword of the Dockerfile, or with the image property in the .gitlab-ci.yml, is a custom image that is not in a public registry.

Without that trick, we need to push the image to a private registry and configure the GitLab Runner with a certificate to access that registry (see the /etc/gitlab-runner/certs folder of the GitLab Runner).

extra_hosts:

It mimics the --add-host argument of the docker run command.

It adds « host:ip address » mapping(s) to the /etc/hosts of the ephemeral container started by the Docker runner.

And here is how to use Docker in a pipeline:

Docker Build:
  stage: docker-build
  tags:
    - docker # docker is the tag we specified for the runner
  image: docker:19.03.12 # optional, we can use the image defined in the runner
  script:
    - docker build -f docker/Dockerfile -t my-app .
  when: always
  only:
    refs:
      - master

The Docker-in-Docker way is similar but requires three changes: no socket binding in the runner volume config, privileged mode set to true, and a dind service configuration. So far I have not managed to get it working.
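For reference, here is a job-side sketch of the Docker-in-Docker variant, following the TLS-enabled setup that GitLab documents for Docker 19.03. It has not been validated in the environment described in this article. It assumes the runner's [runners.docker] section uses privileged = true and volumes = ["/certs/client", "/cache"], without the Docker socket mount.

```yaml
# .gitlab-ci.yml (sketch, untested in this setup)
stages:
  - docker-build

Docker Build:
  stage: docker-build
  tags:
    - docker
  image: docker:19.03.12
  services:
    - docker:19.03.12-dind          # the Docker daemon runs in this service container
  variables:
    DOCKER_TLS_CERTDIR: "/certs"    # dind generates its TLS certificates here; the client reads /certs/client
  script:
    - docker info                   # quick check that the client reaches the dind daemon
    - docker build -f docker/Dockerfile -t my-app .
```

Unlike socket binding, images built this way live in the dind service container and disappear at the end of the job, so they usually have to be pushed to a registry in the same job.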

Use docker-compose in your CI/CD jobs

There are several ways to do that.

A very simple one is to define a job relying on the docker/compose:latest Docker image (or better, a specific version such as 1.27.4), combined with either the Docker dind service or a GitLab Runner mounted with the host Docker socket.

Example with a GitLab Runner mounted with the host Docker socket:

Docker Build:
  stage: docker-build
  tags:
    - docker
  image:
    name: docker/compose:1.27.4
  script:
    - docker-compose -v
    - docker-compose -f docker-compose-single-step.yml ps
    - docker-compose -f docker-compose-single-step.yml build
  when: always
  only:
    refs:
      - master

Two ways to deploy on Kubernetes:

– use a runner with the Kubernetes executor and the correct environment variables in the runner

– use a runner with any executor, relying on a Kubernetes cluster configuration registered in GitLab

Scenario 1: GitLab CI native integration with an existing Kubernetes cluster that we added in GitLab

Register an existing Kubernetes cluster in GitLab

The cluster may be added at 3 distinct levels in GitLab:

– project

– group

– instance/global

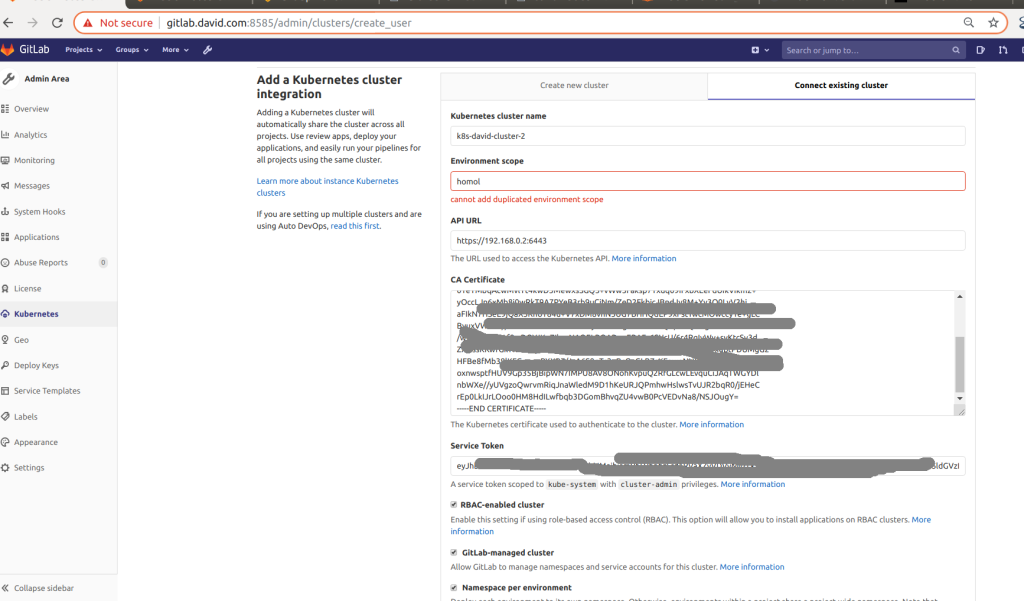

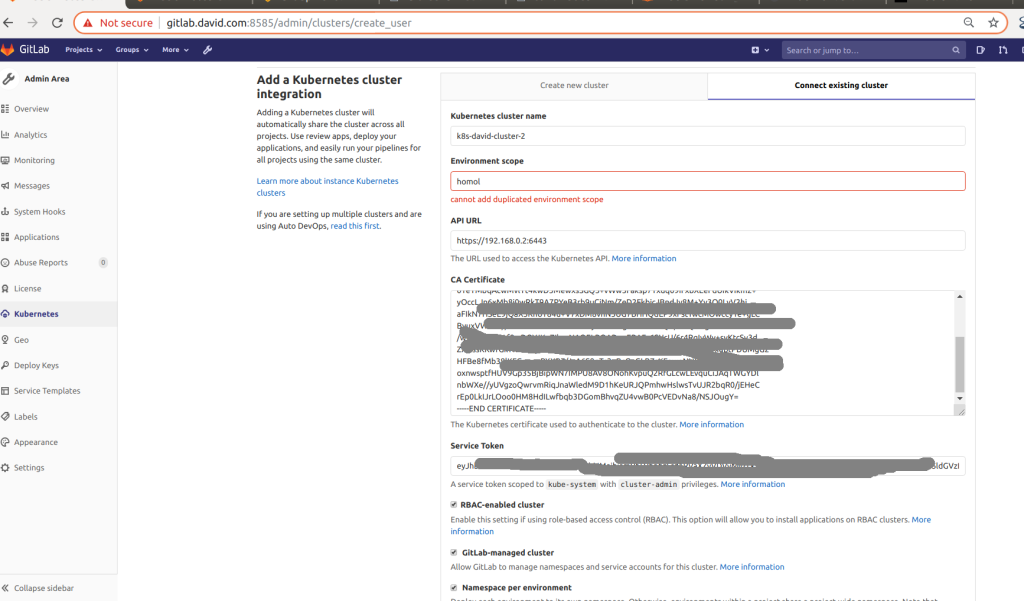

In the GitLab Kubernetes menu page, look for the option « Add a Kubernetes cluster integration ».

Here is a preview with GitLab and k8s set up locally:

Fields to set:

– Kubernetes cluster name (required) – The name you wish to give the cluster.

– Environment scope (required) – The environment associated with this cluster.

– API URL (required) – It’s the URL that GitLab uses to access the Kubernetes API. Kubernetes exposes several APIs; we want the “base” URL that is common to all of them. For example, https://kubernetes.example.com rather than https://kubernetes.example.com/api/v1.

Get the API URL by running this command:

kubectl cluster-info | grep -E 'Kubernetes master|Kubernetes control plane' | awk '/http/ {print $NF}'

– CA certificate (required) – A valid Kubernetes certificate is needed to authenticate to the cluster. We use the certificate created by default.

List the secrets with kubectl get secrets; one should have a name similar to default-token-xxxxx. Copy that secret name for use below.

Get the certificate by running this command:

kubectl get secret <secret name> -o jsonpath="{['data']['ca\.crt']}" | base64 --decode

Note: if the command returns the entire certificate chain, you must copy the Root CA certificate and any intermediate certificates at the bottom of the chain.

– Token – GitLab authenticates against Kubernetes using service tokens, which are scoped to a particular namespace.

The token used should belong to a service account with cluster-admin privileges.

To create this service account, create a file called gitlab-admin-service-account.yaml with the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: gitlab
  namespace: kube-system
---
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 is available since 1.8
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: gitlab-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: gitlab
    namespace: kube-system

Then deploy it with kubectl:

kubectl apply -f gitlab-admin-service-account.yaml

Finally, retrieve the token of the gitlab service account:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep gitlab | awk '{print $1}')

– Project namespace (optional) – You don’t have to fill this in. By leaving it blank, GitLab creates one for you.

Note:

Each project should have a unique namespace.

The project namespace is not necessarily the namespace of the secret, if you’re using a secret with broader permissions, like the secret from default.

You should not use default as the project namespace.

If you or someone created a secret specifically for the project, usually with limited permissions, the secret’s namespace and project namespace may be the same.

Define a pipeline in 3 stages: build a Spring Boot/Redis app, build/tag/push a Docker image to the GitLab container registry, and finally deploy the image as a pod on Kubernetes

Here is the complete pipeline:

stages:
  - maven-build
  - docker-build
  - deploy

variables:
  MAVEN_OPTS: "-Dmaven.repo.local=$CI_PROJECT_DIR/.m2/repository"
  CI_DEBUG_TRACE: "true"

Maven Package:
  stage: maven-build
  tags:
    - maven3-jdk11
  image: maven:3.6.3-jdk-11
  cache:
    paths:
      - .m2/repository
    key: ${CI_COMMIT_REF_NAME}
  script:
    # we store the Git branch/commit/pipeline info in a file. Helpful for an app version actuator or about->version information
    - echo { \"branch\":\"${CI_COMMIT_REF_NAME}\", \"commit\":\"${CI_COMMIT_SHA}\", \"pipelineId\":\"${CI_PIPELINE_ID}\"} > git-info.json
    - echo "git-info.json content =" && cat git-info.json
    - mv git-info.json ${CI_PROJECT_DIR}/src/main/resources
    - mvn $MAVEN_ARGS clean package
    # compute the docker build version: on master, we take the maven artifact version without -SNAPSHOT. Otherwise we take the branch name
    - |
      if [[ "${CI_COMMIT_REF_NAME}" = "master" ]]; then
        dockerImageVersion=$(mvn $MAVEN_ARGS -q -U -Dexpression=project.version -DforceStdout org.apache.maven.plugins:maven-help-plugin:3.2.0:evaluate)
        dockerImageVersion=${dockerImageVersion/-SNAPSHOT/}
      else
        dockerImageVersion=${CI_COMMIT_REF_NAME}
      fi
    - echo "dockerImageVersion=${dockerImageVersion}"
    - echo ${dockerImageVersion} > docker-image-version.json
  artifacts:
    name: ${CI_PROJECT_NAME}:${CI_COMMIT_REF_NAME}
    expire_in: 1 week
    paths:
      - ${CI_PROJECT_DIR}/target/dependency/
      - ${CI_PROJECT_DIR}/docker-image-version.json
  when: always
  only:
    refs:
      - master

Docker Build:
  stage: docker-build
  tags:
    - docker
  # image: docker:19.03.12
  script:
    - cat docker-image-version.json
    - ls -R target/
    - imageName="$CI_PROJECT_NAMESPACE/$CI_PROJECT_NAME:$(cat docker-image-version.json)"
    - docker build -f docker/Dockerfile -t $imageName .
    - docker login -u $CI_DEPLOY_USER -p $CI_DEPLOY_PASSWORD $CI_REGISTRY
    - docker tag $imageName $CI_REGISTRY/$imageName
    - docker push $CI_REGISTRY/$imageName
  when: always
  only:
    refs:
      - master

Deploy in Kubernetes:
  stage: deploy
  tags:
    - docker
  # image: google/cloud-sdk:350.0.0
  # image: registry.gitlab.com/gitlab-examples/kubernetes-deploy
  image:
    name: bitnami/kubectl:1.18.19
    # 1. By default the image sets kubectl as its entrypoint, and
    # 2. the GitLab Runner executes the entrypoint of the image at the "step_script" stage,
    # so it executes something like "kubectl sh -c",
    # which generates the error: unknown command "sh" for "kubectl".
    # Fix: override the entrypoint with an empty one
    entrypoint: [""]
  environment: dev
  script:
    - kubectl config view --minify | grep namespace
    # create a secret in the current namespace (computed and created by the GitLab Runner) to allow logging in to the container registry
    - kubectl create secret docker-registry gitlab-registry --docker-server="$CI_REGISTRY" --docker-username="$CI_DEPLOY_USER" --docker-password="$CI_DEPLOY_PASSWORD" --docker-email="$GITLAB_USER_EMAIL" -o yaml --dry-run=client | kubectl apply -f -
    # update the service account to use that secret to pull images
    # (block scalar: the JSON patch contains ": ", which a plain YAML scalar cannot hold)
    - |
      kubectl -n spring-boot-docker-kubernetes-example-32-dev patch serviceaccount default -p '{"imagePullSecrets": [{"name": "gitlab-registry"}]}'
    - EPOCH_SEC=\"$(date +%s)\"
    - image_version=$(cat docker-image-version.json)
    - image="$CI_REGISTRY/kubernetes-projects/spring-boot-docker-kubernetes-example:${image_version}"
    - echo $CI_DEPLOY_USER
    - echo "EPOCH_SEC=$EPOCH_SEC"
    - echo "image_version=$image_version"
    - echo "image=$image"
    - kubectl get pods
    - cp k8s/deployment.yml k8s/deployment-dynamic.yml
    - sed -i s@__COMMIT_SHA__@$CI_COMMIT_SHA@ k8s/deployment-dynamic.yml
    - sed -i s@__DATE_TIME__@$EPOCH_SEC@ k8s/deployment-dynamic.yml
    - sed -i s@__JAR_VERSION__@$image_version@ k8s/deployment-dynamic.yml
    - sed -i s@__IMAGE__@$image@ k8s/deployment-dynamic.yml
    - sed -i s@__NAMESPACE__@$KUBE_NAMESPACE@g k8s/deployment-dynamic.yml
    - kubectl apply -f k8s/deployment-dynamic.yml
  when: always
  only:
    refs:
      - master

And here is the Kubernetes YAML that defines the resources to deploy.

Note that it is a template with some variable parts that we set during the deploy stage, before actually deploying the resources with kubectl.

apiVersion: v1
kind: Namespace
metadata:
  name: __NAMESPACE__
---
# spring boot app
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-boot-docker-kubernetes-example-sboot
  namespace: __NAMESPACE__
spec:
  replicas: 1
  selector: # it defines how the Deployment finds which Pods to manage.
    matchLabels: # It may have more complex rules. Here we simply select a label that is defined just below in the pod template
      app: spring-boot-docker-kubernetes-example-sboot
  template: # pod template
    metadata:
      labels:
        # Optional: add a commit sha or a datetime label to make the deployment be updated by kubectl apply even if the docker image is the same
        app: spring-boot-docker-kubernetes-example-sboot
        commitSha: __COMMIT_SHA__
        dateTime: __DATE_TIME__
        jarVersion: __JAR_VERSION__
    spec:
      containers:
        - image: __IMAGE__ # the image is variable
          name: spring-boot-docker-kubernetes-example-sboot
          command: ["java"]
          args: ["-cp", "/app:/app/lib/*", "-Djava.security.egd=file:/dev/./urandom", "-Xdebug", "-Xrunjdwp:transport=dt_socket,server=y,suspend=n,address=0.0.0.0:8888", "davidxxx.SpringBootAndRedisApplication"]
          imagePullPolicy: Always # useful in dev because the source code may change while we reuse the same dev docker image
          # imagePullPolicy: IfNotPresent # prevent pulling from a docker registry
          readinessProbe:
            httpGet:
              scheme: HTTP
              # We use the default Spring Boot readiness health check (Spring Boot 2.3 or later)
              path: /actuator/health/readiness
              port: 8090
            initialDelaySeconds: 15
            timeoutSeconds: 5
          livenessProbe:
            httpGet:
              scheme: HTTP
              # We use the default Spring Boot liveness health check (Spring Boot 2.3 or later)
              path: /actuator/health/liveness
              port: 8090
            initialDelaySeconds: 15
            timeoutSeconds: 15
          # it is informational (only ports declared in the service are required). It is like the docker EXPOSE instruction
          # ports:
          #   - containerPort: 8090 # most of the time, it has to be the same as NodePort.targetPort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: spring-boot-docker-kubernetes-example-sboot
  # the service name matters. It is how other pods of the cluster may communicate with that pod
  name: spring-boot-docker-kubernetes-example-sboot
  namespace: __NAMESPACE__
spec:
  type: NodePort
  ports:
    - name: "application-port" # port.name is just informational
      targetPort: 8090 # port of the running app
      port: 8090 # Cluster IP Port
      nodePort: 30000 # External port (has to be unique in the cluster). By default Kubernetes allocates a node port from a range (default: 30000-32767)
    - name: "debug-port" # port.name is just informational
      targetPort: 8888 # port of the running app
      port: 8888 # Cluster IP Port
      nodePort: 30001 # External port (has to be unique in the cluster). By default Kubernetes allocates a node port from a range (default: 30000-32767)
  selector:
    app: spring-boot-docker-kubernetes-example-sboot
---
###------- REDIS---------###
# Redis app
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spring-boot-docker-kubernetes-example-redis
  namespace: __NAMESPACE__
spec:
  replicas: 1
  selector: # it defines how the Deployment finds which Pods to manage.
    matchLabels: # It may have more complex rules. Here we simply select a label that is defined just below in the pod template
      app: spring-boot-docker-kubernetes-example-redis
  template: # pod template
    metadata:
      labels:
        app: spring-boot-docker-kubernetes-example-redis
        commitSha: __COMMIT_SHA__ # Optional: add a commit sha label to make the deployment be updated by kubectl apply even if the docker image is the same
        dateTime: __DATE_TIME__
    spec:
      # Optional: hard constraint: force the deployment on a node labeled redis-data=true
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: redis-data
                    operator: In
                    values:
                      - "true"
          # alternative: soft constraint: try to deploy on a node labeled redis-data=true
          # preferredDuringSchedulingIgnoredDuringExecution:
          #   - weight: 1
          #     preference:
          #       matchExpressions:
          #         - key: redis-data
          #           operator: In
          #           values:
          #             - "true"
      containers:
        - image: redis:6.0.9-alpine3.12
          name: spring-boot-docker-kubernetes-example-redis
          # the --appendonly flag persists data into a file
          command: ["redis-server"]
          args: ["--appendonly", "yes"]
          # it is informational (only ports declared in the service are required). It is like the docker EXPOSE instruction
          # ports:
          #   - containerPort: 6379
          #     name: foo-redis
          volumeMounts:
            - mountPath: /data
              name: redis-data
      volumes:
        - name: redis-data
          hostPath:
            path: /var/kub-volume-mounts/spring-boot-docker-kubernetes-example-redis/_data
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: spring-boot-docker-kubernetes-example-redis
  # the service name matters. It is how other pods of the cluster may communicate with that pod
  name: spring-boot-docker-kubernetes-example-redis
  namespace: __NAMESPACE__
spec:
  type: ClusterIP
  ports:
    - name: "application-port" # port.name is just informational
      targetPort: 6379 # port of the running app
      port: 6379 # Cluster IP Port
  selector:
    app: spring-boot-docker-kubernetes-example-redis

Pipeline explanations

Note: there is no explanation of the first two parts (Maven build and Docker build/tag/push), which are not the subject here.

Here is how the GitLab CI native Kubernetes features work when a pipeline is executed.

1) The GitLab Runner relies on the native Kubernetes features only if the job definition sets the environment property. It defines in which Kubernetes « conceptual » environment the job is currently executing.

2) The GitLab Runner creates a namespace (if it doesn’t exist) for the project. The namespace is a concatenation of project name + project id + the environment value we set in the job.

For example, a project named spring-boot-docker-kubernetes-example with id 32 and an environment property set to dev will make GitLab CI create/use the Kubernetes namespace spring-boot-docker-kubernetes-example-32-dev.

3) The GitLab Runner uses that namespace to interact with the cluster and deploy any resources with kubectl.
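The three points above can be observed with a stripped-down deploy job such as the sketch below. It assumes the cluster integration described earlier is configured for the project, reuses the kubectl image from the complete pipeline, and reads the KUBE_NAMESPACE variable that the pipeline above already uses in its sed step.

```yaml
# .gitlab-ci.yml (sketch)
stages:
  - deploy

Deploy in Kubernetes:
  stage: deploy
  image:
    name: bitnami/kubectl:1.18.19
    entrypoint: [""]
  environment: dev      # point 1: without this property the Kubernetes integration is not used
  script:
    # point 2: with project name spring-boot-docker-kubernetes-example and id 32,
    # this is expected to print spring-boot-docker-kubernetes-example-32-dev
    - echo "namespace = $KUBE_NAMESPACE"
    # point 3: kubectl talks to the registered cluster in that namespace
    - kubectl get pods
```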

Kubernetes basic behavior:

When a namespace is created, Kubernetes automatically creates a service account for it, named « default ».

We are thus able to apply resources to that namespace (pod, deployment, service, secret, volume…).

Kubernetes interaction with the container registry:

To pull images from a private registry that requires authentication, Kubernetes needs some configuration: either an imagePullSecrets field set in the pod resource, or the service account of the current namespace configured with that information.

We will use the second way, which is more generic and practical.

So in the first lines of the deploy stage we:

– create in that namespace a secret resource that allows access to the registry

– update the service account of that namespace to use that secret to pull images.
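For comparison, the first way (declaring the secret on the pod itself) could look like the sketch below. gitlab-registry is the secret name created in the deploy job; the pod name, container name and image are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod                      # hypothetical name
spec:
  imagePullSecrets:
    - name: gitlab-registry              # docker-registry secret that must exist in the pod's namespace
  containers:
    - name: app                          # hypothetical container name
      image: registry.example.com/group/app:1.0.0   # placeholder image from a private registry
```

The drawback is that every pod template has to repeat the field, whereas patching the default service account covers all pods of the namespace.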

Common issues with Kubernetes

Problem: kubectl apply -f ... doesn’t fail and Kubernetes creates the pod, but the pod is not READY and stays in the ImagePullBackOff status, along with a « denied: access forbidden » event.

kubectl describe pod foo-pod returns something like:

Normal Scheduled 56s default-scheduler Successfully assigned spring-boot-docker-kubernetes-example-32-dev/spring-boot-docker-kubernetes-example-sboot-7b964b78b5-5wjps to david-virtual-machine

Normal BackOff 24s (x3 over 53s) kubelet Back-off pulling image "registry.david.com:5050/kubernetes-projects/spring-boot-docker-kubernetes-example:1.0.0"

Warning Failed 24s (x3 over 53s) kubelet Error: ImagePullBackOff

Normal Pulling 10s (x3 over 54s) kubelet Pulling image "registry.david.com:5050/kubernetes-projects/spring-boot-docker-kubernetes-example:1.0.0"

Warning Failed 10s (x3 over 53s) kubelet Failed to pull image "registry.david.com:5050/kubernetes-projects/spring-boot-docker-kubernetes-example:1.0.0": rpc error: code = Unknown desc = Error response from daemon: Head https://registry.david.com:5050/v2/kubernetes-projects/spring-boot-docker-kubernetes-example/manifests/1.0.0: denied: access forbidden

Warning Failed 10s (x3 over 53s) kubelet Error: ErrImagePull

Solution: create a docker-registry secret with kubectl and make the service account of the namespace (or the pod) use it to pull images, as done in the first lines of the deploy job above.

Problem: the GitLab Runner looks for the image in the official Docker registry while we don’t want it to.

If we don’t use a Docker registry for Kubernetes, the GitLab job running on k8s may contact the official Docker registry and fail if it is not reachable.

Symptom: look for events in the GitLab Runner process/container that show a failed image pull.

Solution: manually pull the image on the Docker node that runs the pod and set the imagePullPolicy attribute of the deployment/pod to Never to prevent any error related to pulling.
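A minimal sketch of that setting on a pod follows; the pod name, container name and image are placeholders, and the image is assumed to be already present on the node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-pod                  # hypothetical name
spec:
  containers:
    - name: app                      # hypothetical container name
      image: my-local-image:1.0.0    # placeholder: must already exist on the node that runs the pod
      imagePullPolicy: Never         # never contact a registry; the container fails to start if the image is absent
```

Note that the value is case-sensitive: Kubernetes accepts Always, IfNotPresent and Never.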

Problem: the Kubernetes master contacted by the GitLab Runner doesn’t manage to get the pod running. It stays in the Pending state.

Symptom: the CI pipeline is blocked with a message that loops forever, such as the following (where gitlab is the k8s namespace used by the k8s service account to deploy a pod from the GitLab Runner):

Waiting for pod gitlab/runner-t3jt2q9b-project-230-concurrent-aser6h to be running, status is Pending

Waiting for pod gitlab/runner-t3jt2q9b-project-230-concurrent-aser6h to be running, status is Pending

51s Warning FailedCreatePodSandBox pod/runner-xxxxxxxx-project-115-concurrent-yyyyy Failed to create pod sandbox: rpc error: code = Unknown desc = failed to create a sandbox for pod "runner-t3jt2q6a-project-115-concurrent-0mfkf8": operation timeout: context deadline exceeded

50s Normal SandboxChanged pod/runner-xxxxxxxx-project-115-concurrent-yyyyy Pod sandbox changed, it will be killed and re-created.

Step one: diagnose the issue with the checks below.

Step two: if something appears to prevent the pod from running (memory issue, node toleration issue, and so on…) or appears to be blocking at the cluster level, fix that.

– Check the details and logs of the pod (it has two containers: build and helper):

kubectl -n gitlab describe pod runner-t3jt2q9b-project-230-concurrent-aser6h
kubectl -n gitlab logs runner-t3jt2q9b-project-230-concurrent-aser6h -c helper
kubectl -n gitlab logs runner-t3jt2q9b-project-230-concurrent-aser6h -c build

– Check the events of the gitlab namespace and of the whole cluster:

kubectl -n gitlab get events
kubectl get events -A

– Check the state of the kube-system components:

kubectl -n kube-system logs kube-scheduler-hostnameofmaster
kubectl -n kube-system logs kube-apiserver-hostnameofmaster
kubectl -n kube-system logs kube-controller-manager-hostnameofmaster

and so on…

Problem: logs such as the one below mean that there is an issue with the credentials/certificates used to access the cluster:

error: You must be logged in to the server (the server has asked for the client to provide credentials ( pods/log kube-scheduler-hostnameofmaster)

Solution:

– restart kubelet

– if that is not enough, kill the kube-XXX Docker containers (scheduler, apiserver, controller-manager). Killing the Docker child containers should be enough (that is, those not prefixed with « k8s_POD_ »).

GitLab Runner cache

Physical location

Its location depends on how the runner application was installed: locally or with Docker.

Local install: stored under the gitlab-runner user’s home directory: /home/gitlab-runner/cache/<user>/<project>/<cache-key>/cache.zip.

Docker install: stored on the Docker host as a volume: /var/lib/docker/volumes/<volume-id>/_data/<user>/<project>/<cache-key>/cache.zip.

About the Docker way: a distinct cache is created per user/project/cache-key, and so a distinct volume too. Volume ids have long generated names such as:

runner-84exxmn--project-23046492-concurrent-0-cache-3c3f060a0374fc8bc39395164f415a70

Caches/volumes are created/read on the fly by the GitLab Runner during the CI execution, so these cache volumes are never mounted on the GitLab Runner container.

Enable caching

No caching is enabled by default. We enable it with the cache: keyword.

The cache is shared between pipelines of the same project. Caches are not shared across projects.

Caching availability for jobs

Caching may be declared at the pipeline (root) level to make it available to every job, or at the level of specific jobs to make it available only to those jobs.

It depends on where the cache: keyword is declared: job(s) or root level.
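The two declaration levels can be sketched as follows. Job names, cache keys and the node_modules path are illustrative assumptions, not taken from the pipeline above.

```yaml
# .gitlab-ci.yml (sketch)
stages:
  - build
  - test

# Root level: every job inherits this cache unless it declares its own
cache:
  key: maven-repo              # hypothetical fixed key
  paths:
    - .m2/repository

Build:                         # uses the root-level cache
  stage: build
  script:
    - mvn clean package

Frontend Test:                 # job-level cache: replaces the root-level one for this job only
  stage: test
  cache:
    key: node-modules          # hypothetical key
    paths:
      - node_modules
  script:
    - npm test
```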

Caching scope

A cache may be created for a specific branch, or shared across several or even all branches.

We define it with the key attribute.

Caching content

We define the directories and/or files to cache with paths:, which accepts a list of elements.

Example: caching the .m2/repository folder with a distinct cache for each branch/tag name, usable only in that specific job:
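A minimal sketch of such a job, modeled on the Maven Package job of the complete pipeline shown earlier (the Maven command is simplified):

```yaml
stages:
  - maven-build

Maven Package:
  stage: maven-build
  image: maven:3.6.3-jdk-11
  variables:
    # make Maven store its local repository inside the project directory so that it can be cached
    MAVEN_OPTS: "-Dmaven.repo.local=$CI_PROJECT_DIR/.m2/repository"
  cache:
    key: ${CI_COMMIT_REF_NAME}   # one cache per branch/tag name
    paths:
      - .m2/repository
  script:
    - mvn clean package
```

Replacing the key with a fixed string would instead share a single cache across all branches.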
