Used Zammad version: 3.1.x

Used Zammad installation source: package

Operating system: Cent OS 7

Elastic Search stopped working yesterday. I can simply restart it. But eventually someone know why it stopped?

[admin@zammad zammad]$ sudo systemctl status elasticsearch

[sudo] Passwort für admin:

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)

Active: failed (Result: signal) since Do 2019-09-26 19:48:52 CEST; 11h ago

Docs: http://www.elastic.co

Process: 1772 ExecStart=/usr/share/elasticsearch/bin/elasticsearch -p ${PID_DIR}/elasticsearch.pid --quiet -Edefault.path.logs=${LOG_DIR} -Edefault.path.data=${DATA_DIR} -Edefault.path.conf=${CONF_DIR} (code=killed, signal=KILL)

Process: 1764 ExecStartPre=/usr/share/elasticsearch/bin/elasticsearch-systemd-pre-exec (code=exited, status=0/SUCCESS)

Main PID: 1772 (code=killed, signal=KILL)

Sep 26 19:48:52 zammad systemd[1]: elasticsearch.service: main process exited, code=killed, status=9/KILL

Sep 26 19:48:52 zammad systemd[1]: Unit elasticsearch.service entered failed state.

Sep 26 19:48:52 zammad systemd[1]: elasticsearch.service failed.

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

Maybe you find some errors in the log file?

less /var/log/elasticsearch/elasticsearch.log (exit with “q”

)

)

Which version of elasticsearch are you using?

bash /usr/share/elasticsearch/bin/elasticsearch --version

Cheers, Daniel.

Thanks Daniel,

I can’t find any error in the log. I guess the log emptied after the restart of elastic search. I am still at version 5.6.16.

It happens just every 2-3 months. I monitor Zammad and usually I can simply restart elastic search. I will take a look at the logs the next time it happens.

Thanks,

“Killed” sounds like you had insufficient memory left and your kernel killed a process.

Linux-Systems love to do that, this action can be dangerous, because you can’t control what it kills.

I’ve seen systems that killed just the webserver or a single database process to clear memory.

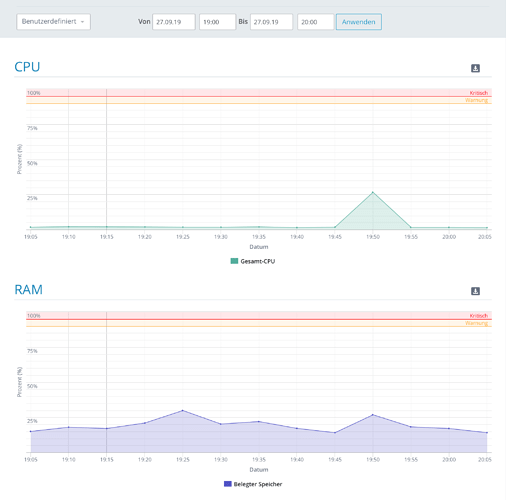

You might want to monitor your memory usuage, you should see a memory usuage drop around the time the kill entry appears.

Edit: This might be out of our scope, this is a configuration question of your system itself. :x

Thanks for the help so far. After I restarted Elasticsearch this morning, Zammad worked fine all day. Tonight, almost exactly 24 hours later, the same happened again. I have had any kind of error with Elasticsearch only 1-2 times before and in the past, it always was related to updates or at least something done to the server.

Yesterday and today or even the complete past week, nothing was done on the server. No updates or whatsoever. The server is only used for Zammad.

What happens at 19:49 h is the daily backup through “Acronis Anydata” (Standard Backup solution for 1&1/ionos Cloud Server). But the backup console doesn’t show any errors and the monitoring of 1&1/ionos does not show any alerts for CPU or RAM.

Same error message today:

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)

Active: failed (Result: signal) since Fr 2019-09-27 19:49:08 CEST; 1h 29min ago

Docs: http://www.elastic.co

Process: 51137 ExecStart=/usr/share/elasticsearch/bin/elasticsearch -p ${PID_DIR}/elasticsearch.pid --quiet -Edefault.path.logs=${LOG_DIR} -Edefault.path.data=${DATA_DIR} -Edefault.path.conf=${CONF_DIR} (code=killed, signal=KILL)

Process: 51135 ExecStartPre=/usr/share/elasticsearch/bin/elasticsearch-systemd-pre-exec (code=exited, status=0/SUCCESS)

Main PID: 51137 (code=killed, signal=KILL)

Sep 27 07:36:54 zammad systemd[1]: Starting Elasticsearch...

Sep 27 07:36:54 zammad systemd[1]: Started Elasticsearch.

Sep 27 19:49:08 zammad systemd[1]: elasticsearch.service: main process exited, code=killed, status=9/KILL

Sep 27 19:49:08 zammad systemd[1]: Unit elasticsearch.service entered failed state.

Sep 27 19:49:08 zammad systemd[1]: elasticsearch.service failed.

If I run less /var/log/elasticsearch/elasticsearch.log and go to the end (with “G”) it doesn’t show any entries around that time. The latest entries are from several hours before.

[2019-09-27T11:33:09,658][WARN ][o.a.p.p.f.PDType1Font ] Using fallback font LiberationSans for Helvetica-Bold

[2019-09-27T11:33:09,673][WARN ][o.a.p.p.f.PDType1Font ] Using fallback font LiberationSans for Helvetica

[2019-09-27T11:33:09,673][WARN ][o.a.p.p.f.PDType1Font ] Using fallback font LiberationSans for Helvetica-Bold

[2019-09-27T11:33:10,534][INFO ][o.e.m.j.JvmGcMonitorService] [TNWfDTe] [gc][young][14131][53] duration [800ms], collections [1]/[1.4s], total [800ms]/[18.5s], memory [171.5mb]->[185.6mb]/[1.9gb], all_pools {[young] [524.4kb]->[732.3kb]/[133.1mb]}{[survivor] [16.6mb]->[16.6mb]/[16.6mb]}{[old] [154.4mb]->[168.3mb]/[1.8gb]}

[2019-09-27T11:33:10,558][WARN ][o.e.m.j.JvmGcMonitorService] [TNWfDTe] [gc][14131] overhead, spent [800ms] collecting in the last [1.4s]

[2019-09-27T11:34:42,587][INFO ][o.e.m.j.JvmGcMonitorService] [TNWfDTe] [gc][14223] overhead, spent [251ms] collecting in the last [1s]

[2019-09-27T12:44:24,903][WARN ][o.e.m.j.JvmGcMonitorService] [TNWfDTe] [gc][18404] overhead, spent [726ms] collecting in the last [1.2s]

The error must have something to do with the backup process. But I don’t know what to check.

I will restart Elasticsearch again and than see what happens tomorrow night. I will probably get the same error tomorrow and might restart the server completely. But many thanks upfront if anyone has a tip what to check.

Thanks,

I had no troubles on Saturday and Sunday but usually no agent is working on weekends. Monday night, same issue.

I then rebooted the server completely and since then the problem is gone. I expect some kind of cache that was out of memory. Since the server was running without a reboot for at least 4-5 months, I won’t look further for any solutions. I can reboot it each 3 months and hope that the issue does not reoccur.

If someone has a tip where to look for, I will be happy to check it.

Thanks,

Hmm maybe this is a swap issue?

You might want to check for “swappiness” and could decrease its value if it’s too high.

Normally I’ve only seen swap issues on ubuntu that could cause such issues.